2021WGR_OMB_SupportingStatement-PartB_vdraft1_20210326 (002)

Workplace and Gender Relations Survey (Active/Reserve)

OMB: 0704-0615

SUPPORTING STATEMENT – PART B

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

If the collection of information employs statistical methods, it should be indicated in Item 17 of OMB Form 83-I, and the following information should be provided in this Supporting Statement:

1. Description of the Activity

Describe the potential respondent universe and any sampling or other method used to select respondents. Data on the number of entities covered in the collection should be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate the expected response rates for the collection as a whole, as well as the actual response rates achieved during the last collection, if previously conducted.

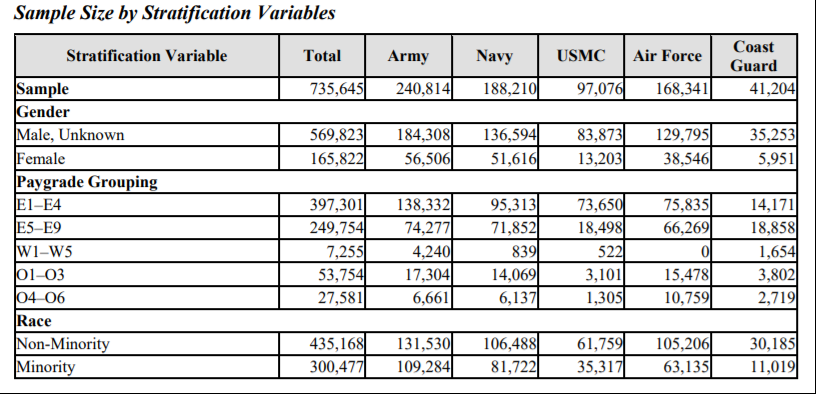

The WGR surveys will use single-stage, non-proportional stratified random sampling to identify eligible DoD participants in order to achieve precise estimates for important reporting categories (e.g., gender, Service, pay grade). The total sample size for the Active component will be approximately 735,000 (including a census of the Coast Guard) and is based on precision requirements for key reporting domains (prevalence rates by Service, gender, and pay grade). Given anticipated eligibility and response rates, an optimization algorithm will determine the minimum-cost allocation that simultaneously satisfies the domain precision requirements. Anticipated eligibility and response rates for the Active component will be based on the 2018 WGR of Active Duty Members (Table 1), which had a weighted response rate of 18%.

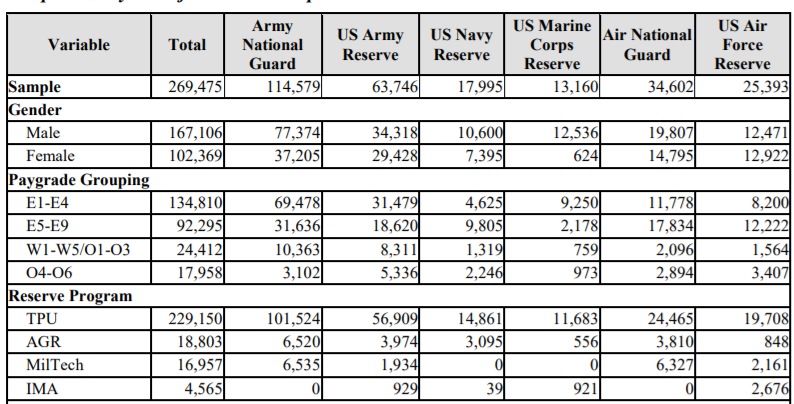

The total sample size for the Reserve component will be approximately 250,000 and is based on precision requirements for key reporting domains. Given anticipated eligibility and response rates, an optimization algorithm will determine the minimum-cost allocation that simultaneously satisfies the domain precision requirements. Anticipated eligibility and response rates for the Reserve component will be largely based on the 2019 WGR of Reserve Component Members (Table 2), which had a weighted response rate of 15%. However, the 2021 WGR of Reserve component members will include a census of the Coast Guard Reserve (approximately 6,300 members).

Because precise estimates of reported sexual assault rates are required even for the smallest domains (e.g., Marine Corps women), a sizable sample is necessary.

Table 1. 2018 Active Component Sample Size by Stratification Variables

Table 2. 2019 Reserve Component Sample Size by Stratification Variables

The potential respondent universe may expand to the entire Active component (e.g., when doing so would not conflict with other OPA surveys being administered at the same time). In these cases, a five- to seven-question module of the WGR survey will be open to members of the Active component who are not in the study sample. In other words, OPA may allow Active component members who learn about the survey and wish to participate, but were not randomly selected into the study sample, to respond to a limited set of questions regarding their workplace. Providing this option has the broader goal of ensuring that Service members who wish to share their experiences vis-à-vis their workplace have a means to do so. Data from “out of sample” respondents will not be combined with the sampled members’ responses for weighting and analysis purposes. Instead, responses from “out of sample” respondents may be used in separate analyses of Service member perceptions of organizational climate.

2. Procedures for the Collection of Information

Describe any of the following if they are used in the collection of information:

a. Statistical methodologies for stratification and sample selection;

Within each stratum, individuals will be selected with equal probability and without replacement. However, because allocation of the sample will not be proportional to the size of the strata, selection probabilities will vary among strata, and individuals will not be selected with equal probability overall. Non-proportional allocation will be used to achieve adequate sample sizes for small subpopulations of analytic interest for the survey reporting domains. These domains will include subpopulations defined by the stratification characteristics, as well as others.
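The selection scheme described above can be illustrated with a minimal sketch. The stratum names, frame sizes, and allocation below are hypothetical and are not taken from the WGR design; the sketch only shows that equal-probability selection within strata, combined with a non-proportional allocation, produces selection probabilities that differ across strata.

```python
import random


def select_stratified_sample(frame, allocation, seed=0):
    """Draw a simple random sample without replacement within each stratum.

    frame      -- dict mapping stratum name -> list of member identifiers
    allocation -- dict mapping stratum name -> number of members to select
    Returns (sample, probs): the selected members and the selection
    probability n_h / N_h that applies to every member of each stratum.
    """
    rng = random.Random(seed)
    sample, probs = {}, {}
    for stratum, members in frame.items():
        n_h = min(allocation[stratum], len(members))
        sample[stratum] = rng.sample(members, n_h)  # equal probability, no replacement
        probs[stratum] = n_h / len(members)
    return sample, probs


# Hypothetical frame: one large stratum and one small stratum.
frame = {
    "large_stratum": list(range(1000)),
    "small_stratum": list(range(1000, 1100)),
}
# Non-proportional allocation: the small stratum is oversampled.
allocation = {"large_stratum": 100, "small_stratum": 50}

sample, probs = select_stratified_sample(frame, allocation)
for stratum in frame:
    print(stratum, len(sample[stratum]), probs[stratum])
```

In this toy case every member of the small stratum has a selection probability five times that of a member of the large stratum, which is why weights are needed at the estimation stage.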

The OPA Sample Planning Tool, Version 2.1 (Dever and Mason, 2003) will be used to accomplish the allocation. This application will be based on the method originally developed by J. R. Chromy (1987), and is described in Mason, Wheeless, George, Dever, Riemer, and Elig (1995). The Sampling Tool defines domain variance equations in terms of unknown stratum sample sizes and user-specified precision constraints. A cost function is defined in terms of the unknown stratum sample sizes and per-unit costs of data collection, editing, and processing. The variance equations are solved simultaneously, subject to the constraints imposed, for the sample size that minimizes the cost function. Eligibility rates modify estimated prevalence rates that are components of the variance equations, thus affecting the allocation; response rates inflate the allocation, thus affecting the final sample size.
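For readers unfamiliar with this class of problem, the allocation can be written in a generic textbook form. The notation below is an assumption introduced for illustration and is not confirmed to match the equations implemented in the Sample Planning Tool: n_h and N_h are the sample and population sizes in stratum h, c_h is the per-unit cost, W_{dh} and S_{dh}^2 are the stratum weight and unit variance contributing to domain d, and V_d is the maximum variance allowed by the precision constraint for domain d.

```latex
\begin{aligned}
\min_{n_1,\dots,n_H}\quad & C(n) = \sum_{h=1}^{H} c_h\, n_h \\
\text{subject to}\quad & \sum_{h=1}^{H} W_{dh}^{2}\left(\frac{1}{n_h}-\frac{1}{N_h}\right) S_{dh}^{2} \le V_d,
  \qquad d = 1,\dots,D, \\
& 1 \le n_h \le N_h, \qquad h = 1,\dots,H.
\end{aligned}
```

Under this formulation, a tighter precision requirement lowers V_d and pushes the solution toward larger n_h in the strata that contribute most to that domain's variance.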

b. Estimation procedures;

Eligible respondents will be weighted in order to make inferences about the entire active duty or Reserve component populations. The weighting will follow standard processes. In the first step, each sampled member will be assigned a base weight equal to the reciprocal of the selection probability. In the second step, the base weights will be adjusted for non-response (eligibility and completion of the survey). Finally, the adjusted weights will be post-stratified to known population totals to reduce bias in the estimates. Variance strata will then be created so that precision measures can be associated with each estimate. Estimates will be produced for reporting categories using 95% confidence intervals with the goal of achieving a precision of 5% or less.
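The three weighting steps can be sketched as follows. This is a simplified illustration under stated assumptions, not OPA's production procedure: the non-response adjustment is done within sampling strata (the actual adjustment cells are not specified here), the eligibility adjustment is omitted, and all field names and numbers are hypothetical.

```python
from collections import defaultdict


def weight_respondents(sample, population_totals):
    """Return respondents with base, non-response-adjusted, and final weights.

    sample            -- list of dicts with keys 'stratum', 'selection_prob',
                         'responded', and 'post_stratum' (hypothetical names)
    population_totals -- dict mapping post-stratum -> known population count
    """
    # Step 1: base weight = reciprocal of the selection probability.
    for m in sample:
        m["base_weight"] = 1.0 / m["selection_prob"]

    # Step 2: non-response adjustment. Respondents in each stratum absorb
    # the base weight of that stratum's non-respondents (assumed cells).
    total_w, resp_w = defaultdict(float), defaultdict(float)
    for m in sample:
        total_w[m["stratum"]] += m["base_weight"]
        if m["responded"]:
            resp_w[m["stratum"]] += m["base_weight"]
    respondents = [m for m in sample if m["responded"]]
    for m in respondents:
        factor = total_w[m["stratum"]] / resp_w[m["stratum"]]
        m["nr_weight"] = m["base_weight"] * factor

    # Step 3: post-stratification to known population totals.
    ps_w = defaultdict(float)
    for m in respondents:
        ps_w[m["post_stratum"]] += m["nr_weight"]
    for m in respondents:
        factor = population_totals[m["post_stratum"]] / ps_w[m["post_stratum"]]
        m["final_weight"] = m["nr_weight"] * factor
    return respondents


# Hypothetical toy sample: stratum A sampled at 10%, stratum B at 50%.
toy_sample = [
    {"stratum": "A", "selection_prob": 0.1, "responded": True, "post_stratum": "women"},
    {"stratum": "A", "selection_prob": 0.1, "responded": True, "post_stratum": "men"},
    {"stratum": "A", "selection_prob": 0.1, "responded": False, "post_stratum": "women"},
    {"stratum": "A", "selection_prob": 0.1, "responded": False, "post_stratum": "men"},
    {"stratum": "B", "selection_prob": 0.5, "responded": True, "post_stratum": "women"},
    {"stratum": "B", "selection_prob": 0.5, "responded": False, "post_stratum": "men"},
]
toy_totals = {"women": 30, "men": 20}

weighted = weight_respondents(toy_sample, toy_totals)
for m in weighted:
    print(m["stratum"], m["post_stratum"], round(m["final_weight"], 2))
```

After the final step, the respondent weights within each post-stratum sum to the known population total for that group.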

c. Degree of accuracy needed for the purpose discussed in the justification;

OPA allocated the sample to achieve the goal of reliable precision on estimates for outcomes associated with reporting a sexual assault (e.g., retaliation) and other measures that were only asked of a very small subset of members, especially for males. Given estimated variable survey costs and anticipated eligibility and response rates, OPA used an optimization algorithm to determine the minimum-cost allocation that simultaneously satisfied the domain precision requirements. Response rates from previous surveys were used to estimate eligibility and response rates for all strata.

The allocation precision constraints will be imposed only on those domains of primary interest. Generally, the precision requirement will be based on a 95% confidence interval and an associated precision of 5% or less on each reporting category. Constraints will be manipulated to produce an allocation that will achieve satisfactory precision for the domains of interest at the target sample size of approximately 735,000 members for the Active component and approximately 250,000 for the Reserve component.
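To give a sense of scale, the arithmetic behind a single precision constraint can be sketched as below. This is a back-of-the-envelope illustration, not the allocation algorithm itself. It assumes that "a precision of 5% or less" means a 95% confidence interval half-width of at most 5 percentage points, uses the conservative proportion p = 0.5, and applies a hypothetical domain size of 10,000 members; the 18% response rate is the 2018 Active component figure cited earlier.

```python
import math


def required_completes(p, half_width, population, z=1.96):
    """Completed surveys needed so that the confidence interval half-width
    for a proportion p does not exceed half_width, with a finite population
    correction for a domain of the given size."""
    n0 = z ** 2 * p * (1 - p) / half_width ** 2
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


def required_sample(completes, response_rate):
    """Inflate the number of completes by the anticipated response rate."""
    return math.ceil(completes / response_rate)


# Hypothetical domain of 10,000 members, conservative p = 0.5.
completes = required_completes(p=0.5, half_width=0.05, population=10_000)
fielded = required_sample(completes, response_rate=0.18)
print(completes, fielded)
```

Repeating this calculation across many small reporting domains, several of which concern outcomes asked of only a small subset of members, helps explain why the overall sample sizes are large.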

d. Use of periodic or cyclical data collections to reduce respondent burden.

The WGR surveys occur on a biennial basis (i.e., every other year) in accordance with the congressional mandate.

3. Maximization of Response Rates, Non-response, and Reliability

Discuss methods used to maximize response rates and to deal with instances of non-response. Describe any techniques used to ensure the accuracy and reliability of responses is adequate for intended purposes. Additionally, if the collection is based on sampling, ensure that the data can be generalized to the universe under study. If not, provide special justification.

Multiple reminders will be sent to sample members during the survey field period to help increase response rates. Both postal letters and e-mails will be sent to sample members until they respond or indicate that they no longer wish to be contacted. The outreach communications will include text highlighting the importance of the surveys and signatures from senior DoD leadership (e.g., Service Chiefs, Senior Enlisted Advisors, and/or the Director of OPA). Additional outreach informing all Service members of the survey fielding is planned via military websites (e.g., Military OneSource), platforms (e.g., SAPR Connect), and social media (e.g., Instagram, Twitter, and Facebook).

The sample sizes will be determined based on prior response rates from similar active duty or Reserve component surveys. Given the anticipated response rates, OPA predicts enough responses will be received within all important reporting categories to make estimates that meet confidence and precision goals. A non-response bias study will be conducted to compare known values to weighted estimates from the survey and analyze missing data/drop-off.

4. Tests of Procedures

Describe any tests of procedures or methods to be undertaken. Testing of potential respondents (9 or fewer) is encouraged as a means of refining proposed collections to reduce respondent burden, as well as to improve the collection instrument utility. These tests check for internal consistency and the effectiveness of previous similar collection activities.

In accordance with the DoD Survey Burden Action Plan, we went to great lengths to develop a survey instrument that collects the information required to meet the congressional mandate and to support policy and program development and/or assessments. To accomplish this, we reviewed data from the 2018 WGR survey of the Active component and the 2019 WGR survey of the Reserve component to identify items that did not perform well, that yielded very little usable data, or that did not support information requirements. This process allowed us to delete 42 items from the survey. We also collaborated with leaders from each of the relevant policy offices (i.e., the DoD Sexual Assault Prevention and Response Office [SAPRO], the Office for Diversity, Equity, and Inclusion [ODEI], and the Office of Force Resiliency [OFR]) to identify their critical information needs and research questions. This led us to identify important gaps in knowledge regarding response to, and especially prevention of, unwanted gender-related behaviors that additional survey items could address, namely bystander intervention, leader behaviors, and the prevalence of sexist beliefs. We added survey items to address these information gaps.

New or revised survey items reflect the DoD’s renewed focus on the prevention of unwanted gender-related behaviors. More specifically, the survey instrument replaces some items included in prior years (e.g., questions about training) with items specifically designed to support prevention (e.g., sexual harassment and stalking items, additional bystander intervention items, and items related to sexist beliefs). In all cases, a review of the literature was used to identify peer-reviewed and validated scales for use in the survey instrument (where available).

In 2020, the Health & Resilience Division conducted cognitive interviews of Service members from each of the military Departments in order to: 1) gain insight as to the clarity of specific terminology or instructions; 2) ensure that key terms (e.g., “leader”, “immediate supervisor”, “chain of command”) had equivalent meanings; and, 3) understand whether Service member interpretation of specific questions aligned with what the study team intended to measure. These cognitive interviews are informative for survey improvement and will continue to inform improvements to the WGR survey instrument.

5. Statistical Consultation and Information Analysis

a. Provide names and telephone number of individual(s) consulted on statistical aspects of the design.

Mr. David McGrath, Chief, Statistical Methods Branch, OPA, (571) 372-0983, david.e.mcgrath.civ@mail.mil

b. Provide name and organization of person(s) who will actually collect and analyze the collected information.

Data will be collected by Data Recognition Corporation, OPA’s operations contractor. Contact information is listed below.

Ms. Valerie Waller, Senior Managing Director, Data Recognition Corporation, Valerie.Waller@datarecognitioncorps.com

Data will be analyzed by OPA social scientists and analysts. Contact information is listed below.

Dr. Rachel Breslin, Chief of Military Gender Relations Research, Health & Resilience Research Division – OPA, rachel.a.breslin.civ@mail.mil

Dr. Ashlea Klahr, Director, Health & Resilience Research Division – OPA, ashlea.m.klahr.civ@mail.mil

Ms. Lisa Davis, Deputy Director, Health & Resilience Research Division – OPA, elizabeth.h.davis18.civ@mail.mil

Dr. Jon Schreiner, Senior Survey Strategist, Health & Resilience Research Division – OPA, jonathan.p.schreiner.civ@mail.mil

Ms. Kimberly Hylton, Senior Researcher – Fors Marsh Group, kimberly.r.hylton.ctr@mail.mil

Mr. Mark Petusky, Operations Analyst – Fors Marsh Group, Mark.petusky.ctr@mail.mil

Ms. Alycia White, Operations Analyst – Fors Marsh Group, Alycia.white.ctr@mail.mil

Ms. Amanda Barry, Director, Military Health & Wellbeing Research – Fors Marsh Group, lseverance@forsmarshgroup.com

Ms. Margaret H. Coffey – Fors Marsh Group, mhcoffey@forsmarshgroup.com

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Patricia Toppings |

| File Modified | 0000-00-00 |

| File Created | 2021-04-22 |
