
Implementation Evaluation of the Title III National Professional Development Program

OMB: 1850-0959

Office of Management and Budget Clearance Request:

Supporting Statement

Part A—Justification (DRAFT)

Implementation Evaluation of the Title III National Professional Development Program

PREPARED BY:

American Institutes for Research®

1000 Thomas Jefferson Street, NW,

Suite 200

Washington, DC 20007-3835

PREPARED FOR:

U.S. Department of Education

Institute of Education Sciences

December 2020

American Institutes for Research® | 202.403.5000 | TTY 877.334.3499 | www.air.org

Contents

A1. Circumstances Making Collection of Information Necessary

A2. Purpose and Use of Information

A3. Use of Improved Technology to Reduce Burden

A4. Efforts to Avoid Duplication of Effort

A5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

A6. Consequences of Not Collecting the Data

A7. Special Circumstances Justifying Inconsistencies With Guidelines in 5 CFR 1320.6

A8. Federal Register Announcement and Consultation

Federal Register Announcement

Consultations Outside the Agency

A9. Payment or Gift to Respondents

A10. Assurance of Confidentiality

A11. Sensitive Questions

A12. Estimated Response Burden

A13. Estimate of Annualized Cost for Data Collection Activities

A14. Estimate of Annualized Cost to Federal Government

A15. Reasons for Changes in Estimated Burden

A16. Plans for Tabulation and Publication

A17. Display of Expiration Date for OMB Approval

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

Part A: Supporting Statement for Paperwork Reduction Act Submission

This package requests clearance from the U.S. Office of Management and Budget (OMB) to conduct data collection activities associated with the Implementation Evaluation of the Title III National Professional Development (NPD) Program. The purpose of this evaluation is to better understand the strategies that NPD grantees use to help educational personnel working with English learners (ELs) meet high professional standards and to improve classroom instruction for ELs. The Institute of Education Sciences (IES), within the U.S. Department of Education (the Department), has contracted with the American Institutes for Research® (AIR®) to conduct this evaluation.

Justification

A1. Circumstances Making Collection of Information Necessary

This evaluation is necessary because a key concern for states and school districts nationwide is how to meet the demand for teachers with the knowledge and skills to support ELs’ English proficiency, mastery of academic content, and social-emotional health. The number of ELs in grades K–12 has increased by approximately 30 percent since 2000–01 (Hussar et al., 2020), while nearly half of the Council of Great City Schools’ districts report an EL teacher shortage or anticipated shortage (Uro & Barrio, 2013). In 2009, only 20 percent of teacher preparation programs required at least one course on serving ELs (U.S. Government Accountability Office [GAO], 2009). Institutions of higher education (IHEs) cited the limited research base on which instructional practices work for EL students (Stephens, Halloran, & Xiao, 2012) as a challenge to improving how they prepare educators of ELs (GAO, 2009).

The NPD program, authorized by Title III of the Elementary and Secondary Education Act (ESEA), attempts to address the demand for better EL teacher education by supporting IHEs in partnership with states and districts. The goals of the NPD program are to help educational personnel working with ELs meet high professional standards and to improve classroom instruction for ELs. NPD provides grantees up to 5 years of funding (approximately $350,000 to $550,000 per year) to support a variety of activities for both preservice and in-service educators, such as teacher preparation coursework; credentialing support; professional development; and parent, family, and community engagement efforts to improve services for ELs. Since 2002, the Department has funded 484 NPD grants. Two currently active cohorts, encompassing 92 grants totaling $224 million, were funded in 2016 and 2017. Those grant competitions prioritized awarding funds to applicants that (1) incorporated strategies supported by moderate evidence of effectiveness and (2) featured a focus on parent, family, and community involvement. They also established invitational priorities that encouraged the use of dual language approaches and support for the early learning workforce.

Given the scope of the EL teacher education challenge and the federal investment in the NPD program, it is critically important that policymakers, administrators, and educators have access to information on the implementation of these grants. Although the Department conducted a small set of case studies in 2012–13 (Stephens, Cole, & Haynes, 2014), the sample was not representative, and the findings cannot be generalized to the entire NPD program or to the current grantees. A systematic assessment of the strategies used by the current grantees is needed to guide future grant competitions and evaluations in the area of teacher education for ELs. The proposed evaluation will provide an up-to-date look at the 92 current grantees’ goals, strategies used to meet those goals, changes made to teacher education programs, and challenges and successes in promoting educator capacity to serve ELs.

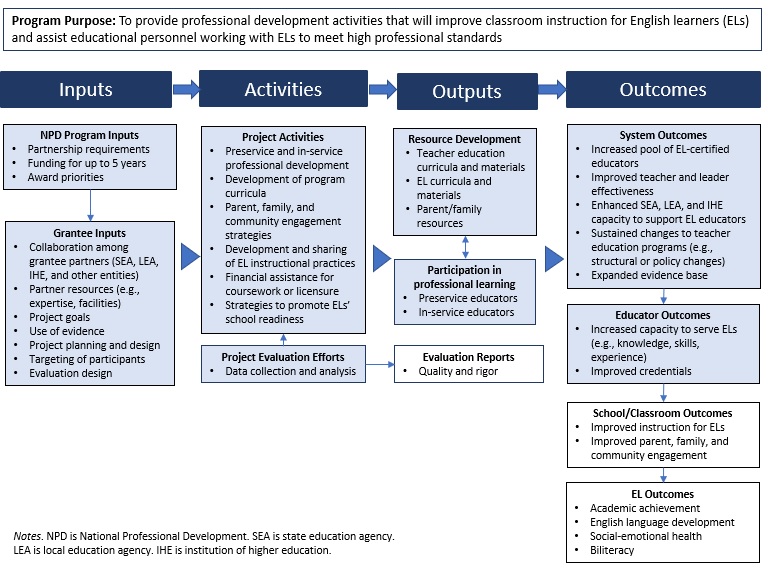

Exhibit A1 presents a logic model of the NPD program that will guide the data collection, analysis, and reporting for this evaluation. Because the evaluation is designed to examine implementation of the NPD program and not its impact on student outcomes, data collection will focus primarily on NPD program and grantee inputs, implemented activities, and outputs. However, the study team will collect some information on respondents’ perceived system and educator outcomes. The study’s principal areas of emphasis are delineated in blue in the logic model diagram.

Exhibit A1. Preliminary NPD Program Logic Model

This evaluation will rely on a survey of all 2016 and 2017 NPD grantees, a survey of a representative sample of NPD program participants, a systematic review of all grantees’ applications, interviews with state and district partners, and analyses of grantees’ annual performance data. The evaluation will address four primary evaluation questions (EQs):

1. What are the goals of NPD-funded projects, and what strategies are NPD grantees implementing to address those goals?

2. What factors facilitate or hinder grantees’ implementation of NPD program strategies? What challenges have NPD grantees and participants identified to adequately prepare EL teachers in general?

3. How have NPD grantees changed EL-related teacher preparation and professional development?

4. What are preservice and in-service educators’ perceptions of the content and usefulness of the NPD-supported activities in which they participated?

A2. Purpose and Use of Information

The evaluation includes several complementary data collection activities that will enable the study team to address the EQs. In particular, we will review the following extant data and request participant rosters from grantees:

Grantee application and extant data review: As a first step in examining the goals and strategies of NPD program projects for EQ 1, the study team will systematically review each of the 2016 and 2017 grantee applications posted on the Department’s website by using a structured rubric to categorize and tabulate key features of grantees’ planned activities. This review will enable the study team to understand the prevalence and range of goals and strategies that grantees planned to pursue. The study team also will examine data from annual Government Performance and Results Act (GPRA) performance measures that grantees are required to submit to the Department to explore the extent to which grantees are realizing outcomes in service of their project goals, such as increasing certification rates and improving classroom instruction for ELs.

Participant rosters: To enable sampling of participants for the online survey, grantees will be asked to submit rosters and contact information of the educators who have participated in their NPD-funded activities. Rosters will be requested from the project director or their designee.
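The tabulation step in the application review can be sketched in a few lines. The Python example below is a minimal sketch, not the study's actual procedure: it assumes each reviewed application has been coded as a set of rubric codes and reports the percentage of grantees whose application includes each code. The grantee IDs, the code labels (e.g., `dual_language`, `family_engagement`), and the `feature_prevalence` helper are hypothetical placeholders.

```python
from collections import Counter
from typing import Dict, Set


def feature_prevalence(coded_apps: Dict[str, Set[str]]) -> Dict[str, float]:
    """Return the percentage of grantees whose application includes each rubric code.

    coded_apps maps a (hypothetical) grantee ID to the set of rubric codes
    assigned to that grantee's application during the structured review.
    """
    n = len(coded_apps)
    if n == 0:
        return {}
    # Each code is counted at most once per grantee because the values are sets.
    counts = Counter(code for codes in coded_apps.values() for code in codes)
    # Most prevalent codes first; ties broken alphabetically for a stable order.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return {code: round(100 * c / n, 1) for code, c in ordered}


# Hypothetical coded applications for four grantees.
example = {
    "G01": {"dual_language", "family_engagement"},
    "G02": {"family_engagement", "coursework"},
    "G03": {"coursework"},
    "G04": {"family_engagement", "early_learning"},
}
print(feature_prevalence(example))
# {'family_engagement': 75.0, 'coursework': 50.0, 'dual_language': 25.0, 'early_learning': 25.0}
```

Storing codes as sets means a feature is counted once per grantee even if an application mentions it several times, which matches the aim of describing how many grantees planned each strategy.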

To obtain information on the implementation of NPD grants, we will administer grantee and participant surveys and interview a small number of state education agency (SEA) and local education agency (LEA) partners.

Grantee survey: The grantee survey, to be administered to all 92 active NPD projects, is designed to produce a fully representative picture of the strategies that grantees have implemented, specific changes that grantees made to their teacher education programs, and the steps that grantees have taken to sustain their strategies and outcomes after the grant period ends. The survey also will inquire about challenges in preparing educators to serve ELs effectively.

Participant survey: The participant survey will be administered to representative samples of preservice and in-service educator participants among the 92 active grantees to shed light on educators’ experiences engaging in NPD-supported professional learning activities. The survey will feature questions about the frequency with which participants had opportunities to learn about specific research-supported strategies for effective EL instruction as well as the perceived usefulness of those opportunities in enhancing their classroom instruction.

SEA/LEA partner interviews: To help guide interpretation of grantee survey and application review data, the study team will conduct telephone interviews with up to 9 SEA and LEA partners among the 92 grantees. These interviews will be designed to elicit state and district administrators’ perspectives on NPD grantee goals, strategies, implementation supports and challenges, and sustainability efforts.

IES requests clearance for all data collection activities except the extant data review and the SEA/LEA partner interviews. The Department has already gathered the extant data through the NPD program, and the SEA and LEA interviews will involve fewer than 10 respondents, below the Paperwork Reduction Act threshold of 10 or more respondents that defines an information collection. However, all data sources are included here to provide a comprehensive overview of the entire implementation study.

Exhibit A2 presents the data collection activities, data needs, EQs, respondents, modes, and schedule for the extant, survey, and interview data that the team will collect for the evaluation.

Exhibit A2. Data Collection Needs

| Data Collection Activity | Data Need | Evaluation Questions (EQs) | Respondent | Mode | Schedule |
|---|---|---|---|---|---|
| Extant Data | | | | | |
| Grantee Application Review | Project goals, design features (e.g., content, delivery modes, targeted participants); evidence base; evaluation plans; contextual factors | EQs 1 and 3 | NA | Retrieved from the U.S. Department of Education (Department) website | Fall 2020 |
| Analysis of Annual Performance Data | Participant completion rates, certification rates, and perceived effectiveness of the program | EQs 1, 2, and 3 | NA | Supplied by the Department | Winter 2021 |
| Participant Roster Request | List of educators who participated in National Professional Development (NPD) professional learning activities, to draw sample for participant survey | EQs 1, 2, and 4 | Grantee project director or state education agency (SEA)/local education agency (LEA) partner | Electronic communication | Spring 2021 |
| Surveys | | | | | |
| Grantee Survey | Project goals, partners, and participants; implemented activities; participant follow-up and tracking; steps to promote sustainability; teacher education challenges | EQs 1, 2, and 3 | NPD grantee project directors | Online questionnaire | Winter 2021 |
| Participant Survey | Experiences learning about research-supported strategies for effective English learner student instruction, perceived usefulness of those experiences, perceived benefits and challenges of NPD-supported professional development | EQs 1, 2, and 4 | Preservice and in-service educators who engaged in NPD-supported professional learning activities | Online questionnaire | Spring 2021 |
| Interviews | | | | | |
| SEA and LEA Partner Interviews | SEA and LEA context, project goals, partner roles, implementation supports and challenges, perceived outcomes, steps to promote sustainability, use of evidence | EQs 1, 2, and 3 | SEA or LEA administrators involved in implementing the grant | Telephone interview | Spring 2021 |

A3. Use of Improved Technology to Reduce Burden

The study team will use a variety of information technologies to maximize the efficiency and completeness of the information gathered for this study and to minimize the burden on NPD grantee and participant respondents:

Use of extant data. The analysis of grantees’ NPD program applications and annual performance data previously collected by the Department will enable the study team to gather details about grantee projects without imposing any burden on respondents.

Online surveys. The grantee and participant surveys will be administered through a web‑based platform to facilitate and streamline the response process.

Support for respondents. A toll‑free number and an e‑mail address will be available during the data collection process to enable respondents to contact members of the study team with questions or requests for assistance. The toll‑free number and e-mail address will be included in all communication with respondents.

A4. Efforts to Avoid Duplication of Effort

Whenever possible, the study team will use existing data, particularly grantees’ applications for NPD funding and annual performance reporting data. Use of these existing data will reduce the number of questions asked in the surveys, thus limiting respondent burden and minimizing duplication of previous data collection efforts and information.

A5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

The primary entities for the evaluation are IHE, state, district, and school staff. We will minimize burden for all respondents by requesting only the minimum data required to meet evaluation objectives. Burden on respondents will be further minimized through careful specification of information needs. We also will keep our data collection instruments short and focused on the data of greatest interest.

A6. Consequences of Not Collecting the Data

The data collection plan described in this submission is necessary for the Department to gain an up-to-date and representative picture of NPD program implementation. The NPD grant program represents a substantial federal investment, and failure to collect the data proposed through this study would limit the Department’s understanding of how the program supports educator preparation and professional learning needs with respect to serving ELs. Understanding the strategies and approaches that the grantees implement will enable federal policymakers and program managers to monitor the program and to provide useful, ongoing guidance to current and future grantees. In addition, the evaluation will help to identify strategies that show promise for rigorous, large-scale testing. This will help inform future efforts to build the evidence base on effective strategies for improving EL instruction and supports.

A7. Special Circumstances Justifying Inconsistencies With Guidelines in 5 CFR 1320.6

There are no special circumstances concerning the collection of information in this evaluation.

A8. Federal Register Announcement and Consultation

Federal Register Announcement

The 60-day notice to solicit public comments was published in Vol. 85, No. 191, pp. 61940-61941, of the Federal Register on October 1, 2020. Two sets of substantive public comments have been received to date. The commenters supported the data collection but offered suggestions regarding potential respondents, additional survey items, and revisions to the consent form. In response, the Department has added nearly all of the survey items suggested by one organization. These items address the recruitment of individuals underrepresented in the teaching workforce, increasing the pool of teachers qualified to teach ELs and students with disabilities, and the use of technology to enhance instruction for ELs. The second commenter raised concerns about the consent form, and the Department has added information on the data collection timeline, the mode of administration of the consent procedures, and the fact that participation is voluntary for NPD participants. One commenter also observed that some districts include data collection activities in teacher contract negotiations and that the proposed data collection activities would not be reflected in the current contract. However, because completion of the participant survey is voluntary (as the study communications make clear), this activity would fall outside of negotiated contract hours. The 30-day Federal Register notice will be published to solicit additional public comments.

Consultations Outside the Agency

The experts who formulated the study design and contributed to the data collection instruments include Kerstin Le Floch, Rebecca Bergey, Maria Stephens, and Andrea Boyle of AIR. In addition, the study team will convene a technical working group (TWG) of researchers and practitioners to provide input on the data collection instruments developed for this study as well as on other methodological design issues. The TWG will comprise researchers with expertise in issues such as ELs and their acquisition of English, academic performance, and social-emotional health; evidence-based curricula and strategies in language instruction educational programs; and EL teacher preparation, credentialing, and professional development. The study team will consult the TWG throughout the evaluation.

A9. Payment or Gift to Respondents

The Department and several decades of survey research support the benefits of offering incentives to achieve high response rates (Dillman, 2007; American Statistical Association and American Association for Public Opinion Research, 2016; Jacob & Jacob, 2012). Accordingly, we propose incentives for the participant survey to partially offset respondents’ time and effort in completing the survey. Specifically, we propose to offer a $20 incentive to preservice and in-service teachers who complete the participant survey, in recognition of the 40 minutes required to complete it. This proposed amount is within the incentive guidelines outlined in the March 22, 2005, “Guidelines for Incentives for NCEE Evaluation Studies” memo prepared for OMB. Incentives are proposed because high response rates are needed to ensure that the survey findings are reliable, and data from the participant survey are essential for understanding participants’ experiences engaging in NPD-supported professional learning activities.

No incentives will be offered to NPD grantee project directors because they are expected to complete the survey, and we believe that this expectation will be sufficient to obtain their responses (U.S. Department of Education, 2014).

A10. Assurance of Confidentiality

The study team is vitally concerned with maintaining the confidentiality and security of respondents’ records. The project staff have extensive experience in collecting information and maintaining the confidentiality, security, and integrity of survey and interview data. All members of the study team have completed certification in the protection of human subjects in research. This training addresses the importance of the confidentiality assurances given to respondents and the sensitive nature of data handling. The team also has worked with AIR’s institutional review board to seek and secure approval for this study, thereby ensuring that the data collection complies with professional standards and government regulations designed to safeguard research respondents.

The study team will conduct all data collection activities for this evaluation in accordance with all relevant regulations and requirements. These include the Education Sciences Reform Act of 2002, Title I, Part E, Section 183, which requires that the director of IES “develop and enforce standards designed to protect the confidentiality of persons in the collection, reporting, and publication of data.” The evaluation also will adhere to the requirements of subsection (d) of Section 183, which prohibits disclosure of individually identifiable information and makes the publishing or inappropriate communication of individually identifiable information by employees or staff a felony. Finally, the evaluation will adhere to the requirement of Section 183 that “[all] collection, maintenance, use, and wide dissemination of data by the Institute . . . conform with the requirements of section 552 of Title 5, United States Code, the confidentiality standards of subsection (c) of this section, and sections 444 and 445 of the General Education Provisions Act (20 U.S.C. 1232g, 1232h).” These citations refer to the Privacy Act, the Family Educational Rights and Privacy Act, and the Protection of Pupil Rights Amendment, respectively.

The study team will assure respondents that their confidentiality will be maintained, except as required by law. The following statement will be included under the Notice of Confidentiality in all voluntary requests for data:

Information collected for this study comes under the confidentiality and data protection requirements of the Institute of Education Sciences (the Education Sciences Reform Act of 2002, Title I, Part E, Section 183). Responses to this data collection will be used only for statistical purposes. The reports prepared for this study will summarize findings across the sample and will not associate responses with a specific district, school, or individual. We will not provide information that identifies you or your school or district to anyone outside the study team, except as required by law. Additionally, no one at your school or in your district will see your responses.

This study does not include the collection of sensitive information. All survey respondents will receive information regarding the survey topics, the ways in which the data will be used and stored, and the methods that will be used to maintain their confidentiality. Individual respondents will be informed that they may stop participating at any time. The goals of the study, the data collection activities, the risks and benefits of participation, and the uses for the data are detailed in an informed consent document, which all respondents will receive and read before any data collection activities begin.

The following safeguards are routinely required of contractors for IES to carry out confidentiality assurance, and they will be consistently applied to this study:

All data collection employees sign confidentiality agreements that emphasize the importance of confidentiality and specify employees’ obligations to maintain it.

Personally identifiable information (PII) is maintained on separate forms and files, which are linked only by sample identification numbers.

Access to a crosswalk file linking sample identification numbers to PII and contact information is limited to a small number of individuals who have a need to know this information.

Access to hardcopy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded materials are shredded.

Access to electronic files is protected by secure usernames and passwords, which are available only to approved users. Access to identifying information for sample members is limited to those who have direct responsibility for providing and maintaining sample crosswalk and contact information. At the conclusion of the study, these data are destroyed.

Sensitive data are encrypted and stored on removable storage devices that are kept physically secure when not in use.
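The separation of personally identifiable information (PII) from survey data described in the safeguards above can be illustrated with a short Python sketch. It assumes hypothetical records that combine contact fields and survey answers; the field names, the `PII_FIELDS` set, and the `split_pii` helper are illustrative and are not drawn from the study's systems. Each record receives a random sample identification number from Python's standard `secrets` module, so the ID itself reveals nothing about the person. The crosswalk (ID to PII) would be stored separately with restricted access, while the analysis file holds only IDs and responses.

```python
import secrets

# Hypothetical identifying fields; a real roster may contain others.
PII_FIELDS = {"name", "email"}


def split_pii(records):
    """Split records into a restricted crosswalk and a de-identified response file.

    Returns (crosswalk, responses): crosswalk maps each random sample ID to the
    PII fields only; responses maps the same ID to all remaining fields.
    """
    crosswalk, responses = {}, {}
    for record in records:
        # Random 8-hex-digit ID; redraw in the unlikely event of a collision.
        sample_id = secrets.token_hex(4)
        while sample_id in crosswalk:
            sample_id = secrets.token_hex(4)
        crosswalk[sample_id] = {k: v for k, v in record.items() if k in PII_FIELDS}
        responses[sample_id] = {k: v for k, v in record.items() if k not in PII_FIELDS}
    return crosswalk, responses


roster = [
    {"name": "Educator A", "email": "a@example.org", "q1": 4},
    {"name": "Educator B", "email": "b@example.org", "q1": 2},
]
crosswalk, responses = split_pii(roster)

# The response file carries no PII; only the sample ID links back to the crosswalk.
assert all(PII_FIELDS.isdisjoint(r) for r in responses.values())
assert crosswalk.keys() == responses.keys()
```

A production workflow would more likely assign IDs when rosters arrive and key survey responses to those IDs from the start; the combined input here only keeps the sketch short.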

The plan for maintaining confidentiality includes staff training on the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses. It also includes built-in safeguards concerning status monitoring and receipt control systems.

In addition, all electronic data will be protected through the use of several methods. The contractors’ internal networks are protected from unauthorized access, including through firewalls and intrusion detection and prevention systems. Access to computer systems is password protected, and network passwords must be changed regularly and must conform to the contractors’ strong password policies. The networks also are configured such that each user has a tailored set of rights, granted by the network administrator, to files approved for access and stored on the local area network. Access to all electronic data files associated with this study is limited to researchers on the data collection and analysis team. Online survey data will be collected using SurveyMonkey® or a comparably secure online platform. SurveyMonkey® stores data using SOC (Service Organization Controls) 2 accredited data centers that adhere to security and technical best practices. It ensures that collected data are transmitted via an HTTPS (hypertext transfer protocol secure) connection, that user logins are protected via a TLS (transport layer security) protocol, and that stored data are encrypted using industry-standard encryption algorithms and strength.

The study team will endeavor to protect the privacy of all survey respondents and interviewees and will avoid using their names in reports and attributing any quotes to specific individuals. Responses to the surveys and interviews will be used to summarize findings in an aggregate manner or will be used to provide examples of program implementation in a manner that does not associate responses with a specific grantee or individual.

A11. Sensitive Questions

No questions of a sensitive nature are included in this study.

A12. Estimated Response Burden

The total hour burden estimate for the data collections for the project is 1,781 hours: 92 hours for the grantee survey, 46 hours for collecting participant rosters, and 1,643 hours for the participant survey. The total estimated cost of $29,481.70 is based on the estimated average hourly wages of respondents. Exhibit A3 summarizes the estimates of respondent burden for the various project activities.

For the grantee survey, the study team expects to achieve a response rate of 100 percent and estimates a total burden of 92 hours (60 minutes per survey, with all 92 grantees to be surveyed).

The 46-hour burden estimate for the participant roster collection assumes that each of the 92 project directors will require 30 minutes to compile and submit a roster.

The 1,643-hour burden estimate for the participant survey assumes that 1,190 preservice educators and 1,275 in-service educators (85 percent of the 1,400 preservice and 1,500 in-service educators sampled) will each take 40 minutes to complete the survey.

Averaged over the 3-year clearance period, the annual sample size for this collection is 997 and the annual number of respondents is 852 (the 92 project directors are counted once, although they complete both the grantee survey and the roster request). The annual burden for this collection is 594 hours.

Exhibit A3. Summary of Estimated Response Burden

| Data collection activity | Respondent | Total sample size | Estimated response rate | Estimated number of respondents | Time estimate (minutes) | Total hour burden | Hourly rate | Estimated monetary cost of burden |
|---|---|---|---|---|---|---|---|---|
| Grantee Survey | Project directors (IHE or other entity) | 92 | 100% | 92 | 60 | 92 | $46.90 | $4,314.80 |
| Participant Roster Collection | Project directors (IHE or other entity) | 92 | 100% | 92 | 30 | 46 | $46.90 | $2,157.40 |
| Participant Survey | Preservice educators | 1,400 | 85% | 1,190 | 40 | 793 | $0.00 | $0.00 |
| Participant Survey | In-service educators | 1,500 | 85% | 1,275 | 40 | 850 | $27.07 | $23,009.50 |
| TOTAL | | 2,992 | | 2,557 | | 1,781 | | $29,481.70 |

Notes. IHE is institution of higher education. LEA is local education agency. SEA is state education agency.
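The burden figures in Exhibit A3 and the annual averages in this section follow from a few multiplications: respondents equal the sample size times the expected response rate, hours equal respondents times minutes per response divided by 60, and cost equals hours times the hourly rate. The Python sketch below recomputes them as a consistency check. Two assumptions are inferred from the published totals rather than stated in the exhibit: the 92 project directors are counted once in the total sample and respondent counts even though they complete both the grantee survey and the roster request, and hours are rounded to whole numbers (halves up) before costs are computed. Under those assumptions, the 2,649 total responses (project directors counted twice) average 883 per year over 3 years, the figure reported in A15.

```python
from decimal import Decimal, ROUND_HALF_UP

# Inputs transcribed from Exhibit A3:
# (activity, sample size, response rate, minutes per response, hourly rate in $, adds_new_people)
# adds_new_people is False for the roster request because it goes to the same
# 92 project directors who complete the grantee survey.
ROWS = [
    ("Grantee survey", 92, Decimal("1.00"), 60, Decimal("46.90"), True),
    ("Participant roster collection", 92, Decimal("1.00"), 30, Decimal("46.90"), False),
    ("Participant survey, preservice", 1400, Decimal("0.85"), 40, Decimal("0.00"), True),
    ("Participant survey, in-service", 1500, Decimal("0.85"), 40, Decimal("27.07"), True),
]
CLEARANCE_YEARS = 3


def whole(x: Decimal) -> int:
    """Round to a whole number, with halves rounded up (assumed rounding rule)."""
    return int(x.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def burden_summary(rows=ROWS, years=CLEARANCE_YEARS):
    """Return total and annualized sample, respondents, responses, hours, and cost."""
    totals = {"sample": 0, "respondents": 0, "responses": 0,
              "hours": 0, "cost": Decimal("0")}
    for _activity, sample, rate, minutes, wage, adds_new_people in rows:
        respondents = whole(sample * rate)                  # e.g., 1,400 x 0.85 = 1,190
        hours = whole(Decimal(respondents * minutes) / 60)  # whole hours, as in Exhibit A3
        totals["responses"] += respondents
        totals["hours"] += hours
        totals["cost"] += hours * wage                      # cost uses the rounded hours
        if adds_new_people:
            totals["sample"] += sample
            totals["respondents"] += respondents
    annual = {key: whole(Decimal(totals[key]) / years)
              for key in ("sample", "respondents", "responses", "hours")}
    return totals, annual


totals, annual = burden_summary()
print(totals)  # sample 2992, respondents 2557, responses 2649, hours 1781, cost 29481.70
print(annual)  # sample 997, respondents 852, responses 883, hours 594
```

Using `Decimal` rather than floating point keeps the dollar amounts exact to the cent, so the recomputed total can be compared directly with the $29,481.70 in the exhibit.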

A13. Estimate of Annualized Cost for Data Collection Activities

No additional annualized costs for data collection activities are associated with this data collection beyond the hour burden estimated in item A12.

A14. Estimate of Annualized Cost to Federal Government

The estimated cost to the federal government for this study, including development of the data collection plan and data collection instruments as well as data collection, analysis, and report preparation, is $640,975. Averaged over the 3-year clearance period, the annual cost to the federal government is approximately $213,658.

A15. Reasons for Changes in Estimated Burden

This is a new collection, and there is an annual program change increase of 594 burden hours and 883 responses.

A16. Plans for Tabulation and Publication

The evaluation team will produce a report featuring descriptive information about NPD program implementation to inform policymakers and other stakeholders, guide upcoming grant competitions, and shed light on EL teacher education research needs.

Analysis Plan

Evaluation question 1: What are the goals of NPD-funded projects, and what strategies are NPD grantees implementing to address those goals? We will address evaluation question 1 through descriptive analyses that draw on NPD grantee survey data, application review data, extant performance data, and participant survey data. Specifically, we will describe the range and prevalence of grantees’ intended outcomes, the types of activities implemented to yield those outcomes, and the extent to which implemented activities are supported by research. We also will explore grantees’ progress in achieving their desired goals, based on analyses of extant grantee performance data and participant survey data.

Evaluation question 2: What factors facilitate or hinder grantees’ implementation of NPD program strategies? What challenges have NPD grantees and participants identified to adequately prepare EL teachers in general? For evaluation question 2, we will describe grantee survey respondents’ reports of the conditions that have supported or impeded their ability to carry out planned grant activities. In addition, we will tabulate grantee project director and participant reports of the challenges that EL teacher education programs and EL educators face in improving their capacity to serve ELs.

Evaluation question 3: How have NPD grantees changed EL-related teacher preparation and professional development? Drawing primarily on grantee survey and application review data, we will explore the extent to which grantees’ approaches to implementing NPD activities feature actions to build long-term systemic capacity for promoting EL educator development and effective classroom instruction for ELs.

Evaluation question 4: What are preservice and in-service educators’ perceptions of the content and usefulness of the NPD-supported activities in which they participated? To enrich our understanding of the activities provided to preservice and in-service educators through the NPD program, the study team will use data from the participant survey to examine the extent to which NPD participants report engaging in professional learning experiences focused on effective instructional strategies for ELs and the extent to which they perceived those learning experiences to be useful for their classroom instruction. We will also compare preservice teachers’ reports about their NPD-supported preparation experiences related to effective instruction of ELs with those from a large national sample of preservice teachers, based on data collected by the IES-funded Study of Teacher Preparation Experiences and Early Teaching Effectiveness (Goodson et al., 2019).

Publication of Results

The contractor will use the data collected to prepare a report that clearly describes how the data address the key study questions, highlights key findings of interest to policymakers and educators, and includes charts and tables to illustrate the key findings. The report will be written in a manner suitable for distribution to a broad audience of policymakers and educators. We anticipate that the Department will approve and release this report by summer 2022. This final report will be made publicly available on both the Department website and the AIR website.

A17. Display of Expiration Date for OMB Approval

All data collection instruments will display the OMB approval expiration date.

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions to the certification statement identified in Item 19, “Certification for Paperwork Reduction Act Submissions,” of OMB Form 83-I are requested.

References

American Statistical Association and American Association for Public Opinion Research. (2016). Joint American Statistical Association/AAPOR statement on use of incentives in survey participation. Retrieved from https://www.aapor.org/Publications-Media/Public-Statements/AAPOR-Statement-on-Use-of-Incentives-in-Survey-Par.aspx

Dillman, D. A. (2007). Mail and internet surveys: The tailored design method (2nd ed., 2007 update). Hoboken, NJ: John Wiley & Sons.

Goodson, B., Caswell, L., Price, C., Litwok, D., Dynarski, M., Crowe, E., . . . Rice, A. (2019). Teacher preparation experiences and early teaching effectiveness (NCEE 2019-4007). Washington, DC: Institute of Education Sciences, U.S. Department of Education. Retrieved from https://files.eric.ed.gov/fulltext/ED598664.pdf

Hussar, B., Zhang, J., Hein, S., Wang, K., Roberts, A., Cui, J., . . . Dilig, R. (2020). The condition of education 2020 (NCES 2020-144). Washington, DC: National Center for Education Statistics, U.S. Department of Education. Retrieved from https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2020144

Jacob, R. T., & Jacob, B. (2012). Prenotification, incentives, and survey modality: An experimental test of methods to increase survey response rates of school principals. Journal of Research on Educational Effectiveness, 5(4), 401–418.

Stephens, M., Cole, S., & Haynes, E. (2014). Findings from case studies of current and former grantees of the National Professional Development Program (NPDP): Final report. Washington, DC: U.S. Department of Education.

Stephens, M., Halloran, C., & Xiao, T. (2012). A systematic review of the literature on approaches in pre-service and in-service teacher education programs for instruction of English learners. Washington, DC: U.S. Department of Education.

Uro, G., & Barrio, A. (2013). English language learners in America’s great city schools: Demographics, achievement, and staffing. Washington, DC: Council of the Great City Schools. Retrieved from https://files.eric.ed.gov/fulltext/ED543305.pdf

U.S. Department of Education. (2014, December 19). Education Department General Administrative Regulations (EDGAR), Sec. 75.591; 20 U.S.C. 1221e-3 and 3474.

U.S. Government Accountability Office. (2009, July). Teacher preparation: Multiple federal education offices support teacher preparation for instructing students with disabilities and English language learners, but systematic departmentwide coordination could enhance this assistance (GAO-09-573). Report to the Chairman, Subcommittee on Higher Education, Lifelong Learning, and Competitiveness, Committee on Education and Labor, House of Representatives. Washington, DC: Author.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Information Technology Group |


| File Created | 2021-01-13 |
