Volume II


NCES System Clearance for Cognitive, Pilot, and Field Test Studies 2019-2022


OMB: 1850-0803


National Center for Education Statistics

National Assessment of Educational Progress

Volume II

Protocols

NAEP Survey Assessments Innovations Lab (SAIL)

Dynamic Assessments

Cog Labs and Medium Scale Study

OMB# 1850-0803 v.275

October 2020

Table of Contents

Authorization and Confidentiality Assurance

Student Interviewer Welcome Script and Assent/Consent - Cognitive Interviews

Instructions and Generic Probes for Student Cognitive Interviews

Example Dynamic Assessment Scaffolds and Probes for Student Cognitive Interviews

General Debriefing and Thank You (For all student cognitive interviews)

In-platform Probe for Medium Scale study

Example Online Instructions for Individual Students (e.g., to be delivered via email)

Attachment 1: Sample Screenshots

Paperwork Burden Statement

The Paperwork Reduction Act and the NCES confidentiality statement are indicated below. Appropriate sections of this information are included in the consent forms and letters. The statements will be included in the materials used in the study.

Paperwork Burden Statement

According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless it displays a valid OMB control number. The valid OMB control number for this voluntary information collection is OMB #1850-0803 v.275. The time required to complete this information collection is estimated to average 90 minutes for the cognitive interview study and 45 minutes for the medium-scale study, including the time to review instructions and complete and review the information collection. If you have any comments concerning the accuracy of the time estimate, suggestions for improving this collection, or any comments or concerns regarding the status of your individual submission, please write to: National Assessment of Educational Progress (NAEP), National Center for Education Statistics (NCES), Potomac Center Plaza, 550 12th St., SW, 4th floor, Washington, DC 20202.

Authorization and Confidentiality Assurance

The National Center for Education Statistics (NCES) is authorized to conduct NAEP by the National Assessment of Educational Progress Authorization Act (20 U.S.C. §9622) and to collect students’ education records from education agencies or institutions for the purposes of evaluating federally supported education programs under the Family Educational Rights and Privacy Act (FERPA, 34 CFR §§ 99.31(a)(3)(iii) and 99.35). All of the information you provide may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law (20 U.S.C. §9573 and 6 U.S.C. §151).

OMB No. 1850-0803 v.275 Approval Expires 7/31/2023

The purpose of this study is to examine how supporting materials can help students show the limits of their understanding when working through challenging mathematical content. Below are the generic probes and example item-specific probes that students will be asked during the cognitive interviews. Please note that some probes or guiding questions may not be used if time does not permit.

Cognitive Interviews

Student Interviewer Welcome Script and Assent/Consent - Cognitive Interviews

The following script does not have to be read verbatim. You, as the interviewer, should be familiar enough with the script to introduce the participant to the cognitive interview process in a conversational manner. The text in italics is suggested content for you to become thoroughly familiar with in advance. You should project a warm and reassuring tone toward the participant in order to develop a friendly rapport. You should use conversational language throughout the interview.

After answering any questions and giving further explanation as needed, begin the interview with the first item.

“Thank you for agreeing to meet with us. We are working on making a new type of interactive test that would give students access to some extra information if needed, and we would like your help. I will read the instructions to you now. For your reference, a copy was also e-mailed to your parent, if you would like to follow along.

Instructions

In this activity, we will give you several problems to solve. Hopefully, they're also interesting.

Spend a couple of minutes trying to solve these problems on your own.

If you can't solve them on your own, look at the hints and help resources. [Interviewer will describe scaffolding in the current version of the system; e.g., pull hints, subproblem checkers, etc.] These are designed to help you figure out the problems. But do try to solve them yourself first, and to use as few resources as possible. Try to be strategic about which resources you need.

We have a range of problems we’re trying out with students. Some are easy, and some are hard. If you get some of the harder problems, think of them as puzzles. We'll give you enough background to solve them. As with most puzzles, you should be able to solve some of them, but you may not be able to solve all of them.

You will have 90 minutes to complete these problems.”

[Note: These may be adapted based on the study protocol. For example, if we withhold access to scaffolding for some time, instructions will reflect that. Instructions may be customized to the difficulty level of the items, or the amount of scaffolding provided.]

Did you know?

“You'll learn more from problems if you really think about them for a while before looking at hints or asking for help.

Some of these problems are hard; they're designed to really push you! If you can't solve them, even with hints, that's okay. Try your best!

You learn most quickly when you stretch your brain by trying really hard things.

Remember, this is a voluntary study. We are happy to have you here to help us, but if you wish to stop at any time or take a break, please let me know. If there is a question that you don’t feel comfortable answering, please let me know. You have the right to withdraw at any time.

Is your parent or guardian in the room with you?

[If parent is present] [PARENT NAME], you are welcome to stay and watch this interview, but we request that you not help your child in any way, and that you wait until the end of the interview to ask any questions you may have for the researcher.

We would like to audio record the interview. I will not say your name during the interview in order to protect your privacy. We may also record the screen share, but we will not keep any video recording of your face.

Do I have your permission to record it?”

[With the participant’s agreement, turn on the recording equipment.]

Begin the recording with:

“This is <insert your name>, it is <time> on <date>, and I am using the Dynamic Assessment Task interviewing <Student ID # ____>. Do you agree to participate in the study?”

[If the participant does not consent to recording, do not turn on the recording equipment; instead, take careful notes of all responses and interactions.]

If you have them available, you may use any of the following that you find helpful:

Blank paper, graph paper, lined paper, a pencil, an eraser, a calculator, a ruler

Point the participant to the first item, and say:

“You may begin.”

Instructions and Generic Probes for Student Cognitive Interviews

Once the participant has joined the video call, the interviewer will share his or her screen and give the participant control of the screen. If bandwidth issues make remote screen control impractical, the interviewer may ask the participant to load the assessment page in their own browser and share their screen with the interviewer instead. The interviewer will walk the participant through the assessment user interface using an item that is not part of the main assessment.

Table 1. Examples of generic probes that may be used across items

| No. | Probe | When to use (matrix sample; not all probes will be used with all students) |
|---|---|---|
| 1 | “Can you explain to me what the question is asking? Please try to explain without repeating the wording of the item.” | Ask this probe AFTER the respondent has answered the question. |
| 2 | “Were there any words or parts of this question that were confusing?” (Yes / No) | All or most questions, particularly if a student struggles with any words. |
| 3 | “What did you find confusing? What could we do to make the question less confusing?” | Ask both probes only if the respondent answered YES to the previous question. |
| 4 | “What made you decide to use [support material used] to help answer this question? Did you find it helpful? Do you think you could have answered this question without looking at it?” | Use to follow up on the particular support material(s) the student chose to interact with, if unclear. |
| 5 | “Are there any other things (for example, formulas or definitions) that you think would have helped you answer the problem?” | Use with students who were unable to solve the problem or required many scaffolds. |
| 6 | “How sure are you about your answer to this item?” (Very unsure / Unsure / Sure / Very sure) | Ask this probe for all discrete questions. |
| 7 | “Is the math in this question something you’ve learned how to do at school? How recently? Do you feel like you remember what you learned about it?” | Ask this probe for all questions that a student answers incorrectly or for which the student uses scaffolding materials. |

Example Dynamic Assessment Scaffolds and Probes for Student Cognitive Interviews

There may be probes that reference terms, phrases, or high-level topics that apply to one or more items. During the problem-solving process, these may resemble a tutoring interaction or a classical dynamic assessment. After the session, these might focus on specific issues that came up during the problem-solving process, to help develop more effective scaffolds and a more effective user interface. These questions may be asked on the fly, in response to observed student behavior, rather than being pre-scripted.

Table 2 gives examples of these probes, both during the dynamic assessment and after it, based on the student's interactions and problem-solving process. Of note, the level of interviewer scaffolding may vary between interviews, depending in part on what is being targeted during a particular interview. For example, an interviewer may give no scaffolds when evaluating how a student behaves with only the system-provided scaffolds, as would happen in future phases of system development. On the other hand, when evaluating which scaffolds to add or remove, the interviewer may provide more extensive verbal scaffolding to help determine which sorts of scaffolds are most effective.

Table 2. Examples of item-specific and cross-item probes

| No. | Probe | When to probe |
|---|---|---|
| 1 | “Do you remember the formula for the area of a triangle?” | During |
| 2 | “Can you try illustrating the problem?” | During |
| 3 | “Before looking at that hint, can you try thinking a little bit longer?” | During |
| 4 | “You were close, but you couldn’t figure out the area of a triangle. You clicked on the right resources. What might have made this resource more clear?” | After |
| 5 | “After seeing the third hint, you were able to solve the problem. What thought process did it trigger?” | After |

General Debriefing and Thank You (For all student cognitive interviews)

“Before we finish, I’d like to hear any other thoughts you have about the math questions we worked on today.

Is there anything else you would like to tell me about working on these questions?

What do you think about having these sorts of supports available on standardized exams, where grading is based in part on which supports you needed, so students who can do problems independently are encouraged to do so?

Is there anything that you think could make these questions better?”

[Researchers will stop the recording at this time.]

Thank the participant(s) for their time. Notify students that they will be sent their virtual gift card.

Medium Scale Study

In-platform Probe for Medium Scale study

After students complete the items, we may include a single open-ended question within the platform:

“Do you have any thoughts about this format of assessment, the items, or anything you’d like to share with the research team?”

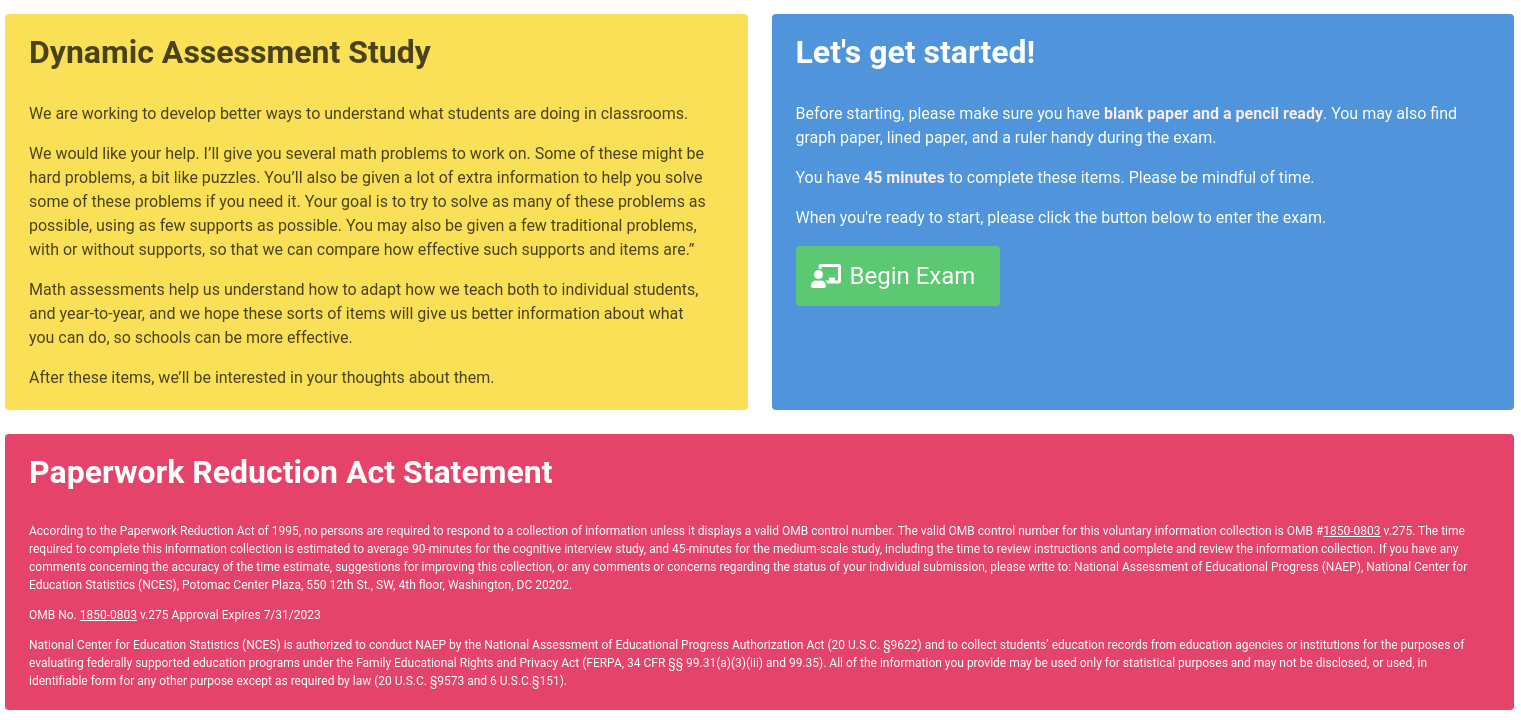

Example Online Instructions for Individual Students (e.g., to be delivered in the platform at the beginning of the assessment) - Medium Scale Study

“We are working to develop better ways to understand what students are doing in classrooms.

We would like your help. I’ll give you several math problems to work on. Some of these might be hard problems, a bit like puzzles. You’ll also be given a lot of extra information to help you solve some of these problems if you need it. Your goal is to try to solve as many of these problems as possible, using as few supports as possible. You may also be given a few traditional problems, with or without supports, so that we can compare how effective such supports and items are.”

[Overview of how to use this system.]

“Math assessments help us understand how to adapt our teaching, both for individual students and from year to year. We hope these sorts of items will give us better information about what you can do, so that schools can be more effective.

After these items, we’ll be interested in your thoughts about them.”

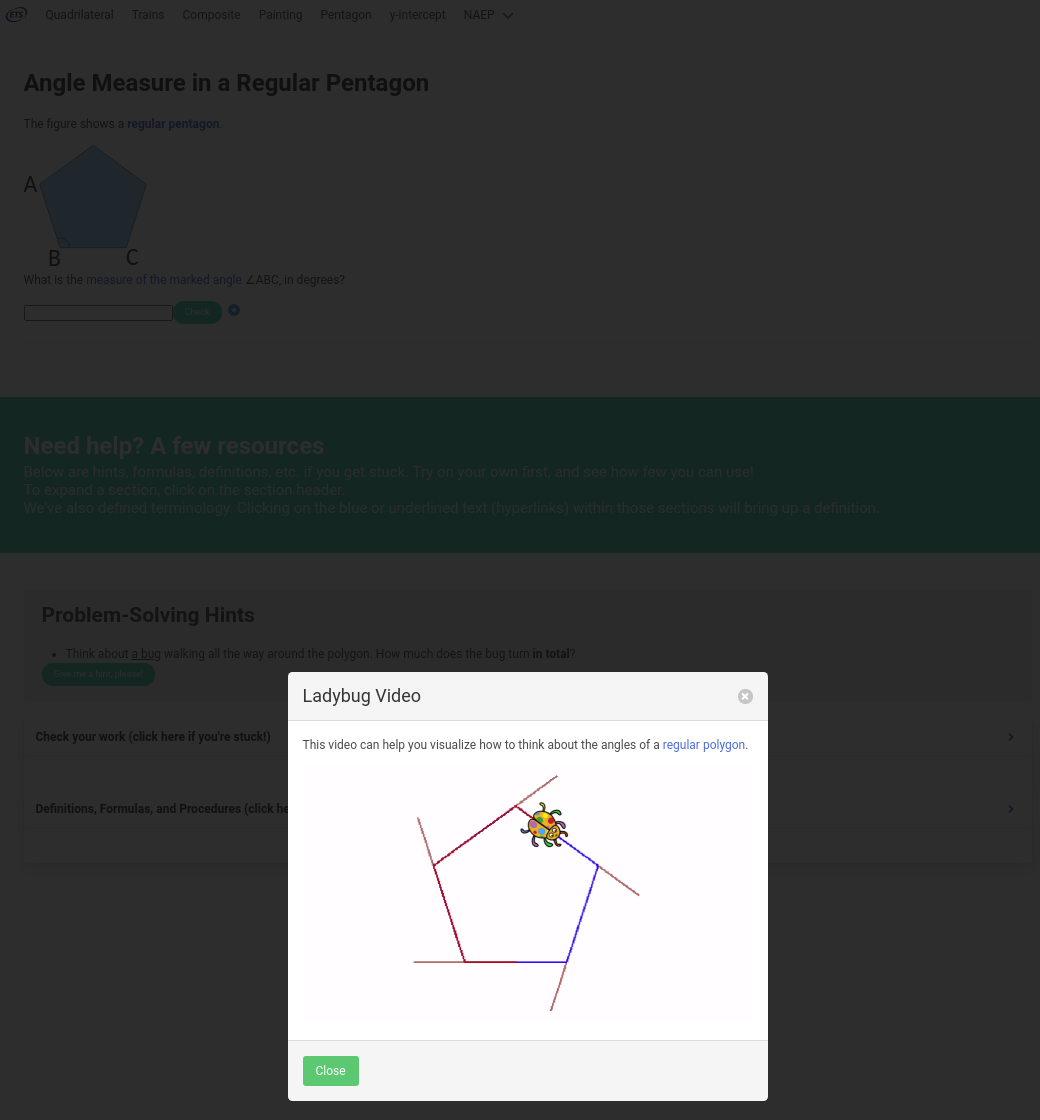

Attachment 1: Sample Screenshots

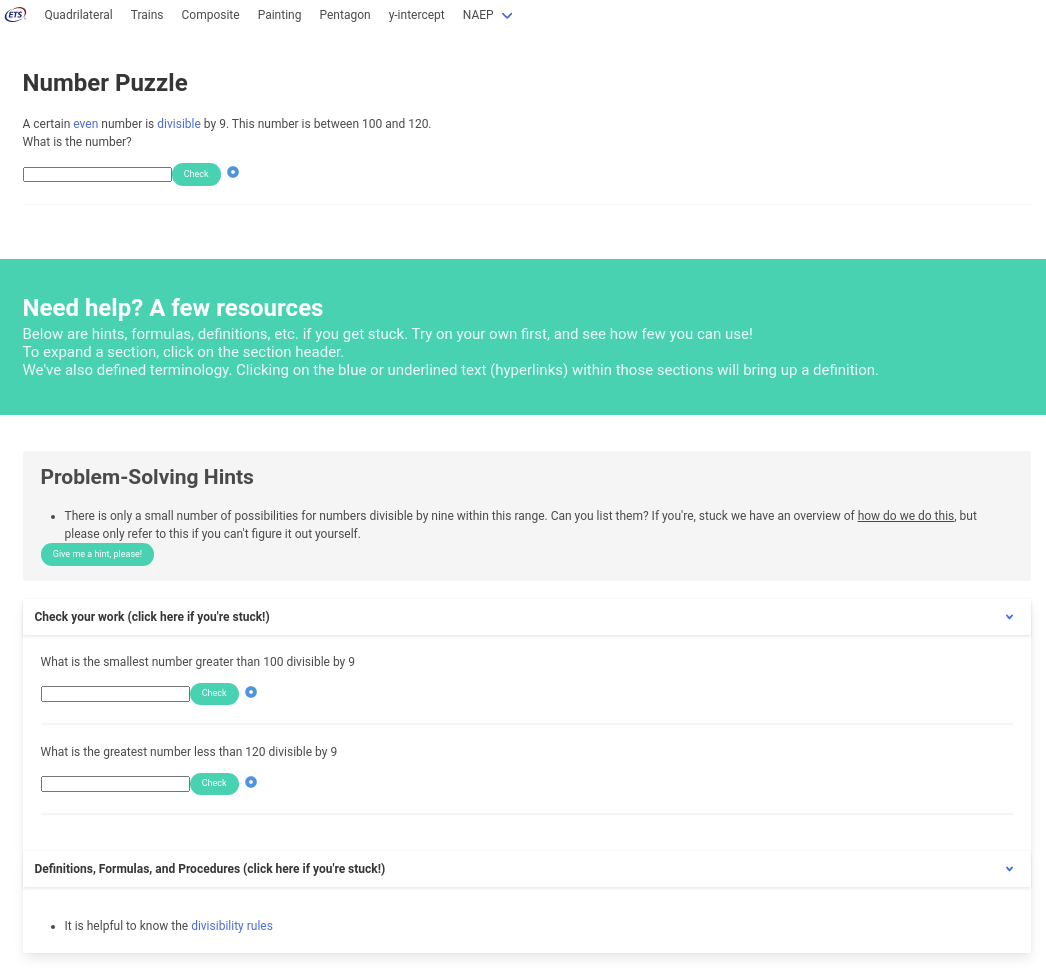

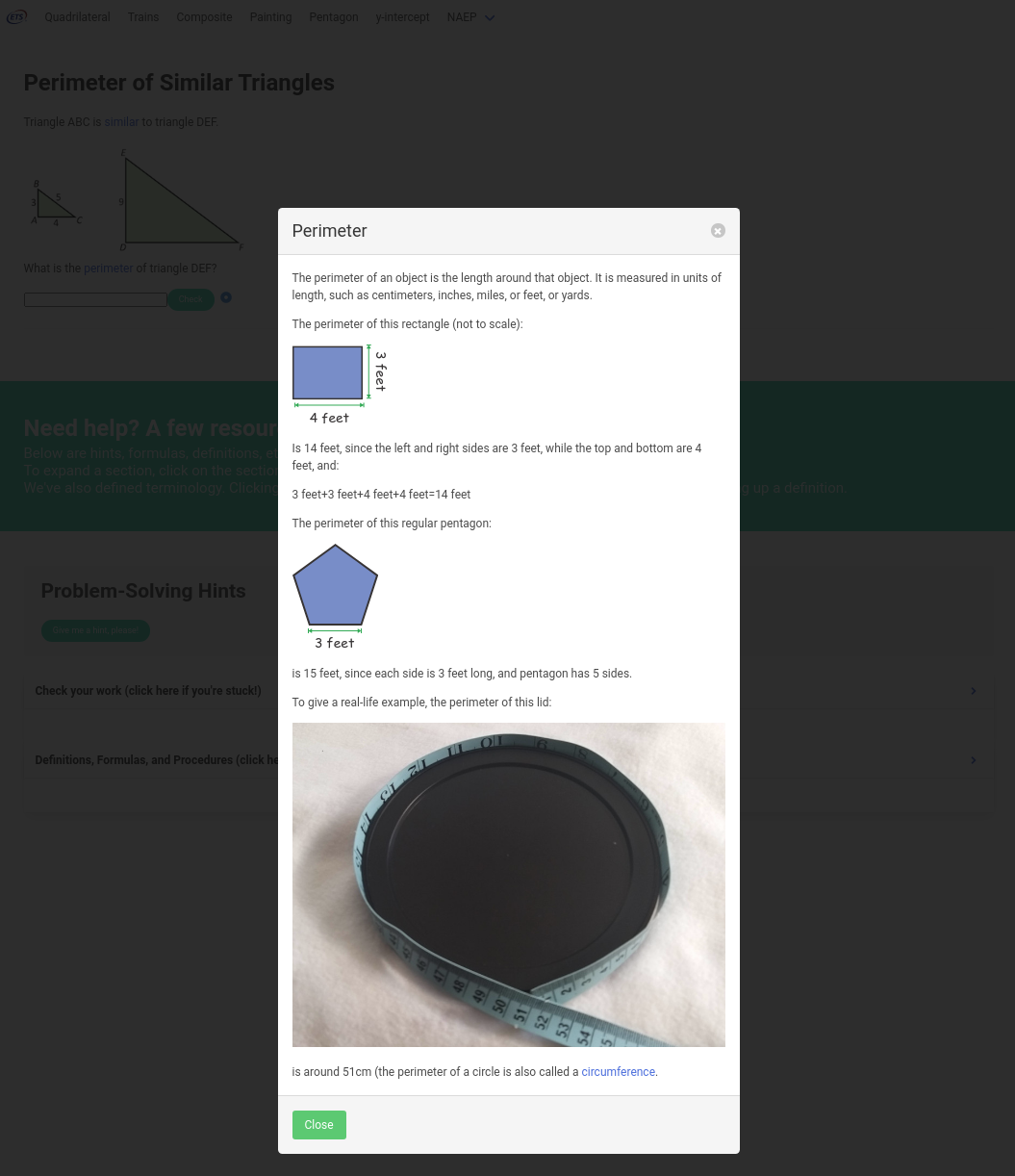

This submission contains sample screenshots of a user interface similar to what students will be presented with in the study. We may make minor changes (e.g., to styling or layout, or based on data from the study). Note that for some items one or more sections may not be present (e.g., an item that does not need additional resources might not have a “resources” section).

Landing page (students see this page first when they begin the cognitive interview; for the medium-scale study, the direct link they are provided brings them to it):

Item view, with all sections and one hint exposed:

Modal dialog with scaffold:

Pre-NAEP item, with all sections open, but no hints exposed:

Video scaffold (ladybug visualization):


| Author | Qureshi, Farah |


| File Created | 2021-01-13 |
