1850-NEW AMP SS Part B OMB_Package_Attendance_Messaging_ptB_30day

Impact Evaluation of Parent Messaging Strategies on Student Attendance

OMB: 1850-0940

June 2017

OMB Clearance Request: Data Collection Instruments, Part B

Data Collection

www.air.org Copyright © 2017 American Institutes for Research. All rights reserved.

Contents

Part B. Collections of Information Employing Statistical Methods

1. Respondent Universe and Sampling Methods

2. Procedures for Data Collection

3. Procedures to Maximize Response Rates

4. Testing Data Collection Processes and Instruments

5. Individuals Consulted on Statistical and Methodological Aspects of Data Collection

Exhibits

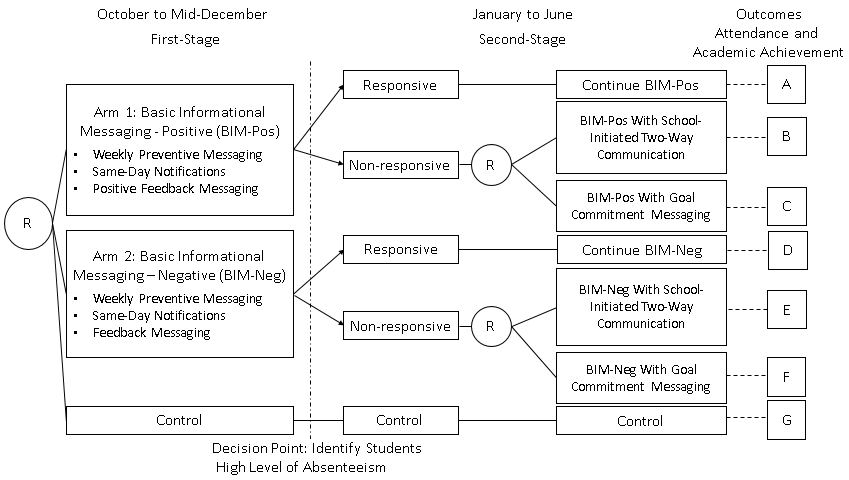

Exhibit 1. SMART Design to Evaluate the Adaptive Text Messaging Intervention

Exhibit 4. Selection Criteria

Introduction

Attendance in school is critically important for students’ short- and long-term academic and lifelong success. A number of studies show a link between attendance and outcomes such as academic performance, high school graduation, drug and alcohol use, and crime.1 While some of the research on the consequences of poor attendance focuses on middle and high school students, chronic absence in early grades is also linked with negative outcomes, including lower reading and mathematics achievement and higher absenteeism in middle and high school.2 Analyses in multiple states and school districts indicate that students who are chronically absent in the early grades are significantly less likely than their peers to read on grade level by grade 3, which, in turn, puts them at greater risk of dropping out of high school.3

According to data recently released by the U.S. Department of Education’s Office for Civil Rights, 11 percent of elementary grade students, or 3.5 million children, were chronically absent (defined as missing 15 days or more) during the 2013-14 school year. Preventing absenteeism early on may be more effective and less costly than intervening with older students, potentially helping to avoid the consequences of chronic absenteeism over multiple years.

This study will develop and rigorously test an innovative low-cost parent-focused text messaging intervention, meant to reduce elementary school absenteeism. The study will use a sequential multiarmed design in which, in the fall semester, families will be randomly assigned to one of two “first-stage” intervention conditions or to a business-as-usual (BAU) control group. In the spring semester, families in the control group will continue with business-as-usual. Families assigned to one of the first-stage interventions will continue to receive messages consistent with their first-stage assignments if their children are not chronically absent in the fall. However, families with children who are chronically absent in the fall, despite first-stage messaging, will be randomly assigned to one of two amplified “second-stage” intervention conditions. The study will examine the relative impact of the first- and second-stage messaging strategies separately and in combination. The study will also document the implementation of the text messaging intervention and its costs.

Study Description

Evaluation Design

The evaluation uses a sequential multiple assignment randomized trial (SMART) design4 (see Exhibit 1). In late September 2017, we will randomly assign families within schools to one of the two first-stage messaging conditions, Basic Informational Messaging with positive framing (BIM-pos) or Basic Informational Messaging with negative framing (BIM-neg), or to the business-as-usual (BAU) control condition. Between October and mid-December, families will receive messages consistent with their first-stage intervention condition. Parents or guardians assigned to the intervention conditions whose children were not chronically absent between October and mid-December (i.e., did not miss 10 percent or more of the school days during this period) will be considered “responsive,” and will continue with their first-stage messaging from January 2018 through the end of the school year.5 Parents with children who were chronically absent, despite the first-stage messaging, will be considered “nonresponsive.” The nonresponsive families will be rerandomized to one of the two “amplified” second-stage messaging conditions, School Staff Outreach or Goal Commitment Messaging, in January. Parents in the School Staff Outreach or Goal Commitment Messaging conditions will receive messages consistent with their treatment group assignment from January through the end of the year.

Exhibit 1. SMART Design to Evaluate the Adaptive Text Messaging Intervention
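
The assignment flow described above can be illustrated with a minimal Python sketch. It is not the study team's randomization procedure: the function names are hypothetical, and equal allocation across the first-stage arms and across the two second-stage conditions is an assumption, because allocation ratios are not stated in this section.

```python
import random

FIRST_STAGE = ("BIM-pos", "BIM-neg", "BAU")
SECOND_STAGE = ("School Staff Outreach", "Goal Commitment Messaging")


def is_chronically_absent(days_absent, days_enrolled):
    """True if the child missed 10 percent or more of school days in the period."""
    # Integer comparison avoids floating point edge cases at exactly 10 percent.
    return days_enrolled > 0 and 10 * days_absent >= days_enrolled


def assign_first_stage(family_ids, rng):
    """Randomly assign the families of one school to the first-stage arms.

    Assumption: arms are balanced within the school (equal allocation).
    """
    shuffled = list(family_ids)
    rng.shuffle(shuffled)
    return {fam: FIRST_STAGE[k % len(FIRST_STAGE)] for k, fam in enumerate(shuffled)}


def spring_condition(first_stage, chronically_absent_in_fall, rng):
    """Return the condition a family follows from January onward."""
    if first_stage == "BAU":
        return "BAU"  # control families continue with business as usual
    if not chronically_absent_in_fall:
        return first_stage  # "responsive": continue first-stage messaging
    # "Nonresponsive": rerandomize to one of the two amplified conditions.
    return f"{first_stage} with {rng.choice(SECOND_STAGE)}"
```

For example, a family assigned to BIM-neg whose child missed 6 of 60 school days in the fall would be classified as nonresponsive and rerandomized in January.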

Research Questions

The study design is guided by four research questions (RQs) on the impact of the text messaging strategies, and by two research questions related to implementation. RQ1 focuses on comparing the two first-stage messaging conditions for informing parents about the importance of regular attendance (BIM-pos or BIM-neg) to each other, and then to the BAU control group. RQ2 examines the relative effect of the two second-stage messaging conditions: School Staff Outreach and Goal Commitment Messaging. RQ3 focuses on the effects of the four different combinations of first- and second-stage messaging strategies (i.e., the four embedded adaptive interventions). RQ4 examines whether particular combinations of the first- and second-stage messaging strategies are more effective for students with different characteristics. RQ5 focuses on implementation fidelity, and RQ6 focuses on the costs of implementation and the cost effectiveness of the adaptive interventions.

RQ1. What is the average impact on student attendance of informing parents through two messaging strategies (1) preventive messages and same-day attendance notifications using positive framing (BIM-pos) or (2) preventive messages and same-day notifications using negative framing (BIM-neg), as compared to BAU and to each other?

RQ2. For those parents who do not respond to the initial first-stage messaging (BIM-pos or BIM-neg), can we improve attendance with school staff-initiated parent outreach and interpersonal support or by providing goal commitment messaging with additional tips and resources?

RQ3. Do the four combinations of first- and second-stage messaging strategies (i.e., the four adaptive interventions) have effects on end-of-year attendance and achievement when compared to each other and to BAU?

RQ4. Do the first- and second-stage messaging strategies work better for some families than for others?

RQ5. How is the text messaging intervention implemented?

RQ6. What are the costs of implementing the intervention and what is its cost effectiveness?

Analytical Approach

The study’s random assignment design will help to ensure that observed differences in average outcomes between treatment and control groups are due to the treatment and not to other factors. More specifically, this study will use an “intent-to-treat” approach, which examines the impact of offering the treatment. This is a policy-relevant impact because, typically in practice, policymakers cannot force participants to receive a treatment but rather can only offer it and encourage uptake. In this study, families assigned to a treatment condition will, by default, receive the study’s text messages but they are allowed to opt out. The families who opt out will still be considered part of the “treatment group,” however, since they were offered the messages.
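
As a small illustration of the intent-to-treat logic, the Python sketch below compares chronic absence rates by assigned condition and deliberately ignores whether a family opted out. The record layout and the toy data in the usage note are hypothetical and are not study data.

```python
def chronic_absence_rate(records, arm):
    """Share of children assigned to `arm` who were chronically absent."""
    group = [r for r in records if r["assigned"] == arm]
    return sum(r["chronically_absent"] for r in group) / len(group)


def itt_difference(records, treatment_arm, control_arm="BAU"):
    """Intent-to-treat contrast: groups are defined by assignment only.

    Families that opted out of messages stay in their assigned group.
    """
    return chronic_absence_rate(records, treatment_arm) - chronic_absence_rate(
        records, control_arm
    )


# Hypothetical toy records; "opted_out" is present but intentionally unused.
toy_records = [
    {"assigned": "BIM-pos", "opted_out": True, "chronically_absent": True},
    {"assigned": "BIM-pos", "opted_out": False, "chronically_absent": False},
    {"assigned": "BIM-pos", "opted_out": False, "chronically_absent": False},
    {"assigned": "BIM-pos", "opted_out": False, "chronically_absent": False},
    {"assigned": "BAU", "opted_out": False, "chronically_absent": True},
    {"assigned": "BAU", "opted_out": False, "chronically_absent": True},
    {"assigned": "BAU", "opted_out": False, "chronically_absent": False},
    {"assigned": "BAU", "opted_out": False, "chronically_absent": False},
]
```

With these toy records, `itt_difference(toy_records, "BIM-pos")` is the BIM-pos rate (1 of 4) minus the BAU rate (2 of 4); the family that opted out still counts toward the BIM-pos group.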

Following this approach, we will estimate the effects of the text messaging intervention using two-level hierarchical models to account for the clustering of students within schools. We will use hierarchical linear models for continuous outcomes, such as student achievement, and hierarchical probit models for binary outcomes, such as chronic absenteeism (that is, whether or not students missed 10 percent or more of school days). For example, the model analyzing chronic absenteeism outcomes for RQ1, which examines the impacts of first-stage treatments, will be a mixed effects probit model with fixed effects for each first-stage intervention (treating BAU as the reference group) and school-level random effects. The basic model is

$$\Pr(Y_{ij} = 1) = \Phi\left(\beta_0 + \beta_1 T^{pos}_{ij} + \beta_2 T^{neg}_{ij} + u_j\right), \qquad u_j \sim N(0, \tau^2)$$

where $Y_{ij}$ is the binary attendance outcome for child $i$ in school $j$ through mid-December; $\Phi$ is the standard normal cumulative distribution function; $T^{pos}_{ij}$ and $T^{neg}_{ij}$ are the school mean centered indicators that child $i$ in school $j$ was assigned to BIM-pos or BIM-neg, respectively; and $u_j$ is a random effect for the $j$th school, taken to be normally distributed with mean zero and variance $\tau^2$.

The coefficients $\beta_1$ and $\beta_2$ represent the effects of the interventions. The effects of BIM-pos and BIM-neg are assessed by whether $\beta_1$ or $\beta_2$ is different from zero, and the comparison of BIM-pos to BIM-neg is assessed by whether $\beta_1 - \beta_2$ is different from zero. The combined effect of BIM-pos and BIM-neg is assessed by an analysis in which a separate variable is created that indicates whether BIM-pos or BIM-neg was offered. If the coefficient for this indicator variable is different from 0, we can conclude that text messaging overall made a difference regarding chronic absenteeism relative to BAU.
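
To make the structure of the mixed effects probit model for RQ1 concrete, the following Python sketch simulates data from a model of this form: a probit link, school mean centered assignment indicators, and a normally distributed school random effect. It only generates data and tabulates chronic absence rates by arm; it does not fit the model. All parameter values (intercept, effects, random effect standard deviation, school sizes) are hypothetical and chosen purely for illustration, and equal allocation across arms is an assumption.

```python
import math
import random

ARMS = ("BIM-pos", "BIM-neg", "BAU")


def normal_cdf(x):
    """Standard normal cumulative distribution function (the probit link)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def simulate_rates(n_schools=60, n_per_school=99, intercept=-0.84,
                   effect_pos=-0.5, effect_neg=-0.4, school_sd=0.3, seed=1):
    """Simulate chronic absence under a two-level probit model.

    Returns the simulated chronic absence rate for each first-stage arm.
    All default parameter values are hypothetical.
    """
    rng = random.Random(seed)
    counts = {arm: [0, 0] for arm in ARMS}  # [chronically absent, total]
    for _ in range(n_schools):
        school_effect = rng.gauss(0.0, school_sd)  # random effect for school j
        arms = [ARMS[k % len(ARMS)] for k in range(n_per_school)]
        rng.shuffle(arms)  # random assignment within the school
        mean_pos = arms.count("BIM-pos") / n_per_school
        mean_neg = arms.count("BIM-neg") / n_per_school
        for arm in arms:
            # School mean centered assignment indicators.
            centered_pos = float(arm == "BIM-pos") - mean_pos
            centered_neg = float(arm == "BIM-neg") - mean_neg
            p_absent = normal_cdf(intercept + effect_pos * centered_pos
                                  + effect_neg * centered_neg + school_effect)
            counts[arm][0] += int(rng.random() < p_absent)
            counts[arm][1] += 1
    return {arm: absent / total for arm, (absent, total) in counts.items()}


rates = simulate_rates()
```

Because the hypothetical effects are negative, the simulated chronic absence rate is lower in the messaging arms than in the BAU arm; an analysis would estimate those effects from data rather than assume them.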

We will use a similar two-level hierarchical modeling approach for analyzing the other research questions. In the case of RQ2 and RQ3, we will apply additional procedures such as inverse probability weighting6 and simulated data to account for the unique estimation issues that arise from SMART designs (for example, some of the comparisons to be made in these RQs involve overlapping groups of study participants).
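
One common form of inverse probability weighting for SMART data can be sketched as follows. Each family is weighted by the inverse of the probability of following its observed sequence of assignments, so rerandomized (nonresponsive) families receive larger weights than responsive families, who are consistent with both embedded adaptive interventions that share their first-stage condition. This is an illustrative Python sketch, not the study's estimator: the function names, the record layout, and the randomization probabilities passed in are assumptions.

```python
def smart_weight(p_first_stage, responsive, p_second_stage=0.5):
    """Inverse probability of a family's observed assignment sequence.

    p_first_stage: probability of the family's first-stage assignment (assumed).
    p_second_stage: probability of its second-stage assignment, used only for
    nonresponsive families, who are the only ones rerandomized (assumed 0.5).
    """
    if responsive:
        return 1.0 / p_first_stage
    return 1.0 / (p_first_stage * p_second_stage)


def weighted_mean_outcome(records, first_stage, second_stage,
                          p_first_stage, p_second_stage=0.5):
    """Weighted mean outcome for one embedded adaptive intervention.

    The intervention is: start with `first_stage`; if nonresponsive, switch
    to `second_stage`. Responsive families in `first_stage` are always
    consistent with it; nonresponsive families only if they were
    rerandomized to `second_stage`.
    """
    numerator = denominator = 0.0
    for r in records:
        if r["first_stage"] != first_stage:
            continue
        if not r["responsive"] and r["second_stage"] != second_stage:
            continue
        w = smart_weight(p_first_stage, r["responsive"], p_second_stage)
        numerator += w * r["days_absent"]
        denominator += w
    return numerator / denominator


# Hypothetical toy records for illustration only.
toy = [
    {"first_stage": "BIM-pos", "responsive": True, "second_stage": None, "days_absent": 2},
    {"first_stage": "BIM-pos", "responsive": True, "second_stage": None, "days_absent": 4},
    {"first_stage": "BIM-pos", "responsive": False,
     "second_stage": "School Staff Outreach", "days_absent": 10},
    {"first_stage": "BIM-pos", "responsive": False,
     "second_stage": "Goal Commitment Messaging", "days_absent": 20},
]
```

With a first-stage probability of 0.5 (chosen here only to keep the arithmetic simple), responsive families get weight 2 and nonresponsive families weight 4, so the weighted mean for "BIM-pos, then School Staff Outreach if nonresponsive" is (2·2 + 2·4 + 4·10) / (2 + 2 + 4) = 6.5 days.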

In the remainder of Part B, we address the respondent universe and sampling, procedures for data collection, procedures to maximize response rates, pilot-testing of the instruments, and the names of the team’s statistical and methodological consultants and data collectors.

Part B. Collections of Information Employing Statistical Methods

1. Respondent Universe and Sampling Methods

The Impact Evaluation of Parent Messaging Strategies on Student Attendance will examine the impact of the text messaging intervention on student absenteeism and achievement in elementary schools. The study will include a purposive sample of approximately 60 elementary schools across 4 school districts. We anticipate that approximately 26,000 families and 30,000 students in the 60 schools will participate.

Sample Size and Power Calculations. To determine the appropriate sample size for the study, we conducted power analyses, drawing on recently developed methods for studies using SMART designs. Here we present the minimum detectable effect sizes (MDESs) for RQ1 on the effects of the first-stage interventions on student attendance and RQ3 on the effects of the four embedded adaptive interventions on student attendance as well as end-of-year achievement. Exhibits 2 and 3 present the results of the power calculations for samples of 50, 60, and 70 schools. While the analyses will be conducted with the full sample as well as a sub-set of students determined to be “at-risk” for chronic absenteeism at baseline, the power calculations shown are based on the policy-relevant, “at-risk” student sub-sample only. Approximately 20 percent of students per school are assumed to be in the “at-risk” group.7

Exhibit 2. Minimum Detectable Change in Chronic Absenteeism and Number of Days Absent, for Comparisons Among First-Stage Messaging Strategies and Business-as-Usual

| Outcome of Interest | 50 Schools | 60 Schools | 70 Schools |
| --- | --- | --- | --- |
| Chronic Absenteeism (Percentage Point Reduction) | | | |
| Overall effect (BIM-pos or BIM-neg vs. BAU) | 4.2 | 3.9 | 3.5 |
| Effect of first-stage interventions (BIM-pos vs. BAU; BIM-neg vs. BAU; BIM-pos vs. BIM-neg) | 5.5 | 5.0 | 4.6 |
| Absolute Number of Days Absent | | | |
| Overall effect (BIM-pos or BIM-neg vs. BAU) | 0.94 | 0.79 | 0.73 |
| Effect of first-stage interventions (BIM-pos vs. BAU; BIM-neg vs. BAU; BIM-pos vs. BIM-neg) | 1.25 | 1.05 | 0.98 |

Exhibit 3. Minimum Detectable Change in Chronic Absenteeism, Number of Days Absent, and Student Achievement for Comparisons Among the Four Embedded Adaptive Interventions and Business-as-Usual

| Outcome of Interest | 50 Schools | 60 Schools | 70 Schools |
| --- | --- | --- | --- |
| Chronic Absenteeism (Percentage Point Reduction) | 7.6 | 6.8 | 6.0 |
| Absolute Number of Days Absent | 1.11 | 1.02 | 0.94 |
| Student Achievement (Cohen’s d) | 0.27 | 0.24 | 0.23 |

The results in Exhibit 2 show that a sample of 60 schools can detect a 5.0 percentage point decrease in chronic absenteeism and a 1.05 day decrease in days absent when first-stage messaging strategies are compared to each other and to the BAU condition. The results in Exhibit 3 show that a sample of 60 schools is sufficient to detect a 6.8 percentage point decrease in chronic absenteeism, a 1.02 day decrease in number of days absent, and an MDES of 0.24 for student achievement outcomes when the embedded adaptive interventions are compared to each other and to the BAU condition. These minimum detectable changes are considered meaningful and policy-relevant. Accordingly, a sample of 60 schools was determined to be appropriate for the study.

Site Selection. The study does not employ random sampling of districts or schools. Instead, district and school selection will be based on characteristics required by the study design. In order to identify potential districts, the study team and the U.S. Department of Education (ED) developed a set of eligibility guidelines, summarized in Exhibit 4.

Exhibit 4. Selection Criteria

District Selection Guidelines: A district is considered for participation if it meets the following criteria:

School Selection Guidelines: Within an eligible district, a school is considered for participation if it meets the following criteria:

To determine initial eligibility, the study team first identified 64 districts across the country that had 10 or more elementary schools in which 20 percent or more of students were chronically absent during the 2013-14 school year. Although initial eligibility is based on absence rates, the goal is for the study to take place in schools considered low performing. We examined proficiency rates in the schools in the identified districts and confirmed that they are considered “low performing” (e.g., on average, less than 60 percent of students reached proficiency in math and reading in the latest year of data available, such as 2014-15 or 2015-16). We excluded three districts (Hawaii and Alaska school districts) from the eligible list due to cost concerns. From this initial list of districts, four school districts and a total of 60 schools will be selected for the study based on their current and planned practices (e.g., districts already providing attendance-related information to parents using text messaging will be excluded) and their interest and ability to participate.

Sampling Methods

The five data sources planned for this study are (1) a parent survey, (2) School Attendance Counselor Logs, (3) district staff interviews, (4) district accounting records collection, and (5) district student records collection. In the paragraphs below we describe the sampling plans and anticipated response rates for these five data sources.

Parent Survey: The study team will administer a parent survey in April and May 2018 to a sample of 2,000 families from the total estimated study population of 26,000 families. The survey will focus on families that have children at risk for chronic absenteeism at baseline, where “at risk” is defined as having been chronically absent in the prior school year or having been chronically absent during the first month of the 2017-18 school year. Based on data from the U.S. Department of Education’s Office for Civil Rights, we estimate that approximately 20 to 30 percent of families in the study schools will have one or more children who will be identified as “at risk.”

We will randomly sample the families for the parent survey using a stratified sequential design. Prior to randomly sampling within strata, we will sort families sequentially by school so that families selected from all study schools are represented in the sample. The sample of 2,000 families will be allocated so that each of the seven end-of-year study conditions will have an equal number of sampled parents (i.e., 286). The full sample allocation scheme is provided in Exhibit 5. We expect to achieve an 85 percent response rate within each stratum.
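
The sampling step can be sketched in Python as systematic selection within each stratum after sorting by school. This reading of "stratified sequential design" as systematic sampling with a random start is an assumption, as are the function names and the data layout of (school ID, family ID) pairs.

```python
import math
import random


def systematic_sample(families, n, rng):
    """Select n families from one stratum by systematic sampling.

    families: list of (school_id, family_id) tuples for a single stratum.
    Sorting by school before selecting at a fixed interval spreads the
    sample across schools roughly in proportion to their size.
    """
    ordered = sorted(families)
    if n >= len(ordered):
        return ordered  # take everyone when the stratum is small
    interval = len(ordered) / n  # may be fractional
    start = rng.random() * interval  # random start within the first interval
    picks = [min(len(ordered) - 1, math.floor(start + k * interval))
             for k in range(n)]
    return [ordered[i] for i in picks]


def sample_by_stratum(strata, n_per_stratum, rng):
    """Apply systematic sampling separately within each study condition."""
    return {name: systematic_sample(families, n_per_stratum, rng)
            for name, families in strata.items()}
```

For instance, a stratum of 300 families spread evenly over 6 schools and a target of 60 gives a selection interval of 5, so every school contributes families to the sample.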

Exhibit 5. Sample Allocation Across Strata

| Study Condition | Expected Number of At-Risk Families at Baseline | Sample Allocation | Probability of Being Surveyed | Expected Number of Survey Completes |
| --- | --- | --- | --- | --- |
| BIM-pos | 299 | 286 | 96% | 243 |
| BIM-pos with School Outreach | 1,343 | 286 | 21% | 243 |
| BIM-pos with Goal Commitment Messaging | 1,343 | 286 | 21% | 243 |
| BIM-neg | 448 | 286 | 64% | 243 |
| BIM-neg with School Outreach | 1,269 | 286 | 23% | 243 |
| BIM-neg with Goal Commitment Messaging | 1,269 | 286 | 23% | 243 |
| BAU | 995 | 286 | 29% | 243 |
| Total | 6,966 | ≈2,000 | | ≈1,700 |
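
The arithmetic behind Exhibit 5 can be checked with a short Python sketch. The at-risk counts, the total sample of 2,000, and the 85 percent response rate come from the text above; the variable and function names are illustrative.

```python
# Expected number of at-risk families at baseline, by end-of-year condition.
AT_RISK = {
    "BIM-pos": 299,
    "BIM-pos with School Outreach": 1343,
    "BIM-pos with Goal Commitment Messaging": 1343,
    "BIM-neg": 448,
    "BIM-neg with School Outreach": 1269,
    "BIM-neg with Goal Commitment Messaging": 1269,
    "BAU": 995,
}
TOTAL_SAMPLE = 2000
RESPONSE_RATE = 0.85

# Equal allocation across the seven strata.
PER_STRATUM = round(TOTAL_SAMPLE / len(AT_RISK))


def allocation_row(n_at_risk, n_sampled=PER_STRATUM, response_rate=RESPONSE_RATE):
    """Sampling probability (percent) and expected completes for one stratum."""
    return {
        "probability_pct": round(100 * n_sampled / n_at_risk),
        "expected_completes": round(n_sampled * response_rate),
    }


table = {name: allocation_row(n) for name, n in AT_RISK.items()}
```

Each stratum receives 286 sampled families (2,000 divided by 7, rounded), so the probability of being surveyed is highest in the smallest stratum, BIM-pos, and lowest in the two BIM-pos second-stage strata.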

School Attendance Counselor Logs: School attendance counselors (or similar school staff) will conduct the parent outreach for the second-stage School Staff Outreach strategy. The school attendance counselor within each school will be asked to complete three brief logs in the spring of 2018 to estimate the costs of the School Staff Outreach strategy. We expect that each school will have one school staff person tasked with this outreach, for a total of 60 school staff. Across all schools, we expect a 90 percent response rate.

District Staff Interviews: At the beginning and end of the 2017-18 school year, the study team will collect additional information on the costs of setting up and administering the text messaging intervention through short, structured interviews with the heads of districts’ Information Technology (IT) and Student Information System (SIS) departments. In these interviews, we will gather information on (1) the hardware and software necessary to set up and support the system, (2) the effort school and district IT/SIS staff expend, and the technical expertise they need, to integrate the system, and (3) the time it takes IT/SIS staff to monitor the attendance data and text messages throughout the year. We expect to interview one person within each of the four districts, and expect a 100 percent response rate for each round.

District Accounting Records Data Collection: The study team will request extant data from the school districts’ accounting records to estimate the costs to the participating school districts for setting up and implementing the text messaging system (e.g., labor and equipment costs). We will collect these data twice: first in October 2017 after the text messaging system has been set up, and second in summer 2018 after the implementation period has ended. We anticipate a 100 percent response rate for these requests made to each participating district.

District Student Records Data Collection: The study team will request extant data from the school districts’ records regarding student background characteristics and attendance information, teacher background characteristics and experiences, and student achievement information (e.g., standardized test scores for students in grades 3–6, formative assessment data if available). We will collect the extant data for the 2016-17 school year and for the first month of the 2017-18 school year in October 2017; we will request updated files in December 2017 and at the end of the 2017-18 school year. We anticipate a 100 percent response rate for these requests made to each participating district.

2. Procedures for Data Collection

This section describes our approach for each of these data collections, including information about sample, timing, and instruments.

Parent Survey: The study team will follow survey administration procedures they have found successful with difficult-to-reach populations in prior studies. First, the study team will send parents a presurvey notification postcard through regular mail one week before the survey administration. Next, the study team will send text and e-mail notifications on the first day of the survey administration. The text and e-mail notifications will contain a link to the web-based survey. The survey will be programmed in Qualtrics Research Suite, which provides a visually appealing interface that is designed for computer screens and optimized for smartphone use.

For parents who have not responded, the study team will send follow-up texts and e-mails in the second week of administration. To help with response rates, the study team will implement computer-assisted telephone interviewing follow-up with nonrespondents during the third week of administration. During the fourth week of administration, the study team will send a paper version of the questionnaire to all nonrespondents. Responses to the paper version of the questionnaire will be tracked in the database manually. Telephone attempts will continue throughout the remainder of the survey administration period, which will end in late May 2018.

The study team will monitor response rates by stratum to determine whether families in any stratum have lower than expected response rates. In the fourth week of data collection, the study team will decide whether additional families should be sampled to replace those in strata with lower than expected response rates.
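
A minimal Python sketch of this monitoring step is shown below. The function name, the counts in the usage note, and the use of the 85 percent target as the flagging threshold are all assumptions; the text does not specify what response rate is expected at each point in the administration period.

```python
def low_response_strata(sampled, completed, expected_rate=0.85):
    """Return the strata whose response rate is below the expected rate.

    sampled: mapping of stratum name to number of families sampled.
    completed: mapping of stratum name to number of completed surveys.
    expected_rate: hypothetical threshold for flagging a stratum.
    """
    return sorted(
        name for name, n_sampled in sampled.items()
        if completed.get(name, 0) / n_sampled < expected_rate
    )
```

For example, with 286 families sampled in each of two strata and 250 and 200 completes respectively, only the second stratum (about 70 percent) falls below an 85 percent threshold and would be a candidate for replacement sampling.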

School Attendance Counselor Logs: The School Attendance Counselor Logs are designed to collect information on the amount of time attendance counselors spent working directly with families. The information will be used to estimate the costs of the School Staff Outreach strategy of the intervention. The study team will administer the web-based logs to the designated school attendance counselor (or other school staff person) for the School Staff Outreach strategy in each of the 60 study schools.

The study team will inform the school attendance counselors of the importance of the web-based log both during the attendance counselor training sessions in December 2017 and by e-mail in April 2018, one week before the counselors receive the log for completion. The study team will monitor completion rates electronically and will begin follow-up reminders one week after the logs have been sent out. Individualized follow-up reminders will be sent starting two weeks after the initial invitation, followed by phone calls to attendance counselors who have not yet responded.

District Interviews: The district interviews will take place at the beginning and end of the 2017-18 school year and will collect information on the costs of setting up and implementing the text messaging intervention. The interviews will be scheduled with the heads of the Information Technology (IT) and Student Information System (SIS) departments two to four weeks in advance, and will be conducted via videoconference.

District Accounting System Records Data Collection: Plans for collection of extant cost records will be established before the start of the 2017-18 school year. We will include the details of the requests for the extant cost data collections within the memorandum of understanding (MOU) with each participating district. Prior to signing the MOU, study team members will work with the districts to answer any questions they may have regarding the extant cost data requests, and to discuss the timeline of the data requests, planned to occur once in fall 2017 and once in spring 2018.

District Student Records Data Collection: Plans for collection of extant student records will be established before the start of the 2017-18 school year. We will include the details of the requests for the student extant data collections within the MOU with each participating district. Prior to signing the MOU, study team members will work with the districts to answer any questions they may have regarding the extant data requests and to discuss the timeline of the data requests throughout the school year.

3. Procedures to Maximize Response Rates

The goal is to achieve a response rate of 85 percent for the parent survey, both overall and within each stratum; 90 percent for the School Attendance Counselor Logs; and 100 percent for the district interviews and district records data collections. The following section describes our approach to maximizing response rates for each data collection.

Procedures to Maximize Parent Survey Response Rate: The study team will use the following methods to maximize the parent survey response rates:

The survey will be brief―approximately 10 minutes long―and will cover only crucial constructs, so that parents are less likely to refuse the survey because of the length or burden.

All parents being surveyed will be offered a $15 incentive for completing the survey, conditional on Office of Management and Budget approval.

Parents will be able to take the survey through multiple modes, including (1) electronically, via a link to the survey sent by text and e-mail, (2) on paper, via hard copy sent to parents’ homes, and (3) by phone, administered by trained data collectors. Notifications and reminders using text messaging with links to the web survey will also be sent (and will be designed to clearly be distinguishable from the intervention text messages). The web survey will be optimized so that it will format appropriately when using a smartphone web browser.

The survey will be available in both English and Spanish in each delivery mode. We will also consider other translations if a participating district indicates that a significant percentage of the parent population in the participating schools is typically contacted with materials in other languages.

Procedures to Maximize Attendance Log Response Rates: To maximize response rates for the School Attendance Counselor Logs, the study team will use the following methods:

The logs will be short―approximately 40 minutes long including time preparing for the administration― and will ask simple questions on the amount of time the counselor has spent working with families of students.

The study team will emphasize the importance of completing the log during training for school attendance counselors on their role in the Augment component, and by e-mail one week prior to the administration of the log.

The study team will work closely with the district during the set up and implementation of the text messaging intervention, and we will rely on our primary district contact in the central office to encourage school attendance counselors to respond.

Procedures to Maximize Interview and Extant Data Response Rates: The study team will work closely with districts from the site selection phase onward, communicating the importance of these data collections, and including the data collection requests as part of the MOU. Study team members will be responsible for maintaining contact with the districts, ensuring that the district’s IT/SIS leaders are reminded of the interview and extant data requests in advance, and their importance to the success of the study.

4. Testing Data Collection Processes and Instruments

The parent survey was piloted during the 60-day comment period (fewer than 10 respondents per instrument) and revised to ensure that the questions are as clear and simple as possible for respondents to complete. Pilot-test subjects included parents and district and school staff who are in areas similar to the study’s school districts (i.e., areas with high levels of chronic absenteeism). A think-aloud, or cognitive lab, format was used for pilot-testing, wherein the respondents were asked to complete the draft instrument, explain their thinking as they constructed their responses, and identify the following:

Questions or response options that are difficult to understand.

Questions in which none of the response options is an accurate description of a respondent’s circumstance.

Questions that call for a single response but more than one of the options is an appropriate response.

Questions for which the information requested is unavailable.

Based on the cognitive interviews, the survey language was simplified for readability, and one question was removed entirely based on our observation that cognitive lab participants had difficulty answering it.

5. Individuals Consulted on Statistical and Methodological Aspects of Data Collection

This project will be conducted by American Institutes for Research (AIR), under contract to the U.S. Department of Education, as well as by North Carolina State University and 2M Research Services. The data collection strategy and instruments were developed by Jessica Heppen, Anja Kurki, and Seth Brown of AIR, and Stephane Baldi and Paul Ruggiere of 2M Research Services. Marie Davidian and Eric Laber of North Carolina State University will lead the statistical design study and estimate the impacts of the Attendance Messaging Project intervention. Project staff will also draw on the experience and expertise of a network of outside experts who will serve as AIR’s technical working group (TWG) members. The TWG members for this study are listed in Exhibit 6:

Exhibit 6. Technical Working Group Members

| Expert | Title and Affiliation |
| --- | --- |
| Hedy Nai-Lin Chang | Executive Director, Attendance Works |
| Peter Bergman | Assistant Professor of Economics and Education, Teachers College, Columbia University |
| Daniel Almirall | Research Assistant Professor, Survey Research Center, Institute for Social Research, University of Michigan |
| Fiona Hollands | Adjunct Associate Professor of Education, Columbia University |
| Lorien Abroms | Associate Professor of Prevention and Community Health, School of Public Health and Health Services, George Washington University |
| Lindsay Page | Assistant Professor of Research Methodology, School of Education, University of Pittsburgh |
| Susanna Loeb | Barnett Family Professor of Education, Stanford University |
| Mel Atkins | Executive Director of Community and Student Affairs, Grand Rapids Public Schools |

The organizations responsible for data collection activities are as follows:

| Organization | Primary Contact | Phone Number |
| --- | --- | --- |
| American Institutes for Research | Seth Brown | 202-403-5959 |
| 2M Research Services | Stephane Baldi | 202-796-1716 |

References for Supporting Statements, Part B

Almirall, D., Nahum-Shani, I., Sherwood, N.E., and Murphy, S.A. (2014). Introduction to SMART Designs for the Development of Adaptive Interventions: With Application to Weight Loss Research. Translational Behavioral Medicine, 4(3): 260–274. Retrieved from http://link.springer.com/article/10.1007/s13142-014-0265-0.

Davidian, M., Tsiatis, A.A., and Laber, E.B. (2016). Dynamic Treatment Regimes. In S.L. George, X. Wang, and H. Pang (Eds.), Cancer Clinical Trials: Current and Controversial Issues in Design and Analysis (pp. 409–446). London, UK: Chapman and Hall.

Lunceford, J., Davidian, M., and Tsiatis, A. (2002). Estimation of Survival Distributions of Treatment Policies in Two-Stage Randomization Designs in Clinical Trials. Biometrics, 58(1), 48–57. Retrieved from http://onlinelibrary.wiley.com/wol1/doi/10.1111/j.0006-341X.2002.00048.x/abstract.

Murphy, S.A. (2005). An Experimental Design for the Development of Adaptive Treatment Strategies. Statistics in Medicine, 24(10): 1455–1481. Retrieved from http://onlinelibrary.wiley.com/doi/10.1002/sim.2022/full.

Murphy, S. A., and Almirall, D. (2009). Dynamic Treatment Regimens. Encyclopedia of Medical Decision Making. Thousand Oaks, CA: Sage Publications.

Nahum-Shani, I., Qian, M., Almirall, D., Pelham, W.E., Gnagy, B., Fabiano, G.A., and Murphy, S.A. (2012). Experimental design and primary data analysis methods for comparing adaptive interventions. Psychological Methods, 17(4): 457–477.

ABOUT AMERICAN INSTITUTES FOR RESEARCH

Established in 1946, with headquarters in Washington, D.C., American Institutes for Research (AIR) is an independent, nonpartisan, not-for-profit organization that conducts behavioral and social science research and delivers technical assistance both domestically and internationally. As one of the largest behavioral and social science research organizations in the world, AIR is committed to empowering communities and institutions with innovative solutions to the most critical challenges in education, health, workforce, and international development.

1000 Thomas Jefferson Street NW, Washington, DC 20007-3835

202.403.5000

www.air.org

1 Chang and Romero 2008; Ehrlich et al. 2014; Ginsburg, Jordan, and Chang 2014; Gottfried in press; Allensworth and Easton 2005, 2007; Baker, Sigmon, and Nugent 2001; Henderson, Hill, and Norton 2014; Henry and Thornberry 2010; Neild and Balfanz 2006; Balfanz and Byrnes 2013.

2 Applied Survey Research 2011; Ehrlich et al. 2014; Ready 2010.

3 Hernandez 2011; Jung, Therriault, and Prencipe 2013.

4 For more information about SMART, see Almirall et al. 2014; Murphy and Almirall 2009; Nahum-Shani et al. 2012.

5 From here forward, we refer to parents and guardians as “parents,” for simplicity.

6 Davidian et al. 2016; Lunceford, Davidian, and Tsiatis 2002; Murphy 2005; Nahum-Shani et al. 2012.

7 We conducted these calculations using the lme4 package in the R programming language (https://cran.r-project.org), based on 1,000 Monte Carlo replications. They include a set of assumptions based on prior studies regarding the proportion of variance in the outcomes that is between schools, the percentage of outcome variance explained by covariates, the number of students and unique families per school, the percentage of students who will be “at-risk” for chronic absenteeism at baseline, and corrections for multiple comparisons.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | AIR Report |

| Subject | AIR Report |

| Author | Heppen, Jessica |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
