An Impact Evaluation of Training in Multi-Tiered Systems of Support for Behavior (MTSS-B)

OMB: 1850-0921

Impact Evaluation of Training in Multi-Tiered Systems of Support for Behavior (MTSS-B)

OMB Clearance Request:

Supporting Statement A

Data Collection

Prepared for:

Institute of Education Sciences

United States Department of Education

Contract No. ED-IES-14-C-003

Prepared By:

MDRC

16 East 34th Street, 19th Floor

New York, NY 10016

Fred Doolittle, Project Director

(212) 340-8638

TABLE OF CONTENTS

INTRODUCTION

THEORY OF ACTION AND RESEARCH QUESTIONS

CHARACTERISTICS OF THE TREATMENT TO BE TESTED

STUDY DESCRIPTION

SUPPORTING STATEMENT FOR PAPERWORK REDUCTION ACT SUBMISSION

A. JUSTIFICATION

1. Circumstances Making Collection of Information Necessary

2. Purposes and Use of the Data

3. Use of Technology to Reduce Burden

4. Efforts to Avoid Duplication

5. Methods to Minimize Burden on Small Entities

6. Consequences of Less Frequent Data Collection

7. Special Circumstances Relating to Federal Guidelines

8. Federal Register Comments and Persons Consulted Outside the Agency

9. Payment or Gifts to Respondents

10. Assurances of Confidentiality Provided to Respondents

11. Justification of Sensitive Questions

12. Estimates of Annualized Burden Hours and Cost

13. Estimate of Other Total Annual Cost Burden to Respondents and Record Keepers

14. Annualized Cost to the Federal Government

15. Explanation for Program Changes or Adjustments

16. Time, Schedule, Publication and Analysis Plan

17. Approval to Not Display OMB Expiration Date

18. Explanation of Exceptions to the Paperwork Reduction Act

REFERENCES

APPENDICES

A. Site Visit Interview Protocol: Administrators

B. Site Visit Interview Protocol: Behavior Team Leader

C. Site Visit Interview Protocol: Students

D. Site Visit Interview Protocol: Staff

E. Phone Interview: MTSS-B Coach

F. Phone Interview: Administrator and Behavior Team Leader

G. Staff and Teacher Survey

H. Student Survey

I. Parent Informed Consent Form

J. Teacher Ratings of Student Behavior

K. District Records Data Collection Request Letter

L. Excerpt from IDEA, P.L. 108-446

INTRODUCTION

Creating and maintaining safe and orderly school and classroom environments to support student learning is a top federal priority, as indicated by the guidance on school discipline jointly issued by the U.S. Department of Education and the U.S. Department of Justice. The implementation of Multi-Tiered Systems of Support for Behavior (MTSS-B) is seen as a promising approach to improve school and classroom climate, student behavior, and academic achievement, and potentially reduce the inappropriate identification of students for special education. MTSS-B is a multi-tiered, systematic framework for teaching and reinforcing positive behavior for all students that includes universal, school-wide supports (often called Tier I), targeted interventions to address the problems of students not responding to the universal components (often called Tier II), and intensive interventions (Tier III) for students not responding to Tier II services.

The widespread use of MTSS-B nationally contributes to the relevance of this evaluation. The U.S. Department of Education’s (ED’s) Office of Special Education Programs has supported technical assistance around MTSS-B since 1998. Recent data from the Office of Special Education Programs TA Center on Positive Behavior Interventions and Support indicate that as of 2014, MTSS-B is reportedly implemented in over 21,000 schools across the nation.1

As the support for and implementation of MTSS-B have increased, researchers have been developing an evidence base for it and other related programs. Numerous descriptive and correlational studies have associated aspects of MTSS-B implementation, or MTSS-B implementation overall, with student outcomes. More recently, researchers have begun to conduct causal studies of MTSS-B, although some of these initial studies have been on a relatively small scale (or conducted within a single state) or have been somewhat limited in duration and outcomes.2 Much of this research has focused on individual programs and not examined the impact of a large-scale implementation of both universal, school-wide programs and targeted programs within an MTSS-B framework.

Therefore, this evaluation focuses on a comprehensive MTSS-B program that includes core MTSS-B components: school-wide and classroom-level strategies (universal supports, or Tier I) and individual strategies (targeted intervention, or Tier II), with appropriate infrastructure (staffing and data system). This evaluation will not support intensive intervention for students not responding to Tier II interventions (i.e., there will be no training in Tier III interventions). The goal of the evaluation is to implement an MTSS-B program with fidelity and determine whether universal supports (Tier I) and a targeted intervention (Tier II) with appropriate infrastructure are effective when implemented in a large number of districts and elementary schools. IES has contracted with MDRC, AIR, Harvard University, and Decision Information Resources (DIR) to conduct the Impact Evaluation of MTSS-B.

Using a school-level random assignment design, this evaluation will examine the impact of training elementary school staff in the implementation of an MTSS-B framework, including staff and data infrastructure with universal, school-wide components as well as classroom-based activities (Tier I), in the first year of implementation, as compared to outcomes for schools undertaking typical behavior support practices. The comparison schools are hereafter called business-as-usual or “BAU schools.” In addition, the study will examine the impact of this Tier I training plus additional training in a targeted intervention (Tier II) in a second year of implementation. Schools that receive MTSS-B training and support are hereafter referred to as “Program schools.” The study will also investigate the differences in behavioral support services between Program and BAU schools. Finally, the evaluation team will assess whether the MTSS-B training and support activities are implemented as intended, as well as the fidelity of schools’ implementation of the intended MTSS-B Tier I and Tier II activities.

THEORY OF ACTION & RESEARCH QUESTIONS

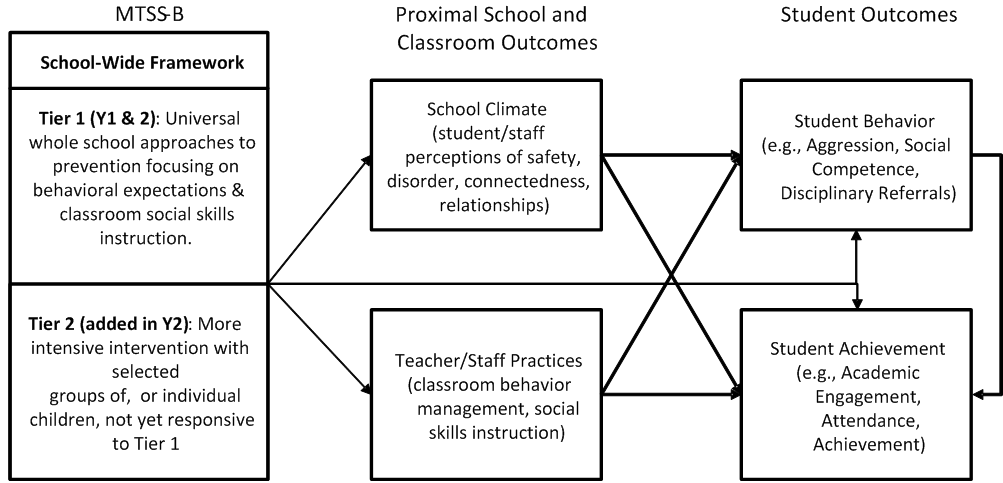

The implementation of MTSS-B is ultimately intended to improve student outcomes. The conduct of school-wide and classroom strategies (Tier I) and individual or small group strategies (Tier II), supported by appropriate infrastructure, is designed to improve school staff behavior practices as well as school and classroom climate. The improved practices and climate are, in turn, hypothesized to benefit student behavior and academic outcomes. The individual and small group strategies may affect the students who need additional support (Tier II) as well as all students if these strategies lessen the disruption of students needing additional support. Finally, improved student behavior could lead to better student academic outcomes (see Exhibit A-1).

Exhibit A-1: Conceptual Model of MTSS-B and Intended Outcomes

The evaluation is anchored on this conceptual model and will focus on the following research questions:

What MTSS-B training and support activities were conducted? What MTSS-B activities occurred in the schools receiving MTSS-B training? How do these MTSS-B activities differ from those in schools that do not receive the training?

What is the impact on school staff practices, school climate and student outcomes of providing training in the MTSS-B framework plus universal (Tier I) positive behavior supports and a targeted (Tier II) intervention?

What are the impacts for relevant subgroups (e.g., at-risk students)?

CHARACTERISTICS OF THE TREATMENT TO BE TESTED

In the spring of 2014, MDRC and partner organizations issued a Request for Proposals to providers of training in MTSS-B. In November 2014, we announced the winner of this competition, The Center for Social Behavior Support (CSBS), which is a collaboration between The Illinois-Midwest PBIS Network at the School Association for Special Education in DuPage, Illinois (SASED) and the PBIS Regional Training and Technical Assistance Center at Sheppard Pratt, in Maryland. The training and support activities proposed by CSBS are described below. Following that summary, the memo describes CSBS’s plan for how the MTSS-B Tier I and Tier II program will be implemented in schools.

CSBS Training and Support Activities

CSBS will mostly follow a “train the trainer” model with some direct support to all school staff and on-site support to the school staff and Coaches throughout implementation. Below we describe the key phases of their work with schools.

Readiness Period - Training and Support by CSBS to Develop Infrastructure: The first step is the development of school-level infrastructures that will support implementation of Tier I and Tier II program components, which include a staffing structure and data system. Tier I infrastructure will be put in place in the late spring of 2015 for all Program schools. Infrastructure for Tier II will be developed in the spring of 2016.

Staffing structure: CSBS trainers will work with each school to identify a representative School Leadership Team (SLT)3 and each district to identify MTSS-B Coaches. This will be accomplished during a two-day site visit to each district as well as webinars, email exchanges and phone calls. In addition to helping schools and districts identify the SLT and MTSS-B Coaches, CSBS will explain the MTSS-B program to the district coordinator, administrator, SLT and MTSS-B Coaches, as well as the roles and functions of each individual. During the spring of 2016, CSBS will work with schools to identify a Targeted Team (TT) to support Tier II implementation. Often, the TT is a subset of the SLT.

Behavioral monitoring data system: During the spring of 2015, CSBS trainers will support the SLT in putting in place the data infrastructure that relies on a web-based behavior monitoring data system called the School Wide Information System (SWIS). At least three SLT members and MTSS-B Coaches will be trained to use the SWIS data system to drive decision-making about issues such as which teachers may need additional supports with classroom management, and which locations in the school at what time may need more school staff to monitor student behavior. Appropriate staff will be identified and trained to enter data and generate reports. The data infrastructure will be augmented in the spring of 2016 to include the data system for Check In Check Out (CICO), the primary Tier II intervention.

Initial and Booster Summer Trainings: During the summer of 2015, CSBS will host training within each district for the SLT, MTSS-B Coaches and administrators in all Program schools. The goal of the initial CSBS Tier I training during the summer of 2015 is to introduce the key school-level implementers (MTSS-B Coaches, SLT and administrators) to the core features of MTSS-B Tier I,4 as well as the tools to track implementation fidelity and engage in data-based problem solving. During the summer training each school team drafts a school-level implementation plan, and each school team should leave the summer training prepared to further develop and implement the school-level implementation plan. During the summer of 2016, the key Tier I implementers engage in a booster training in which they review core components, reflect on the previous year and develop a year 2 school-level implementation plan. During this booster training, new school staff are also introduced to the core components of MTSS-B Tier I. Initial summer training for Tier II also takes place in the summer of 2016 and includes training for the MTSS-B Coaches, Targeted Team and administrator in the core features of Check In Check Out5 and how to use CICO-SWIS data to monitor student progress. The goal of this initial training is for schools to develop and implement their CICO implementation plan.

Ongoing Training and Support: During the 2015-2016 and 2016-2017 school years, CSBS will provide ongoing support to the MTSS-B Coaches, SLT and administrators and some direct trainings to all school staff as schools implement Tier I activities. CSBS trainers will visit all Program schools four times over the course of the school year. During these visits, they will work with the SLT, administrator and MTSS-B Coaches to introduce Tier I core components to staff and to train staff in their version of the Good Behavior Game, referred to as the Positive Behavior Game (PBG), which helps teachers to teach and acknowledge classroom rules aligned with the school-wide expectations. Additionally, they will gather and use data regarding schools’ implementation fidelity to help the SLT to create and modify schools’ implementation plans.6 During site visits, monthly webinars and email/phone exchanges, CSBS will support Coaches and the SLT to train teachers in the eight CSBS classroom components.

CSBS’s Eight Classroom Components

Arrange orderly physical environment;

Define, teach, and acknowledge rules aligned with school-wide expectations by modeling desired behaviors and using a gaming strategy;

Explain and teach routines;

Provide specific and contingent praise for appropriate behavior;

Use class-wide group contingencies;

Provide error correction through prompt, re-teach, and provision of choices;

Employ active supervision – move, scan, interact;

Provide multiple opportunities to respond.

CSBS’s provision of ongoing support for the Tier II intervention during the 2016-2017 school year will follow the same model as their provision of ongoing support for Tier I. However, CSBS will conduct an extra site visit during year 2 of the study so they have sufficient time to provide ongoing support for the implementation of Tier I and Tier II.

MTSS-B Program Implementation

The key components of the Tier I and Tier II MTSS-B program evaluated in this study are described in the paragraphs below.

Tier I Practices: The school-level implementation of Tier I practices will occur in all Program schools during both the 2015-2016 and 2016-2017 school years. These practices include school-wide activities as well as specific classroom components that are overseen by the SLT and MTSS-B Coaches.

Leadership provided by SLT and MTSS-B Coaches: The SLT and MTSS-B Coaches are the lead implementers of the program and are responsible for introducing the program model and implementation plan to other staff members with support from CSBS trainers. They meet regularly to monitor implementation of the school action plan and, based on data collected on student behavior and implementation fidelity, provide support to other school staff to improve implementation.

Ongoing support for teachers by the SLT and MTSS-B Coaches: The SLT and MTSS-B Coaches provide ongoing training and coaching to teachers. These supports include collection of data on teacher performance, as well as the provision of targeted feedback to teachers and opportunities to practice the Positive Behavior Game.

School-wide implementation of program: A key component of the Tier I practices of MTSS-B is that the program is implemented school-wide and not just by particular administrators, teachers, or staff members. All school staff will be responsible for implementing the school’s 3-5 behavioral expectations, responding appropriately to positive and problem student behavior, and using student behavior data to develop solutions to specific problems.

Classroom components: Within the classroom, teachers establish and maintain a classroom management system, which includes eight specific components (see the text box listing CSBS’s eight classroom components above).

Tier II Practices: Tier II will begin in all Program schools during the 2016-2017 school year. During this period, the SLT and MTSS-B Coaches will review behavioral monitoring data to identify students who are not responding to Tier I supports. They will also solicit referrals from school staff and families. These students will receive the Check In Check Out (CICO) intervention, which is the Tier II intervention in this study.

CICO is a small-group intervention that reinforces and extends the universal expectations by systematically providing a higher frequency of scheduled prompts, pre-correction, and acknowledgement to participating students. The program consists of students “checking in” daily with an adult at the start of the school day to retrieve a goal sheet and receive encouragement and help in setting specific behavioral goals (including skills to practice) for the day. Throughout the day, teachers (or other school staff) provide feedback to the student and document student behavior on the goal sheet. Students “check out” at the end of the day with an adult, who summarizes the feedback and recognizes accomplishments. The Targeted Team (TT), usually a subset of the SLT, monitors students’ progress on CICO and establishes systems of communication with families.

STUDY DESCRIPTION

Study Design

School-level random assignment is appropriate for this study because, by definition, MTSS-B is an intervention delivered at the school level. The analysis of MTSS-B impacts will rely on a random assignment design in which schools within each participating district (approximately nine districts) are randomly assigned to the following two groups:

Program Schools: Training, infrastructure and support to implement Tier I components in year 1 (SY2015-2016) and Tier I and Tier II components in year 2 (SY2016-2017).

Business As Usual (BAU) Schools: Continue with any existing practices related to student behavior, and will not receive any additional training and support in MTSS-B from the evaluation.

First-year impacts of Tier I will be calculated by comparing first-year outcomes in all 58 Program schools implementing Tier I to first-year outcomes in the 31 BAU schools. The second-year impacts of Tier I plus II will be calculated by comparing second-year outcomes in the Program schools to second-year outcomes in the BAU schools. A baseline teacher assessment of student behavior (in fall 2015) allows for the identification of a high-risk subgroup and the estimation of the net impact for high-risk students of Tier I (year 1) and Tier I plus II services (year 2) compared to BAU schools.
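The within-district random assignment described above can be sketched in a few lines of Python. This is only an illustration, not the study team's actual procedure: the district names, school IDs, seed, and the use of the overall 58-to-89 ratio as the Program share within every district are all assumptions made for the example.

```python
import random


def assign_within_districts(schools_by_district, program_share=58 / 89, seed=2015):
    """Randomly assign schools to 'Program' or 'BAU' separately within each district.

    program_share and seed are illustrative assumptions, not study parameters.
    """
    rng = random.Random(seed)
    assignment = {}
    for district in sorted(schools_by_district):
        # Shuffle a sorted copy so the result depends only on the seed.
        schools = sorted(schools_by_district[district])
        rng.shuffle(schools)
        n_program = round(len(schools) * program_share)
        for i, school in enumerate(schools):
            assignment[school] = "Program" if i < n_program else "BAU"
    return assignment


# Hypothetical districts and school IDs, for illustration only.
example = {
    "District 1": [f"D1-S{i}" for i in range(1, 11)],
    "District 2": [f"D2-S{i}" for i in range(1, 10)],
}
result = assign_within_districts(example)
```

Assigning separately within each district keeps the Program and BAU groups balanced across districts, so district-level differences do not drive the comparison.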

The study team has recruited 89 elementary schools across approximately nine districts that are willing and able to comply with the requirements of MTSS-B training providers and the research and data collection procedures involved in the evaluation. Decisions regarding how many schools and districts to recruit were based upon the desired minimum detectable effect size (MDES) for each outcome. We worked with IES to set the target MDES based on reasonable and policy-relevant expected impacts on various types of outcomes for this kind of intervention. We then calculated the required sample size for the target MDES, using parameter assumptions based on a review of existing literature.7
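To make the relationship between sample size and MDES concrete, the sketch below applies a widely used approximation for two-level, school-randomized designs. It is not necessarily the calculation the study team performed. The intraclass correlation, the number of students per school, the covariate R-squared values, and the multiplier of 2.8 (roughly 80 percent power at a two-tailed 5 percent significance level) are hypothetical inputs chosen for illustration; only the 89 schools and the 58 Program schools come from the text.

```python
import math


def mdes_cluster_rct(n_schools, share_program, students_per_school, icc,
                     r2_school=0.0, r2_student=0.0, multiplier=2.8):
    """Approximate MDES (in standard deviation units) for a school-randomized design.

    All arguments other than n_schools and share_program are illustrative assumptions.
    """
    p_term = share_program * (1 - share_program) * n_schools
    # Variance contribution from differences between schools.
    between = icc * (1 - r2_school) / p_term
    # Variance contribution from differences among students within schools.
    within = (1 - icc) * (1 - r2_student) / (p_term * students_per_school)
    return multiplier * math.sqrt(between + within)


# 89 schools, 58 of them Program schools; the remaining inputs are hypothetical.
baseline = mdes_cluster_rct(n_schools=89, share_program=58 / 89,
                            students_per_school=60, icc=0.10)
```

In this approximation the between-school term usually dominates, which is why adding schools lowers the MDES far more than adding students within schools.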

The impact study will examine several outcomes of MTSS-B – teacher practice, school climate, student behavior and academic achievement – following the theory of action presented above. The study will also consider the difference in behavior support practices in Program and BAU schools (service contrast). Finally, the evaluation will describe CSBS’s training and support activities as well as the implementation of MTSS-B in Program schools.

Data Collection Strategy & Analytic Approach

The data collection activities planned for this study are essential for the measurement of the key outcomes of interest: teacher practice, school climate, student behavior and academic achievement. Each data source contributes in a unique way to the measurement of these outcomes. For example, the student survey is the only measure of students’ perceptions of classroom climate and the staff survey is the only measure of staff’s perceptions of school climate.

Exhibit A-2 provides an overview of data collection activities. Data sources that present a burden to students and/or staff are indicated with an asterisk. A longer description of and justification for the data sources that present a burden is provided in the paragraphs below the table.

Exhibit A-2. Overview of MTSS-B Data Sources

| Data Source | # of Respondents per School | # of Schools | Fall 2015 | Spring 2016 | Summer 2016 | Fall 2016 | Spring 2017 |
|---|---|---|---|---|---|---|---|
| Site visits [SET/I-SSET]* | | | | | | | |
| School tour to observe school rules | 0 | 89 | X | | | | X |
| Document review | 0 | 89 | X | | | | X |
| Administrator interview | 1 | 89 | X | | | | X |
| Team leader interview | 1 | 89 | X | | | | X |
| Student interview (stratified random sample) | 15 | 89 | X | | | | X |
| Staff interview (stratified random sample) | 15 | 89 | X | | | | X |
| Structured interviews with key implementers (phone interviews)* | | | | | | | |
| MTSS-B Coach phone interview | .20 ˅ | 58 | | X | | | X |
| Program school principal phone interview | 1 | 58 | | X | | | X |
| Team leader phone interview | 1 | 58 | | X | | | X |
| Review of web-based program forms (Coach logs, meeting minute forms and TIPS school forms) | 0 | 58 | X | X | X | X | X |
| Review of sample of CSBS records (CSBS trainer logs, attendance records, webinars, fidelity data) | 0 | 58 | X | X | X | X | X |
| Review of MTSS-B behavior monitoring and fidelity data (SWIS data, SWIS-CICO data & PBIS Apps data) | 0 | 58 | X | X | X | X | X |
| School staff survey (all school staff)*: 30 minutes for non-teaching staff; 35 minutes for teachers | 60 | 89 | | X | | | X |
| Student survey (all students in grades 4-5)*: 20 minutes to administer survey [includes time for set-up and reading survey aloud] | 200 | 89 | | | | | X |
| Classroom observations with the ASSIST and possibly CLASS [augmented in Program schools to include assessment of implementation fidelity] | 0 | 89 | | | | | X |
| Teacher ratings of student behavior (all teachers in grades 1-5; all students in class at baseline and sample of students in spring 2016 and spring 2017)*: 5 minutes per student per wave | 25 | 89 | X | X | | | X |
| District records data collection (9 districts) | 0 | NA | X | X | | | X |

Note: Activities that present a time burden to students and staff are indicated with an asterisk (*). Fielding of the staff survey in spring 2016 and collection of district records in spring 2016 are not definite. ˅ The MTSS-B Coach role is designated as 0.2 FTE for each Program school. On average, however, districts will need 2 different MTSS-B coaches, though one of them may be only part-time. This increases the number of respondents for data collection purposes, but does not change the actual staffing.

Description of Data Sources that Present a Burden to Staff and Students

Site Visits: We will conduct site visits in Program and BAU schools twice over the study period (fall 2015 and spring 2017). The site visit protocols involve document review, observation and interview. The times required for the interviews conducted as part of the site visit are indicated in Exhibits A-2 and A-3. The data collected from these visits will serve the following purposes: (1) assess the difference in behavior support practices in Program and BAU schools (service contrast) and (2) assess implementation fidelity to the core features of the MTSS-B Tier I and Tier II programs in Program schools. To develop the protocols for these site visits, the study team is using the interview protocols that are part of the School-wide Evaluation Tool (SET), which has been found to be a valid and reliable measure of MTSS-B Tier I implementation fidelity.8 It has also been used in prior randomized controlled trials of MTSS-B as a measure of service contrast between Program and BAU schools and found to accurately discriminate between schools implementing the core features of MTSS-B and those not.9 The team is combining the SET protocol with the I-SSET, which assesses the fidelity of Tier II and Tier III implementation.10 These two protocols have been combined in prior studies to provide a complete picture of the three tiers of MTSS-B implementation.11 The interview protocols for the site visits can be found in Appendices A-D.

Structured Phone Interviews with Key Implementers: We will conduct phone interviews with key program implementers (MTSS-B Coach, Administrator and Behavior Team Leader) in each of the 58 Program schools twice during the study period (spring 2016 and spring 2017) to assess these individuals’ perceptions of their schools’ implementation fidelity and the quality/utility of CSBS training and support. Understanding staff’s perceptions of the quality and utility of CSBS training and support in the Program schools is critical to fully understanding implementation fidelity and to identifying challenges associated with the implementation of MTSS-B in a large number of elementary schools and districts. The interview protocols for the structured interviews with key program implementers can be found in Appendices E and F.

Staff Survey: School staff (teachers, administrators and other staff) in all study schools will be asked to participate in a web-based survey about school climate, staff practices related to behavior, and training for behavior support practices and programs in the spring of 2016 and spring of 2017. The surveys also include a number of questions related to individual background characteristics and experiences. The survey for non-teaching staff will take approximately 30 minutes to complete and the survey for teaching staff will take approximately 35 minutes to complete.

Improved school climate is theorized to be the proximal outcome of the intervention, so it is critical to measure staff’s perceptions of school climate. We have adapted two well-validated measures: the Organizational Health Inventory (OHI) and the Maslach Burnout Inventory.12 Prior studies of MTSS-B have found impacts on the OHI.13

To assess staff practices related to behavior, we have adapted and abbreviated the Effective Behavior Support Survey (EBS).14 These items assess the extent to which school staff report implementing core features of MTSS-B. We have also adapted questions from the multi-site evaluation from IES’s Social and Character Development study to identify differences between BAU and Program schools in the extent to which staff report that they have received training for and/or are implementing school-wide and classroom-based programs similar to the MTSS-B approach being implemented in Program schools.15 We have also designed questions to assess staff’s reports of exposure to training and coaching related to behavior support practices. Staff’s answers to these questions will inform our understanding of service contrast; fully understanding service contrast is critical to the interpretation of the impact estimates.

The survey fielded in Program schools includes a limited number of items designed to assess staff’s perception of implementation fidelity and their perception of the quality and utility of the training and support they have received. Some of these questions have been adapted from prior studies of MTSS-B.16 The staff survey can be found in Appendix G.

Student Survey (Grades 4-5): Students in grades 4 and 5 in Program and BAU schools will be asked to complete a survey in the spring of 2017. The survey will take approximately 20 minutes to administer. This survey will provide critical information about students’ perceptions of their own behavior and classroom climate, which are both predicted by the theory of action to be positively impacted by MTSS-B. Most of the scales in the survey were borrowed from the student survey developed by Catherine Bradshaw and colleagues for the Maryland Safe and Supportive School Initiative. The high school version of this survey has been validated in prior studies.17 We have also included a limited number of questions to assess students’ awareness of core features of MTSS-B implementation, such as the establishment of school rules. These questions will be asked in the survey administered in BAU and Program schools so they can inform the assessment of implementation and service contrast. These questions were adapted from the student survey used for IES’s Violence Prevention study.18 The student survey can be found in Appendix H.

Teacher Ratings of Student Behavior: Teachers of students in grades 1-5 in Program and BAU schools will be asked to rate their students’ behavior in the fall of 2015.19 In the spring of 2016 and the spring of 2017, teachers will be asked to rate the behavior of a high-risk subgroup (identified from the fall 2015 fielding) and a random sample of other students. Teachers will only be asked to rate the behavior of students in their classes for whom consent has been obtained. The rating form is expected to take approximately five minutes per student to complete. If a teacher has twenty consenting students in her class, she may need to spend up to 100 minutes completing this rating form in the fall of 2015. In the spring of 2016 and 2017, we are only asking teachers to rate a sample of their students, so teachers will only need to spend up to 40 minutes on ratings each spring.
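The burden figures in the preceding paragraph follow from simple arithmetic, sketched here in Python. The five minutes per student, the twenty-student class, and the 40-minute spring ceiling come from the text; the implied spring sample of at most eight students per teacher is an inference from those numbers rather than a figure stated in this document, and the function names are hypothetical.

```python
MINUTES_PER_STUDENT = 5  # per-student rating time stated in the text


def rating_minutes(n_students, minutes_per_student=MINUTES_PER_STUDENT):
    """Total minutes a teacher spends rating n_students."""
    return n_students * minutes_per_student


def students_within(minutes_cap, minutes_per_student=MINUTES_PER_STUDENT):
    """Largest number of students that can be rated within minutes_cap."""
    return minutes_cap // minutes_per_student


# Fall 2015: a class of twenty consenting students.
fall_minutes = rating_minutes(20)

# Spring waves: the 40-minute ceiling implies a sample of at most this many
# students per teacher (an inference, not a figure given in the text).
spring_sample_size = students_within(40)
```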

The teacher ratings form will be used to assess the impact of the program on student behavior. The study team has adapted the Teacher Observation of Student Adaptation-Checklist (TOCA-C) for this instrument. The TOCA-C has been shown to be a valid and reliable measure of students’ behavior (concentration problems, disruptive behavior and prosocial behavior).20 It has been used in prior evaluations of MTSS-B where it has been shown to be sensitive to the intervention.21 The instrument measures key domains of student behavior closely tied to the theory of change of MTSS-B: prosocial behavior, concentration problems, and disruptive behavior. The study team is augmenting this measure with scales to assess additional policy-relevant concepts that are also closely tied to the MTSS-B theory of action: bullying, students’ emotional regulation, and internalizing behaviors, as well as receipt of behavioral support services and referrals related to behavior. These additional items have been used in Catherine Bradshaw and colleagues’ ongoing evaluations of the MTSS-B interventions and shown in unpublished technical reports to have adequate reliability.22 The teacher ratings instrument is the most reliable and sensitive way to collect useful student behavior information. The teacher ratings form can be found in Appendix J.

District Records Data Collection: The study team will request extant data from school district records regarding student background characteristics, teacher background characteristics and experiences, student behavior incidents, student academic achievement (grades 3-5) and special education. Data will be collected at the end of each school year: baseline (SY 2014-2015), year 1 (SY 2015-2016) and year 2 (SY 2016-2017). Requests will be made of district research offices. Appendix K provides a sample of the letter that will be sent to districts requesting these data.

SUPPORTING STATEMENT FOR PAPERWORK REDUCTION ACT SUBMISSION

Part A: Justification

1. Circumstances Making Collection of Information Necessary

The U.S. Department of Education (ED) has supported the implementation of Multi-Tiered Systems of Support for Behavior (MTSS-B) since the 1990s and partnered with the Department of Justice to encourage creating and maintaining safe and orderly school and classroom environments. More than 21,000 schools across the nation report implementing MTSS-B.23 Numerous descriptive studies as well as some initial rigorous studies have been conducted and demonstrate the promise of MTSS-B training. Therefore, a large scale evaluation to determine the effectiveness of MTSS-B is warranted. This study will provide the first national, random assignment study of the impacts of MTSS-B, providing impact estimates for universal supports (Tier I) as well as universal and targeted support together (Tiers I and II combined).

This impact evaluation of MTSS-B is authorized in Section 664 of the Individuals with Disabilities Education Act [IDEA], as amended, which assigns to IES the responsibility to conduct studies and evaluations of the implementation and impact of programs supported under IDEA, which includes MTSS-B.24 This statute is provided in Appendix L.

Purpose and Use of the Data: How the data will be collected, by whom, and for what purpose.

The information gathered through this data collection will be analyzed by the IES evaluation contractor (MDRC) and its subcontractors to study the implementation and impacts of MTSS-B in elementary schools. This will involve new data collection where needed through staff and student surveys, teacher ratings of student behavior, brief interviews with students and staff, and structured interviews with principals and key program implementers (MTSS-B Coaches and Behavior Team Leaders). Exhibit A-2 shows a timeline of these activities.

The data collected for this study will be used by ED to report to Congress on the assessment of activities using federal funds under the Individuals with Disabilities Education Act National Assessment.25 Failure to collect these data may result in ED being unable to adequately report to Congress on the assessment of these activities. Additionally, if this evaluation were not completed, ED and Congress would not have an accurate understanding of the impact of MTSS-B, which is reportedly being implemented in over 21,000 schools across the country and supported by federal funds.

Use of Technology to Reduce Burden

All staff surveys and teacher ratings will be administered using web-based surveys so they are easily accessible to respondents. Administration of web-based surveys enables reduced burden through complex skip patterns that are invisible to respondents, as well as prefilled information based on responses to previous items when appropriate. Web-based surveys also lead to decreased costs associated with processing and increased data collection speed. Paper survey options will be offered to respondents as part of the follow-up effort with individuals who do not respond. The interviews with key program implementers in the spring of 2016 and spring of 2017 will be administered by phone to reduce burden on respondents and reduce travel costs to the evaluation.

Efforts to Avoid Duplication

As described in the introduction, the data collection effort planned for this project will produce data that are unique, and it specifically targets the research questions identified for this project. Valid and reliable measures of teacher practice, school climate and student behavior are not available from extant data for the participating districts and schools.

Methods to Minimize Burden on Small Entities

The data will be collected from district and school staff, and no small businesses or entities will be involved in the data collection.

Consequences of Less Frequent Data Collection

If the proposed data collection is not done, it will not be possible for ED to report rigorous impact findings from a large scale study of MTSS-B to Congress, other policymakers, and practitioners seeking effective ways to support student learning.

Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

None apply to this data collection.

Federal Register Comments and Persons Consulted Outside the Agency

The 60-day Federal Register notice was published on May 1, 2015. No public comments have been received to date. The following experts serve on an Expert Panel for the Selection of Training Providers and have been consulted on the design of the study.

Expert | Organization |
Leonard Bickman, Ph.D. | Vanderbilt University, Peabody College of Education |
Catherine Bradshaw, Ph.D.26 | University of Virginia, Curry School of Education |
Lori Newcomer, Ph.D. | University of Missouri, Department of Educational, School, and Counseling Psychology |

The following individuals participated in the Technical Working Group for the Evaluation that convened on March 26, 2015.

Expert | Organization |
David Cordray, Ph.D. | Vanderbilt University, Department of Psychology and Human Development |
Brian Flay | Oregon State University, School of Social and Behavioral Health Sciences |
Mary Louise Hemmeter | Vanderbilt University, Department of Special Education |
Sara E. Rimm-Kaufman, Ph.D. | University of Virginia, Curry School of Education and Center for the Advanced Study of Teaching and Learning |
Lori Newcomer, Ph.D. | University of Missouri, Missouri Prevention Center / Department of Educational, School, and Counseling Psychology |

Payment or Gifts to Respondents

We are aware that teachers are the targets of numerous requests to complete data collection instruments on a wide variety of topics from state and district offices, independent researchers, and ED, and several decades of survey research support the benefits of offering incentives. Further, high response rates are needed to make the study measures reliable and offering honoraria for staff and teachers will help ensure high response rates. In fact, the importance of providing data collection incentives in federal studies has been described by other researchers, given the recognized burden and need for high response rates.27

The use of incentives has been shown to be effective in lowering non-response rates and the level of effort required to obtain completions.28 Studies have shown that when used appropriately, incentives are a cost-effective means of significantly increasing response rates.29 Although much of the research on the value of using incentives has focused on low-income populations, the value of using incentives in educational settings has also been demonstrated. In the Reading First Impact Study commissioned by ED, monetary incentives proved to have significant effects on response rates among teachers. A sub-study requested by OMB on the effect of incentives on survey response rates for teachers showed significant increases when an incentive of $15 or $30 was offered to teachers as opposed to no incentive.30 In 2005, the National Center for Education Evaluation (NCEE) submitted a memorandum to OMB outlining guidelines for incentives for NCEE Evaluation Studies and tying recommended incentive levels to the level of burden (represented by the length of the survey). The amount of incentives planned for the survey respondents for this study is consistent with that proposed in the NCEE memo Guidelines for Incentives for NCEE Evaluation Studies.31

Payments to teachers and staff: Teachers and school staff will be asked to complete surveys and rating forms at multiple times over the study period (fall 2015-spring 2017). We expect that these activities will be completed outside of the normal workday covered in their labor agreements, given the level of demand already made upon their time. Teachers and other school staff will be asked to complete a 30-35 minute survey in the spring of 2016 and 2017. If district rules allow, we will offer a gift card valued at $25 for each completion. Teachers also will be asked to complete a 5 minute short behavior rating for each of their students (from a pool of consenting students) in the fall of 2015. In the spring of 2016 and spring of 2017, teachers will be asked to rate a sample of their consenting students. Each rating will take approximately 5 minutes per student and, if district rules allow, teachers will be provided a gift card. The value of the gift card for the teacher ratings will vary according to the number of ratings that the teacher completes. Teachers will be informed that they will only receive compensation if they complete a rating for all of their sampled students. The gift card value will not exceed $50. The incentive amounts are consistent with the 2005 NCEE memo recommendation for a “medium burden” data collection effort.

In addition, if district rules allow, we will provide a $25 incentive to classroom teachers or the school data collection liaison to encourage high rates of return for the parental consent forms needed for data collection activities. Our preferred method of distributing consent forms is to include the forms in a packet parents receive at the school’s open house during enrollment at the beginning of the school year. Alternatively, homeroom teachers may distribute consent forms to their students, or the school may wish to have the forms mailed directly to the students’ homes. We will work closely with each school to ensure that parents who do not return the consent form are sent reminder notices with replacement forms. This process will be repeated, as needed, to achieve the target active parental consent rate. We will provide an incentive ($25 gift card) for each classroom in which at least 90% of the student parental consent forms are returned, whether or not the parent allows the student to participate in the data collection activities. The incentive will be provided either to the classroom teacher or to the school data collection liaison, based on who is responsible for monitoring the consent form returns. This incentive will encourage the teacher, or the data collection liaison, to monitor consent returns carefully and to follow up with students for whom a form has not been returned, encouraging them to return a signed form. Firms have used similar approaches successfully in previous studies. For example, in the cross-site evaluation of the Safe Schools/Healthy Students Initiative, teachers and staff were told that each school would be provided an additional $25 for each classroom in which a minimum of 70% of active parental consent forms were completed by parents and returned to the school. In schools where this approach was used, return rates for parental consent forms averaged 78%. This incentive opportunity will be available for all classrooms in grades 1-5.
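The classroom-level consent incentive rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name and arguments are hypothetical, and the rule is read as "a $25 gift card when at least 90% of a classroom's consent forms come back, regardless of whether consent is granted."

```python
def classroom_consent_incentive(forms_distributed, forms_returned,
                                threshold_percent=90, amount_dollars=25):
    """Return the incentive (in dollars) earned by one classroom.

    Hypothetical helper: a form counts as returned whether or not the
    parent grants consent, as the text above describes.
    """
    if forms_distributed <= 0:
        return 0
    # Integer comparison avoids floating-point edge cases at exactly 90%.
    meets_threshold = forms_returned * 100 >= threshold_percent * forms_distributed
    return amount_dollars if meets_threshold else 0


# A classroom of 20 students needs at least 18 returned forms.
print(classroom_consent_incentive(20, 18))
print(classroom_consent_incentive(20, 17))
```

Whether the incentive goes to the classroom teacher or to the school data collection liaison is decided separately, based on who monitors the returns.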

Assurances of Confidentiality Provided to Respondents

All data collection activities will be conducted in full compliance with Department of Education regulations to maintain the confidentiality of data obtained on private persons and to protect the rights and welfare of human research subjects. These activities will also be conducted in compliance with other federal regulations, in particular the Privacy Act of 1974 (P.L. 93-579, 5 USC 552a) and the Family Educational Rights and Privacy Act of 1974 (the “Buckley Amendment,” 20 USC 1232g). Information collected for this study comes under the confidentiality and data protection requirements of the Institute of Education Sciences (Education Sciences Reform Act of 2002, Title I, Part E, Section 183).

An explicit verbal or written statement describing the project, the data collection, and confidentiality will be provided to study participants. These participants will include students in all study schools that participate in the student survey and interviews, as well as staff and administrators participating in surveys, interviews or teacher ratings.

All information from this study will be kept confidential as required by the Education Sciences Reform Act of 2002 (Title I, Part E, Section 183). Responses to this data collection will be used only for statistical purposes. Personally identifiable information about individual respondents will not be reported. We will not provide information that identifies an individual, school, or district to anyone outside the study team, except as required by law.

MDRC’s IRB has indicated that active informed consent from parents will be required for the student survey and the teacher ratings of student behavior. The parent consent form can be found in Appendix I. Some districts recruited for the study have rules requiring active informed consent for any data collection concerning students. In these districts we will add language to the parent consent forms regarding these other data sources. Consent forms for data collection that involves staff appear as the first page of the instrument (Appendices A, B, D-G and J).

Confidentiality assurances during data collection:

All MTSS-B data collection employees at MDRC, DIR and AIR and any data collection sub-contractors thereof sign confidentiality agreements that emphasize the importance of confidentiality and specify employees’ obligations to maintain it.

Personally identifiable information (PII) is maintained on separate forms and files, which are linked only by sample identification numbers.

Access to a crosswalk file linking sample identification numbers to personally identifiable information and contact information is limited to a small number of individuals who have a need to know this information.

Access to hard copy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded materials are shredded.

Access to electronic files is protected by secure usernames and passwords, which are only available to approved users. Access to identifying information for sample members is limited to those who have direct responsibility for providing and maintaining sample crosswalk and contact information. At the conclusion of the study, these data are destroyed.

The plan for maintaining confidentiality includes staff training regarding the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses. It also includes built-in safeguards concerning status monitoring and receipt control systems.

Confidentiality assurance during analysis: The data collected for this study will be used only for broadly descriptive and statistical purposes. In no instances will the study team provide information that identifies districts, schools, principals, teachers, or students to anyone outside the study team, except as required by law. More detail on MDRC’s procedures to ensure data security is provided below.

Data storage: MDRC and subcontractors will store all data in compliance with its federally approved data security plan. All quantitative data will be stored on a secure section of our network, accessible only to specific project staff identified by the data manager. Data that is used for analysis is stored with research ID numbers rather than actual student or staff identification information. Notes/digital recordings from interviews and all survey data will only be reviewed by the research team and stored in a secure area accessible only to the research team.
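The separation of identifying information from analysis data described above can be illustrated with a short sketch. This is not MDRC's actual procedure. The function name, field names, and the use of random hexadecimal research IDs are all assumptions made for the example.

```python
import secrets


def split_identifiers(records, pii_fields=("name", "student_id")):
    """Split raw records into de-identified analysis rows and a PII crosswalk.

    Hypothetical illustration: each record gets a random research ID; the
    analysis rows keep only non-identifying fields plus that ID, while the
    crosswalk (to be stored separately with restricted access) maps the
    research ID back to the identifying fields.
    """
    crosswalk = {}
    analysis_rows = []
    for record in records:
        research_id = secrets.token_hex(8)
        while research_id in crosswalk:  # regenerate on the rare collision
            research_id = secrets.token_hex(8)
        crosswalk[research_id] = {field: record[field] for field in pii_fields}
        row = {key: value for key, value in record.items() if key not in pii_fields}
        row["research_id"] = research_id
        analysis_rows.append(row)
    return analysis_rows, crosswalk


raw = [{"name": "Example Student", "student_id": "0001", "disruptive_score": 2.5}]
analysis_rows, crosswalk = split_identifiers(raw)
print(analysis_rows[0].keys())  # contains no name or student_id
```

In this sketch only the crosswalk can re-link a research ID to a person, which matches the need-to-know restriction on the crosswalk file described earlier.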

Once the study is completed, original data collected for the project will be destroyed, and only the restricted-access file without any actual identifiers for the district, school, or individual respondent will remain (as described below).

Method of data destruction: All data containing individually identifiable records will be destroyed by an appropriate fail-safe method, including physical destruction of the media itself or deletion of the contents on our servers. After the study is completed, the study team will create a restricted-access file of the data collected and submit that file to IES once the project ends. This file will have been stripped of all student, teacher/staff, school and district identifiers.

Justification of Sensitive Questions

Questions in some components of the MTSS-B student survey are potentially sensitive. Students in grades 4 and 5 are asked about personal topics including their perceptions of fairness, their perceived level of aggression, and the feelings that they have in school. The questions we have included were selected to assess constructs that we need to measure in order to understand the impacts of the intervention. The specific scales adapted for the surveys were chosen in part because they have been used in previous research and found to be valid and reliable measures of the constructs we intend to measure.32 Additionally, the survey is currently being fielded successfully in Bradshaw’s Maryland Safe and Supportive Schools initiative. Moreover, trained assessors will explain questions to students before they are posed. Finally, students and their parents will be informed by research staff prior to the start of the survey that their answers are confidential; that they may refuse to answer any question; that results will only be reported in the aggregate; and that their responses will not have any effect on any services or benefits they or their family members receive. The study team will be asking for active parental consent for this activity.

Questions on the teacher ratings of student behavior are also potentially sensitive because teachers are providing an assessment of each individual student’s concentration problems and disruptive behavior. This rating scale is essential to the study because it is so closely tied to the MTSS-B theory of action, and prior evaluations of MTSS-B have found effects on these rating scales.33 Many of the questions on this measure have been used in other studies, including the recent IES-funded evaluation of the Good Behavior Game. When required by districts and if required by the MDRC IRB, the study team will ask for active parental consent for this activity.

Estimates of Annualized Burden Hours and Costs

Exhibit A-3 summarizes reporting burden on respondents for each data source. The respondent pool will include school staff and students. For each data collection activity, there is a corresponding number of respondents assumed per school, an assumed number of schools associated with the activity, and a total number of respondents estimated (assuming a 90% response rate). Exhibit A-3 also provides the number of administrations for each data collection activity over the course of the three years of the study, average estimates for the amount of time required for each activity in minutes, as well as the total burden hours calculated for each activity to be completed by all of the required respondents. This proposed information collection does not impose a financial burden on any respondents, and respondents will not incur any expenses. Total annual respondents, responses and burden hours can be found in the table note.
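The arithmetic behind the exhibit can be sketched as follows. The formulas are inferred from the exhibit's figures rather than stated in the text, so they should be read as an assumption: respondents per administration are taken as respondents per school times schools times the 90% rate, and burden hours as respondents times administrations times minutes divided by 60. The function names are hypothetical.

```python
def estimated_respondents(per_school, schools, rate=0.90):
    """Estimated respondents per administration (assumed formula)."""
    return per_school * schools * rate


def burden_hours(respondents, administrations, minutes_per_response):
    """Total burden in hours across all administrations (assumed formula)."""
    return respondents * administrations * minutes_per_response / 60


# Student survey (grades 4-5): 200 students per school in 89 schools,
# one administration of 20 minutes.
students = round(estimated_respondents(200, 89))
print(students, burden_hours(students, 1, 20))

# District records: 9 districts, 3 waves, 1,080 minutes per request.
print(burden_hours(9, 3, 1080))
```

Some rows of the exhibit differ from this calculation by an hour or so, which appears to reflect whether the respondent count is rounded before or after the hours are computed.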

The introduction to supporting statement A and the responses to question B-2 provide more detail about the timeline of data collection activities listed in the exhibit and describe why these activities are essential to the study.

Exhibit A-3: Estimated Burden to Respondents |
Data Collection Activity | Assumed # of Respondents per School | Assumed # of Schools | Estimated # of Respondents per Administration (90% consent rate assumed) | Number of Administrations | Average Burden per Response (Minutes) | Total Burden (Hours) | Total Number of Responses (Over 3 Years) | Total Number of Respondents (Over 3 Years; 90% consent rate assumed) |
Teacher ratings of student behavior (assumed 20 students per teacher and 5 min. per student) | 25 | 89 | 2,003 | 1 | 100 | 3,338 | 2,003 | 2,003 |
Teacher ratings of student behavior (assumed 8 students per teacher and 5 min. per student) | 25 | 89 | 2,003 | 2 | 40 | 2,670 | 4,005 | 2,003 |
Student survey (grades 4-5) | 200 | 89 | 16,020 | 1 | 20 | 5,340 | 16,020 | 16,020 |
Non-teaching staff survey | 30 | 89 | 2,403 | 2 | 30 | 2,403 | 4,806 | 2,403 |
Teacher survey | 30 | 89 | 2,403 | 2 | 35 | 2,804 | 4,806 | 2,403 |
Site Visit: Interview with stratified random sample of 15 staff | 15 | 89 | 1,202 | 2 | 5 | 200 | 2,403 | 2,403 |
Site Visit: Interviews with stratified random sample of 15 students | 15 | 89 | 1,202 | 2 | 2 | 80 | 2,403 | 2,403 |
Site Visit: Interview with administrator | 1 | 89 | 80 | 2 | 45 | 120 | 160 | 80 |
Site Visit: Interview with Behavior Team Leader | 1 | 89 | 80 | 2 | 39 | 104 | 160 | 80 |
Phone interview with Behavior Team Leader in Program schools | 1 | 58 | 52 | 2 | 45 | 78 | 104 | 52 |
Phone interview with administrator in Program schools | 1 | 58 | 52 | 2 | 45 | 78 | 104 | 52 |
Phone interview with MTSS-B coach | NA | NA | 14 | 2 | 60 | 27 | 27 | 14 |
District Records Data Collection from 9 districts | | | 9 | 3 | 1,080 | 486 | 27 | 9 |
Total | | | 27,521 | | | 17,728 | 37,029 | 23,252 |
Annual total | | | | | | 5,909 | 12,343 | 7,751 |

Note. Assumptions regarding # of staff are based on the study team's preliminary conversations with a sample of the recruited districts regarding the size of their teaching and non-teaching staff. Assumptions regarding the # of students are based on MDRC processing of the 2012-2013 Common Core of Data regarding the number of grade 1-5 students in the recruited schools. Assumptions regarding the time to fulfill district records requests are based on MDRC's experience requesting district records for other studies that request comparable data from districts. The estimated total number of respondents is different from the estimated number of respondents per administration because a stratified random sample of students and staff is selected for site visit interviews at each wave. In calculating the number of responses per data source, we assume that each teacher's rating of all the students in their class is one response and that each district records data collection request counts as one response for each wave of collection. The “total number of respondents” column does not sum to the total # of respondents (n=23,252) because some respondents participate in multiple data collection activities. This study is being conducted over the course of three years. The annual total burden hours are 5,909, the annual total responses are 12,343 and the annual total respondents are 7,751. |

||||||||

Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

Not applicable. The information collection activities do not place any capital cost or cost of maintaining capital requirements on respondents.

Annualized Cost to the Federal Government

The total cost for the study is $17,770,151 over 5 years, for an annualized cost of $3,544,030.20.

Explanation for Program Changes or Adjustments

This submission to OMB is a new request for approval.

Time, Schedule, Publication and Analysis Plan

Schedule & Publication Plan

The project schedule is as follows:

Selection and randomization of sites by summer of 2015

MTSS-B implementation 2015-2016 school-year and 2016-2017 school-year

Completion of data collection and creation of analysis files for final report by December 2017

Analysis of findings for final report in winter and spring of 2018, and

Preparation of a project final report and public-use data file for review by the Department and release in November 2018

We anticipate a report that includes an introductory chapter, a chapter on project design and data collection, a chapter discussing the nature and implementation of the MTSS-B training and support, a chapter discussing the nature and implementation of the MTSS-B program implemented in schools as well as services in the BAU schools, and a chapter presenting impact findings. The report will follow guidance provided in the National Center for Education Statistics (NCES) Statistical Standards34 and the IES Style Guide.35

Analysis Plan

To assess the implementation of MTSS-B training and support, the study team will use data from the direct observation of CSBS training and support activities, interviews with key program implementers (MTSS-B Coach, administrator and Behavior Team Leader), and review of program data (e.g. CSBS trainer logs and training attendance sheets). Systematic review of these data will allow the team to describe the content and frequency of CSBS activities, key implementers’ perceptions of the quality of CSBS activities, and variation in the content of training delivered between and within districts.

To assess implementation of the MTSS-B program in schools, the study team will use data obtained from site visits to Program schools (interview, observation and document review) and through a review of program data (e.g. program forms, MTSS-B Coach logs). Analysis of these data will allow the team to describe the observed implementation of Tier I and Tier II activities and to describe variation between and within districts in schools’ implementation of MTSS-B.

To estimate the impacts of MTSS-B on school staff practice, school climate and student outcomes (e.g. student behavior, student achievement and receipt of special education services), the study team will rely upon teacher and student surveys, teacher ratings of student behavior, classroom observation and district records. The study team will select one primary outcome per domain. For the student outcomes, we will estimate program impacts after one and then two years of implementation using a school-level random assignment design, with random assignment occurring at the level of the districts.36 Impacts on staff practices related to behavior and on school climate will be estimated after two years of implementation. Impacts will be estimated using fixed-effects, multi-level hierarchical models. The primary student sub-group analysis will be for students identified as being at risk for future behavior problems at baseline, according to the teacher ratings of student behavior fielded in the fall of 2015. Comparison of the behavior support practices in Program and BAU schools through data obtained from site visits, staff and student surveys and classroom observations will help the team to interpret the findings from the impact analysis.
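For illustration only, a fixed-effects, multi-level impact model for a student outcome might take a form such as the one below. The exact specification is not stated in this section, so every symbol here is an assumption: $i$ indexes students, $j$ schools, and $k$ districts (treated here as random assignment blocks).

```latex
Y_{ijk} = \alpha_k + \beta \, T_{jk} + \boldsymbol{\gamma}^{\top} \mathbf{X}_{ijk} + u_{jk} + \varepsilon_{ijk},
\qquad u_{jk} \sim N(0, \tau^2), \quad \varepsilon_{ijk} \sim N(0, \sigma^2)
```

Under these assumptions, $\alpha_k$ is a fixed effect for district $k$, $T_{jk}$ equals 1 for Program schools and 0 for BAU schools, $\mathbf{X}_{ijk}$ holds baseline covariates, $u_{jk}$ is a school-level random effect reflecting the clustering of students within schools, and $\beta$ is the estimated impact of MTSS-B on the outcome.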

Approval to Not Display OMB Expiration Date

All data collection instruments will include the OMB expiration date.

Explanation of Exceptions to the Paperwork Reduction Act

No exceptions are needed for this data collection.

REFERENCES USED IN PART A

Anderson, C. M., Teri Lewis-Palmer, Anne W. Todd, Robert H. Horner, George Sugai, and N. K. Samson. 2008. "Individual student systems evaluation tool, version 2.6." Educational and Community Supports, University of Oregon.

Berry, Sandra H., Jennifer Pevar, and Megan Zander-Cotugno. 2008. “The use of incentives in surveys supported by federal grants.” Santa Monica, CA: RAND Corporation.

Bradshaw, Catherine P., Celine E. Domitrovich, Jeanne Poduska, Wendy Reinke, and Elise T. Pas. (2009). Measure of Coach and Teacher Alliance – Teacher Report. Unpublished Measure. Johns Hopkins University. Baltimore, MD.

Bradshaw, Catherine P., Tracey E. Waasdorp, Katrina J. Debnam, and Sarah Lindstrom Johnson. (2014). “Measuring school climate: A focus on safety, engagement, and the environment.” Journal of School Health, 84, 593-604. DOI: 10.1111/josh.12186

Bradshaw, Catherine P., Katrina J. Debnam, and Philip J. Leaf. (2009). Teacher Observation of Classroom Adaptation-Expanded Checklist (TOCA-EC). Unpublished Measure. Johns Hopkins University. Baltimore, MD.

Bradshaw, Catherine P., Christine W. Koth, Katherine B. Bevans, Nicholas Ialongo, and Philip J. Leaf. 2008. “The Impact of School-Wide Positive Behavioral Interventions and Supports (PBIS) on the Organizational Health of Elementary Schools.” School Psychology Quarterly, 23, 4: 463-473.

Bradshaw, Catherine P., Mary M. Mitchell, and Philip J. Leaf. 2010. “Examining the effects of School-Wide Positive Behavioral Interventions and Supports on student outcomes: Results from a randomized controlled effectiveness trial in elementary schools.” Journal of Positive Behavior Interventions, 12, 133-148.

Bradshaw, Catherine P., Tracy E. Waasdorp, and Philip J. Leaf. 2012. "Effects of School-Wide Positive Behavioral Interventions and Supports on Child Behavior Outcomes." PEDIATRICS 130, 5: e1136-e1145.

Debnam, Katrina J., Pas, Elise T., and Bradshaw, Catherine P. (2012). Secondary and tertiary support systems in schools implementing school-wide positive behavioral interventions and supports: A preliminary descriptive analysis. Journal of Positive Behavior Interventions, 14(3), 142–152.

Dillman, Don A. 2007. Mail and Internet Surveys: The Tailored Design Method (2nd ed.). New Jersey: John Wiley & Sons, Inc.

Gamse, Beth C., Howard S. Bloom, James J. Kemple, and Robin Tepper Jacob. 2008. “Reading First Impact Study: Interim Report.” NCEE 2008-4016. National Center for Education Evaluation and Regional Assistance.

Horner, Robert H. 2014. “The Role of District Leadership Teams in PBIS Implementation”. Presentation. Available at http://www.pbis.org/Common/Cms/files/pbisresources/1-Implementing%20PBIS.pptx.

Horner, R. H., Todd, A. W., Lewis-Palmer, T., Irvin, L. K., Sugai, G., & Boland, J. B. 2004. The school-wide evaluation tool (SET): A research instrument for assessing school-wide positive behavior support. Journal of Positive Behavior Interventions, 6, 3–12.

Horner, Robert H., George Sugai, Keith Smolkowski, Lucille Eber, Jean Nakasato, Anne W. Todd, and Jody Esperanza. 2009. "A randomized, wait-list controlled effectiveness trial assessing school-wide positive behavior support in elementary schools." Journal of Positive Behavior Interventions 11, 3: 133-144.

Hoy, Wayne K., and Clemens John Tarter. 1997. The Road to Open and Healthy Schools: A Handbook for Change, Elementary Edition. Thousand Oaks, CA: Corwin Press.

Hurrell, Joseph J. and Margaret A. McLaney. (1988). “Exposure to job stress: A new psychometric instrument.” Scandinavian Journal of Work Environment and Health, 14, 27-28.

James, Tracy. 1997. “Results of the Wave I incentive experiment in the 1996 survey of income and program participation.” Pp. 834-839 in Proceedings of the Survey Methods Section, American Statistical Association.

Johnson, Sarah, Elise T. Pas, and Catherine P. Bradshaw. (2015). “Identifying Factors Relating to the Coach-Teacher Alliance as Rated By Teachers and Coaches.” Manuscript submitted for publication.

Koth, Christine W., Catherine P. Bradshaw, and Philip J. Leaf. 2009. "Teacher Observation of Classroom Adaptation—Checklist: Development and factor structure." Measurement and Evaluation in Counseling and Development 42, 1: 15-30.

Maslach, Christina, and Susan E. Jackson. 1981. "The measurement of experienced burnout." Journal of Organizational Behavior 2, 2: 99-113.

National Center for Education Evaluation. 2005. Guidelines for incentives for NCEE impact evaluations. (March 22). National Center for Education Evaluation: Washington, DC.

National Center for Education Statistics. 2002. Statistical Standards. National Center for Education Statistics (NCES 2003601): Washington, D.C.

OSEP Center on Positive Behavioral Interventions and Supports. 2007. “Is School-Wide Positive Behavior Support An Evidence-Based Practice? A Research Summary.” Website: www.pbismaryland.org.

Silvia, Suyapa, Jonathan Blitstein, Jason Williams, Chris Ringwalt, Linda Dusenbury, and William Hansen. 2011. “Impacts of a Violence Prevention Program for Middle Schools: Findings after 3 Years of Implementation.” NCEE 2011-4017. National Center for Education Evaluation and Regional Assistance.

Singer, Eleanor and Richard A. Kulka. 2002. “Paying respondents for survey participation.” In M. Vander Ploeg, R.R. Moffitt, & C.F. Citro (eds), Studies of welfare populations: Data collection and research issues (pp.105-28). Washington: National Academy Press.

Social and Character Development Research Consortium. 2010. “Efficacy of schoolwide programs to promote social and character development and reduce problem behavior in elementary school children.” Washington, DC: National Center for Education Research, Institute of Education Sciences, U.S. Department of Education.

Sugai, George, Todd, Anne W., and Horner, Robert H. (2000). Effective Behavior Support (EBS) Survey: Assessing and planning behavior supports in schools. Eugene, OR: University of Oregon.

U.S. Department of Education, Institute of Education Sciences. 2005. IES Style Guide. Website: www.nces.ed.gov.

1 Horner (2014).

2 Bradshaw, Mitchell, and Leaf (2010); Horner et al. (2009); OSEP Center on Positive Behavioral Interventions and Supports (2007).

3 Note that School Leadership Team (SLT) is a term used by CSBS; in business-as-usual schools the equivalent group will more often be called a “behavior team.” We refer to the SLT when describing the CSBS program but to the “behavior team” when describing the data collection strategy (e.g., interviews with the behavior team leader).

4 Core components of MTSS-B Tier I include teaching and reinforcing specific school-wide behavioral expectations, a classroom management system to support behavioral expectations, and the use of fidelity data to monitor and improve implementation.

5 Core components of MTSS-B Tier II (CICO) include use of data to identify and progress monitor students needing additional supports, additional instruction and time for students to develop behavioral skills, additional structures with increased opportunity for feedback from staff, and sharing information about student behavior between school and families.

6 CSBS will use fidelity measures to monitor schools’ progress, identify areas in need of improvement, and make adjustments in training when necessary. CSBS plans to use the Effective Behavior Support (EBS) survey and the Tiered Fidelity Inventory (TFI) implementation tool for this purpose. CSBS will use the Behavior Education Program Fidelity of Implementation (BEP-FIM) to assess fidelity of implementation for the Tier II intervention.

7 For academic achievement, the MDES for the year 2 estimates are 0.189 for reading and 0.195 for math. The MDES for behavior ratings in year 2 is 0.089 for the high-risk subgroup and 0.069 for the random sample of all students. The MDES for teacher practice outcomes ranges from 0.118 to 0.201, depending on the parameter value assumptions and the number of classrooms observed. The MDES for school climate measures from the teacher survey in year 2 is between 0.186 and 0.410, depending on the parameter value assumptions. The MDES for the school climate measures from the student survey (grades 4 and 5) is between 0.149 and 0.285, depending on the parameter value assumptions.

8 Horner et al. (2004).

9 Bradshaw, Waasdorp, and Leaf (2012).

10 Anderson et al. (2008).

11 Debnam, Pas and Bradshaw (2012).

12 Hoy and Tarter (1997); Maslach and Jackson (1981). We have also drawn items from the NIOSH Job Stress Questionnaire to complement our measure of burnout (Hurrell and McLaney 1988).

13 Bradshaw et al. (2008).

14 Sugai, Todd, and Horner (2000).

15 Social and Character Development Research Consortium (2010).

16 Debnam, Pas, and Bradshaw (2012); Bradshaw et al. (2009); Johnson, Pas, and Bradshaw (2015).

17 Bradshaw et al. (2014).

18 Silvia et al. (2011).

19 Fielding this survey at baseline will allow the study team to identify a high-risk subgroup. Identifying this subgroup is necessary for answering one of the study’s research questions, which concerns the effects of MTSS-B on at-risk students.

20 Koth, Bradshaw and Leaf (2009).

21 Bradshaw, Waasdorp, and Leaf (2012).

22 Bradshaw, Debnam, and Leaf (2009).

23 Horner (2014).

24 IDEA, P.L. 108-446.

25 IDEA, P.L. 108-446.

26 Dr. Bradshaw is also a member of the evaluation team but served in this role because of her special expertise on training providers and MTSS-B training.

27 Berry, Pevar, and Zander-Contugno (2008); Singer and Kulka (2002).

28 James (1997).

29 For example, see Dillman (2007).

30 Gamse et al. (2008).

31 National Center for Education Evaluation (March 22, 2005).

32 Bradshaw et al. (2014).

33 Bradshaw, Waasdorp, and Leaf (2012).

34 National Center for Education Statistics (2002).

35 U.S. Department of Education, Institute of Education Sciences (2005).

36 After the district and schools had agreed to participate, the study team conducted the random assignment procedures. The multi-stage random assignment process assigned schools within the same school district, or within random assignment blocks within a district, to the treatment or control condition with roughly equal probability. After randomization, the district and schools were informed of the results.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Elizabeth Nelson |


| File Created | 2021-01-25 |
