ED Response to OMB Passback

National Assessment of Educational Progress (NAEP) 2014-2016 System Clearance

OMB: 1850-0790

MEMORANDUM OMB # 1850-0790 v.42

DATE: August 27, September 3, and September 9, 2015

TO: Shelly Martinez, Office of Management and Budget

FROM: Patricia Etienne, National Center for Education Statistics

THROUGH: Kashka Kubzdela, National Center for Education Statistics

SUBJECT: Response to OMB passback on NAEP Main 2016

1st Passback

OMB Passback: In the memo, the sentence at the end of the first paragraph on page 2 seems to go even further than the Supporting Statement regarding NAGB’s authority and is inconsistent with the text on page 8. Please remove the sentence or align it with the text in SS A and on page 8.

NCES Response: We have removed the sentence on page 2.

OMB Passback: Fourth paragraph on page 4: will the 2016 version be submitted with the next wave, or will it be a separate, early submission?

NCES Response: As in previous years, we submitted the 2015 version because the 2016 version was still under development; the two versions were expected to be very similar, since the brochure is limited to directions for using the online system. The 2016 version is now complete, and we have updated Appendix D to reflect this.

OMB Passback: On page 5, post-assessment Follow-up Survey: please indicate where in the main Supporting Statement Parts A and B this type of activity is described. If it is not, please provide an explanation of its purpose, periodicity, justification of sample size, burden estimates, etc. If it is a recurring activity, it should be added as a change request to the main supporting statement at the next opportunity. Finally, we don’t see a document in ROCIS called “Volume II”, and we do not see this survey instrument as an IC. Please indicate what it is called in ROCIS and where it is located.

NCES Response: The post-assessment follow-up survey became a standard NAEP process after the last System Clearance was submitted, so it was not described in the main Supporting Statement Parts A and B. NCES will be submitting a new System Clearance this fall for the 2017-2019 assessments and will include a description of the post-assessment follow-up survey in that package.

The purpose of the survey is to gather additional information from the school coordinators who participated in NAEP in order to improve our customer service. The survey questions therefore focus on the school coordinators’ experience with, and satisfaction with, the pre-assessment and assessment-day activities. NCES uses this information to evaluate and improve the processes for future years. Because all school coordinators participate in the debriefing interview on the day of the assessment, it is not necessary to follow up with all of them for this more detailed information. However, it is still important to interview enough school coordinators to obtain a variety of responses and cover the breadth of their experiences; a 25% sample is therefore appropriate. Given the length of the interview (18 questions), the burden is estimated at 10 minutes per interview, per footnote 1 to the burden table (table 5) in Volume I. The survey is provided in ROCIS as “Part 4 2016 Main NAEP Assessment Feedback Forms” in the IC. We have clarified the document reference in Volume I.
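As an illustration of the arithmetic behind this estimate, the sketch below computes total burden hours from the 25% sampling rate and the 10-minutes-per-interview figure stated above. The number of participating school coordinators is not given in this memo, so the count used here is a hypothetical placeholder, and rounding the sample up to a whole interview is an assumption rather than an NCES rule.

```python
import math

# Figures stated in the memo: a 25% follow-up sample and 10 minutes per interview.
SAMPLE_RATE = 0.25
MINUTES_PER_INTERVIEW = 10


def followup_burden_hours(n_coordinators: int) -> tuple[int, float]:
    """Return (sampled coordinators, total burden hours).

    n_coordinators is a hypothetical input; the sample size is rounded up to
    a whole interview (an assumption, not an NCES rule).
    """
    n_sampled = math.ceil(n_coordinators * SAMPLE_RATE)
    hours = n_sampled * MINUTES_PER_INTERVIEW / 60
    return n_sampled, hours


if __name__ == "__main__":
    # 1,000 coordinators is an illustrative placeholder, not a NAEP count.
    n, hrs = followup_burden_hours(1000)
    print(f"{n} interviews, {hrs:.1f} burden hours")  # 250 interviews, 41.7 burden hours
```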

OMB Passback: Page 6, “…based on the most recent data, 22 percent of grade 4 students…” please provide a source for these estimates.

NCES Response: The percentages of students identified as SD/ELL are from 2013 and 2014, the most recent assessment years for which data are available. This information can be found in the NAEP Data Explorer (http://nces.ed.gov/nationsreportcard/naepdata/dataset.aspx) under the variable “Student disability or English Language Learner status”. We added a footnote to that effect.

OMB Passback: Page 7, table 5: the e-filing burden is 1 hour for 48% of schools. What is the burden for non-e-filers to do comparable tasks? Where is that described?

NCES Response: E-filing can be completed at the state, district, or school level. If it is completed at the state level, the task is performed by the state coordinator; because this position is funded by NCES, no respondent burden is associated with this time. The 48% is the estimated percentage of schools for which either school or district personnel perform the e-filing. This information has been added to Volume I.

OMB Passback: Please confirm the degree to which all new or revised pilot questions have been small-scale tested, e.g., via cog labs. Please provide access to summaries of that testing.

NCES Response: All items proposed for piloting have been tested in cognitive labs. Please see Appendix F of this submission for the summary reports of the cognitive interviews (please click on each icon to retrieve each embedded report document).

OMB Passback: Please provide justification for the new items (topic-specific rather than item-specific is fine).

NCES Response: The topics for item development were chosen based on literature reviews, consultation with expert groups, questionnaire experts, and the National Assessment Governing Board. Specifically, a white paper was prepared that served as a basis for the development of new core questions (the paper can be retrieved at: https://www.nagb.org/content/nagb/assets/documents/what-we-do/quarterly-board-meeting-materials/2014-8/tab12-plans-for-naep-contextual-modules.pdf).

OMB Passback: Please provide detail about the design of the pilot, such as research questions and testing of different treatments to compare against one another, globally and for different question sets (e.g., parental occupation). Please also provide details about the nature of the analysis that NCES will do with the pilot results in order to perform operational use of new and revised items.

NCES Response: When evaluating pilot items for potential inclusion in the operational administrations, item category frequencies, proportions of missing values, and relationships of individual items with achievement results are used to determine whether newly developed questions function as expected in a large-scale sample. In addition, factor analysis and analysis of timing data will be applied when determining which pilot items are used in the operational administrations.

The pilot questionnaires include alternative versions of items targeting the same construct in order to identify the best possible items for operational NAEP. This procedure was previously applied successfully in the NAEP TEL 2013 pilot. The 2016 pilot design is based on 8 different forms, designed so that each student receives only 15 minutes of questions (an approximately 5-minute block of core questions and an approximately 10-minute block of subject-specific questions). Every item appears in two or more forms, which ensures a sufficient sample size and allows items to be compared in different positions in the overall questionnaire. The 8 forms are also designed so that each student receives only one of the alternative versions of an item (e.g., a student answers only one of the different sets of parental occupation items). Alternative item versions will be compared on the following criteria:

category frequencies (specifically, the ability of each version to capture variation in student responses);

missing values (specifically, whether one item version has lower missing rates);

timing data (specifically, whether students need less time to answer one item version than another);

correlations with achievement (specifically, whether one item version shows stronger relationships with achievement than others); and

factor structure (specifically, whether one item version contributes more to a desired questionnaire index (e.g., socioeconomic status) than other versions).

After the pilot assessments, items will be chosen to maximize validity and reliability, while minimizing student burden.
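The form constraints described for the 2016 pilot (every item in two or more of the 8 forms, and no form carrying more than one alternative version of the same construct) lend themselves to a mechanical check. The sketch below assumes a hypothetical layout in which each form is a list of item IDs and each alternative version is mapped to a construct label; the item IDs and labels are invented for illustration and do not come from the NAEP forms.

```python
from collections import Counter


def check_forms(forms, construct_of):
    """Check hypothetical pilot forms against two stated design constraints.

    forms:        dict of form ID -> list of item IDs on that form
    construct_of: dict of item ID -> construct label, for items that are
                  alternative versions of the same construct
    Returns a list of problem descriptions (empty if both constraints hold).
    """
    problems = []

    # Constraint 1: every item appears in two or more forms.
    appearances = Counter(item for items in forms.values() for item in items)
    for item, count in sorted(appearances.items()):
        if count < 2:
            problems.append(f"{item} appears in only {count} form")

    # Constraint 2: a form carries at most one version of each construct.
    for form_id, items in sorted(forms.items()):
        seen = set()
        for item in items:
            construct = construct_of.get(item)
            if construct is None:
                continue
            if construct in seen:
                problems.append(f"{form_id} has more than one version of {construct}")
            seen.add(construct)

    return problems


if __name__ == "__main__":
    # Eight forms: version A of a construct on forms 1-4, version B on forms 5-8.
    forms = {f"F{i}": ["PO_A" if i <= 4 else "PO_B", "CORE1"] for i in range(1, 9)}
    construct_of = {"PO_A": "parental_occupation", "PO_B": "parental_occupation"}
    print(check_forms(forms, construct_of))  # []
```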

OMB Passback: How well do the items on victimization and/or bullying fit with the interagency work, including questions asked in SSOCS and CRDC? Where they differ, why?

NCES Response: The bullying items in NAEP conceptually overlap with the bullying and cyberbullying items in the student questionnaire administered as the 2013 School Crime Supplement (SCS) to the National Crime Victimization Survey (found at https://nces.ed.gov/programs/crime/pdf/student/SCS13.pdf). One key difference is that the SCS covers more bullying behaviors than the NAEP pilot items do. Another is that the SCS uses a dichotomous (yes/no) response format, whereas NAEP uses more than two response categories to capture more variation in student responses. Finally, the NAEP questionnaires include fewer questions on bullying and victimization in order to keep student burden low.

NAEP item development was also informed by the TIMSS 2015 pilot questionnaires and the draft items for the National School Climate Survey.

OMB Passback: Neither SS A nor SS B provides details about how the questionnaires are administered to the target populations, including mode of administration. These details would typically be in SS B. Please provide them here; they should also be added as a change request to the main supporting statement at the next opportunity.

NCES Response: The mode of administration depends on the assessment and the subject, as well as on policy changes as technology use and capabilities increase. This specific information is included in each annual submittal (wave), such as this one, particularly in Volume 1, Section 1 (Explanation for the Submittal). The details of the different types of questionnaires can be found in Volume 1, Section 4 (Information Pertaining to the 2016 Questionnaires in this Submittal). NCES will be submitting a new System Clearance this fall for the 2017-2019 assessments and will also include a description of the expected modes of administration in that package.

OMB Passback: Are any student questionnaires provided electronically and if so, are there skip patterns?

NCES Response: For the Arts assessment, the student questionnaires appear in the printed test booklets. For the digitally based pilot assessments, the student questionnaires are provided electronically, as part of the DBA system. Some skip patterns will be used in the 2016 DBA student questionnaires.

OMB Passback: How is a student supposed to respond to the series of “father” and “mother” questions if they (1) live with only one parent, (2) know only one parent, or (3) have two mothers or two fathers?

NCES Response: NAEP administrators are provided with directions on how to respond to student questions during the assessment, including questions related to these circumstances. Generally, students are instructed to answer “I don’t know” or to respond based on, for example, the mother with the higher level of education.

2nd Passback

OMB Passback: NAEP should make a greater effort to follow the emerging standards or best practices across the Federal statistical system on measurement issues. Specifically, it should explain in detail how the bullying question fulfills the two-prong test of the Federal bullying definition and specifically how it is designed to get comparable answers to other NCES surveys.

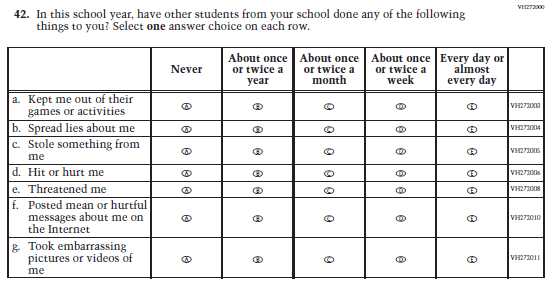

NCES Response: Thank you for the suggestion that NAEP better align with other surveys and measurement practices. In future rounds of development, NCES will better align the NAEP survey questions with other surveys. In the meantime, NCES is removing the bullying questions from the 2016 pilot (i.e., item 40 from the 2016 Pilot Grade 8 Core Student Items questionnaire and item 42 from the 2016 Pilot Grade 12 Core Student Items questionnaire; also reproduced above).

OMB Passback: Further, the parent question and NCES’s response to OMB’s question reflect a poor understanding of the Federal-wide interagency work on the issues of household relationships. Andy Zuckerberg has participated in both the Measuring Household Relationships and the LGBT groups and can advise on ways to update the questions.

NCES Response: Following the recommendation from OMB, we consulted with Andy Zuckerberg, as well as Sarah Grady and Carolyn Fidelman, this week about the parent questions. Much of the work that NCES has done in this area in other surveys (particularly the National Household Education Survey and the Middle Grades Longitudinal Study) is focused on questions that are geared towards parents. The NAEP questions will need to be worded so that they are both inclusive and understandable to students, particularly 4th graders. In future development cycles, NAEP will explore how to better address non-traditional families in the NAEP context where the intended respondents are students as young as 9 years old. This exploration will include collaborating with Andy and managers of other surveys within NCES, as well as learning from surveys conducted by other agencies, and will result in questions that are revised appropriately. In doing so, the NAEP questions will be better aligned with the practices and language used across federal surveys.

OMB Passback: Please provide improved documentation of the survey questions that depicts skip patterns and makes clearer what the 8 different matrices of questions comprise.

NCES Response: The attached Excel document shows the skip pattern sequences for all items that are included in the 8 student questionnaires (please note that the student core and each subject-specific set of questions is on a separate tab in the document). Each student will receive one version of the core and one version of the subject-specific questions.
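For readers unfamiliar with skip patterns, the sketch below shows one way such sequences could be represented and applied: a rule attached to a question sends the respondent forward to a later question when the answer matches a trigger value. The question IDs, answer values, and rule format are hypothetical and are not taken from the attached Excel document; the sketch also assumes that every skip target comes later in the sequence than the question that triggers it.

```python
# Hypothetical skip rules: question ID -> (answers that trigger the skip, target ID).
SKIP_RULES = {
    "Q10": ({"No"}, "Q13"),  # e.g., skip follow-ups Q11-Q12 when Q10 is "No"
}


def route(order, answers, rules=SKIP_RULES):
    """Return the question IDs a respondent would see, in order.

    order:   full question sequence for one questionnaire version
    answers: dict of question ID -> the respondent's answer
    Assumes every skip target appears later in `order` than its source.
    """
    shown = []
    i = 0
    while i < len(order):
        qid = order[i]
        shown.append(qid)
        rule = rules.get(qid)
        if rule is not None and answers.get(qid) in rule[0]:
            i = order.index(rule[1])  # jump forward to the target question
        else:
            i += 1
    return shown


if __name__ == "__main__":
    sequence = ["Q10", "Q11", "Q12", "Q13"]
    print(route(sequence, {"Q10": "No"}))   # ['Q10', 'Q13']
    print(route(sequence, {"Q10": "Yes"}))  # ['Q10', 'Q11', 'Q12', 'Q13']
```

Keeping the rules as data separate from the routing loop mirrors how a skip-pattern table can be documented for each core and subject-specific version without changing the routing logic.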