Attachments D-I

Initiative to Reduce/Eliminate Seclusion and Restraint: State Incentive Grants and Coordinating Center

OMB: 0930-0271

ATTACHMENT D

Consultation Outside the Agency for the Facility/Program Characteristics Inventory and the Inventory of Seclusion and Restraint Reduction Interventions

Nationally Recognized Experts:

Dr. Robert Bernstein, Executive Director, Bazelon Center for Mental Health Law, 1101 15th Street, NW Suite 1212, Washington, DC 20005, (202) 467-5730.

Curtis L. Decker, Executive Director, National Association of Protection and Advocacy Systems (NAPAS), 900 Second Street NE, Suite 211, Washington, DC 20002, (202) 408-9514.

Leslie Morrison, MS, RN, Esq., Supervising Attorney, Protection and Advocacy Inc., 433 Hegenberger Rd, Suite 220, Oakland, CA 94621, (510) 430-8033.

Dr. Steve Onken, Assistant Professor, Columbia University School of Social Work, McVickar Hall, Mail Code 4600, 622 West 113th Street, New York, NY 10025, (212) 851-2243.

Trina Osher, M.A., Coordinator of Research and Practices, Federation of Families for Children’s Mental Health, 1101 King Street, Suite 420, Alexandria, VA 22314, (703) 684-7710.

Susan Stefan, JD, Attorney, Center for Public Representation, 246 Walnut Street, Newton, MA 02460, (617) 965-0776.

Jennifer Urff, JD, Senior Program Associate, Advocates for Human Potential, Inc., c/o UMASS Donahue Institute, 100 Venture Way, Suite 5, Hadley, MA 01035, (413) 587-2418.

Kevin Huckshorn, RN, MSN, CAP, ICADC, Director of Technical Assistance, National Association of State Mental Health Program Directors (NASMHPD), 66 Canal Center Plaza, Suite 302, Alexandria, VA 22314, (703) 139-9333 ext. 140.

Sarah R. Callahan, MSHA, Deputy Director of Technical Assistance, National Association of State Mental Health Program Directors (NASMHPD), 66 Canal Center Plaza, Suite 302, Alexandria, VA 22314, (703) 139-9333 ext. 141.

State Mental Health Agencies:

Vickie Cousins, Director of the Office of Consumer Affairs, South Carolina Department of Mental Health, PO Box 485, Columbia, SC 29202, (803) 898-8621.

Christina M. Donkervoet, MSN, Hawaii Department of Health, Child and Adolescent Mental Health Division, 3627 Kilauea Avenue, Room 101, Honolulu, HI 96616, (803) 733-9339.

Dr. Rupert Goetz, Medical Director, Hawaii State Hospital, 45-710 Keaahala Road, Kaneohe, HI 96744, (808) 236-8246.

Lynn Goldman, LCSW, Project Coordinator, Illinois Division of Mental Health, 160 N. LaSalle, 10th Floor, Chicago, IL 60601, (312) 814-4908.

Dr. Brian M. Hepburn, Maryland Department of Health and Mental Hygiene, 55 Wade Avenue, Dix Building, Catonsville, MD 21228, (410) 402-8452.

Dr. Pablo Hernandez, Superintendent, Wyoming State Hospital, PO Box 177, Evanston, WY 82931, (307) 789-3464 ext. 354.

Dr. Judy Hall, Washington Mental Health Division, PO Box 45320, Olympia, WA 98504-5320, (360) 902-0874.

Dr. Robert Keane, Deputy Commissioner, Massachusetts Department of Mental Health, 25 Staniford Street, Boston, MA 02114, (617) 626-8106.

William Payne, Louisiana Office of Mental Health, P.O. Box 4049, Baton Rouge, LA 70821, (225) 342-5956.

David Pratt, Kentucky Department for Mental Health and Mental Retardation Services, 275 E. Main Street, Frankfort, KY 40621.

Dr. Paula Travis, Kentucky Department for Mental Health and Mental Retardation Services, 275 E. Main Street, 5W-C, Frankfort, KY 40621, (502) 523-7020.

Felix Vincenz, Missouri Department of Mental Health, Comprehensive Psychiatric Services, 1706 East Elm Street, Jefferson City, MO 65102, (573) 592-4100.

Federal Personnel:

Dr. Paul Wohlford, Psychologist, Substance Abuse and Mental Health Services Administration, Center for Mental Health Services, 1 Choke Cherry, Room 2-1113, Rockville MD 20850, (240) 276-1759.

Dr. John Morrow, Chief of State Planning and Systems Development Branch, Division of State and Community Systems Development, Substance Abuse and Mental Health Services Administration, Center for Mental Health Services, 1 Choke Cherry, Room 2-1116, Rockville MD 20850, (240) 276-1783.

Dr. Nainan Thomas, Senior Public Health Advisor, Substance Abuse and Mental Health Services Administration, Center for Mental Health Services, 1 Choke Cherry, Room 6-1037, Rockville MD 20850, (240) 276-1744.

Dr. Nancy Kennedy, Health Care Analyst, Substance Abuse and Mental Health Services Administration, Center for Substance Abuse Prevention, 1 Choke Cherry, Room 4-1049, Rockville MD 20850, (240) 276-2497.

ATTACHMENT E

Introductory Letter for the Facility/Program Characteristic Inventory and the Inventory of Seclusion and Restraint Reduction Interventions

Dear {Targeted Respondent – Insert Name}:

As you know, CSR Incorporated (CSR) is working with the Center for Mental Health Services (CMHS) in the evaluation of the SAMHSA State Incentive Grants to Build Capacity for Alternatives to Restraint and Seclusion Project. Within the next few weeks, we will begin accepting data for the evaluation. We will begin with two instruments: 1) the Facility/Program Characteristic Inventory, and 2) the Inventory of Seclusion and Restraint Reduction Interventions. The table below describes the purpose of each instrument, frequency of collection, method of submitting the data, and expected response burden in hours per submission.

INSTRUMENT | PURPOSE | FREQUENCY OF COLLECTION | SUBMISSION METHOD | ESTIMATED HOURS TO COMPLETE |
Facility/Program Characteristic Inventory | Collects information about the types of facilities/programs, characteristics of persons served, staffing patterns, and unit specific data. | Baseline | On-line submission | 2 |
Inventory of Seclusion and Restraint Reduction Intervention | Collects information about components of the interventions that are implemented. | Baseline and approximately annually (3 other times) | On-line submission | 8 |

You or someone within your state identified {Targeted Respondent – Insert Name} as the data contact for {facility/or program name}. In the next few days, {Targeted Respondent – Insert Name} will receive an email from CSR with detailed instructions for data entry and submission, as well as dates for data submission training.

If you have any questions regarding the instruments or the evaluation, please contact me at 703-741-7124, or via email at seclusion-restraint.info@csrincorporated.com.

The evaluation will help SAMHSA identify best practice approaches to reducing and ultimately eliminating the use of restraint and seclusion in mental health facilities. Thank you in advance for your help.

Sincerely,

Daniel Falk, Ph.D.

Evaluation Project Director

CSR Incorporated

ATTACHMENT F

Introductory Letter for the Seclusion and Restraint Event Data Matrix

Dear {Targeted Respondent – Insert Name}:

As you know, CSR Incorporated (CSR) is working with the Center for Mental Health Services (CMHS) in the evaluation of the SAMHSA State Incentive Grants to Build Capacity for Alternatives to Restraint and Seclusion Project. Within the next few weeks, we will begin accepting data for the evaluation using an instrument called the Seclusion and Restraint Event Data Matrix. The table below describes the purpose of this instrument, frequency of collection, method of submitting the data, and expected response burden in hours per submission.

INSTRUMENT | PURPOSE | FREQUENCY OF COLLECTION | SUBMISSION METHOD | ESTIMATED HOURS TO COMPLETE EACH MATRIX |
Seclusion and Restraint Event Data Matrix | Collects bimonthly data about the use of restraint and seclusion. | Monthly for the next two years | On-line submission | 8 |

You or someone within your state identified {Targeted Respondent – Insert Name} as the data contact for {facility/or program name}. In the next few days, {Targeted Respondent – Insert Name} will receive an email from CSR with detailed instructions for data entry and submission, as well as dates for data submission training.

If you have any questions regarding the instruments or the evaluation, please contact me at 703-741-7124, or via email at seclusion-restraint.info@csrincorporated.com.

The evaluation will help SAMHSA identify best practice approaches to reducing and ultimately eliminating the use of restraint and seclusion in mental health facilities. Thank you in advance for your help.

Sincerely,

Daniel Falk, Ph.D.

Evaluation Project Director

CSR Incorporated

ATTACHMENT G

Instructions for Data Entry/Submission for the Facility/Program Characteristic Inventory and the Inventory of Seclusion and Restraint Reduction Interventions

PURPOSE OF THE EVALUATION

The Center for Mental Health Services (CMHS) has funded 8 State Incentive Grants (SIG) to Build Capacity for Alternatives to Restraint and Seclusion. The main purpose of the evaluation is to determine whether the implementation of best practice interventions has a positive effect on reducing rates of seclusion and restraint within mental health facilities.

THE ROLE OF CSR INCORPORATED

CMHS contracted with CSR Incorporated (CSR) to conduct an independent evaluation of the project. CSR is charged with handling all facets of the evaluation including data submission training, data collection, and data analysis.

DESCRIPTION OF INSTRUMENTS AND DATA SUBMISSION

We will begin by using two instruments in the evaluation: 1) the Facility/Program Characteristic Inventory, and 2) the Inventory of Seclusion and Restraint Reduction Interventions. The table below describes the purpose of each instrument, frequency of collection, and method of submitting the data.

INSTRUMENT | PURPOSE | FREQUENCY OF COLLECTION | SUBMISSION METHOD |
Facility/Program Characteristic Inventory | Collects information about the types of facilities/programs, characteristics of persons served, staffing patterns, and unit specific data. | Baseline | Sections I-III: On-line data entry |
Inventory of Seclusion and Restraint Reduction Intervention | Collects information about components of the interventions that are implemented. | Baseline and approximately annually (3 other times) | On-line data entry |

The final OMB-approved data protocol is attached and can also be downloaded from

http://seclusion-restraint.csrincorporated.com/.

DATA ENTRY/SUBMISSION

CSR has developed the Alternatives to Restraint and Seclusion (ARS) website. As a site administrator you will have access to the data entry and data transfer capabilities from the ARS website. In order to access the data entry and data transfer section of the ARS website you will be required to use a valid site username and password. Please follow the steps below to access the ARS website Data Entry/Submission Section.

Step 1: Go to the following link to access the ARS website:

http://seclusion-restraint.csrincorporated.com/.

Step 2: Click on Data Entry/Submission Section

Step 3: Log into the Data Entry/Submission Section by entering your Username and Password, which are specified below. Please note that both are case sensitive.

Username: <username>

Password: <password>

You will then enter the section, which contains instructions and guidelines for data entry and/or data transfer.

TECHNICAL ASSISTANCE

There are several ways to obtain assistance regarding data entry, data submission, or the ARS website initiative.

By email: Questions can be emailed directly to seclusion-restraint.info@csrincorporated.com

Via the website: Click on the “Help” button on the Data Entry/Data Transfer screen

By telephone: Call 703-741-7124.

DATA SUBMISSION SCHEDULE

INSTRUMENT | SUBMISSION DATE | SUBMISSION METHOD |
Facility/Program Characteristic Inventory | March 2008 | Sections I-III: On-line data entry |
Inventory of Seclusion and Restraint Reduction Intervention | March 2008, October 2008, April 2010 | On-line entry |

ATTACHMENT H

Instructions for Data Entry/Submission for the Seclusion and Restraint Event Data Matrix

PURPOSE OF THE EVALUATION

The Center for Mental Health Services (CMHS) has funded 8 State Incentive Grants (SIG) to Build Capacity for Alternatives to Restraint and Seclusion. The main purpose of the evaluation is to determine whether the implementation of best practice interventions has a positive effect on reducing rates of seclusion and restraint within mental health facilities.

THE ROLE OF CSR INCORPORATED

CMHS contracted with CSR Incorporated (CSR) to conduct an independent evaluation of the project. CSR is charged with handling all facets of the evaluation including data submission training, data collection, and data analysis.

DESCRIPTION OF INSTRUMENTS AND DATA SUBMISSION

We will introduce a new instrument in the evaluation called the Seclusion and Restraint Event Data Matrix. Described in the table below is the purpose of this instrument, frequency of collection, and method of submitting the data.

INSTRUMENT | PURPOSE | FREQUENCY OF COLLECTION | SUBMISSION METHOD |
Seclusion and Restraint Event Data Matrix | Collects bimonthly data about the use of restraint and seclusion. | Monthly for the next two years | Data file transfer |

The final OMB-approved data protocol is attached and can also be downloaded from

http://seclusion-restraint.csrincorporated.com/.

DATA ENTRY/SUBMISSION

CSR has developed the Alternatives to Restraint and Seclusion (ARS) website. As a site administrator you will have access to the data entry and data transfer capabilities from the ARS website. In order to access the data entry and data transfer section of the ARS website you will be required to use a valid site username and password. Please follow the steps below to access the ARS website Data Entry/Submission Section.

Step 1: Go to the following link to access the ARS website:

http://seclusion-restraint.csrincorporated.com/.

Step 2: Click on Data Entry/Submission Section

Step 3: Log into the Data Entry/Submission Section by entering your Username and Password, which are specified below. Please note that both are case sensitive.

Username: <username>

Password: <password>

You will then enter the section, which contains instructions and guidelines for data entry and/or data transfer.

TECHNICAL ASSISTANCE

There are several ways to obtain assistance regarding data entry, data submission, or the ARS website initiative.

By email: Questions can be emailed directly to seclusion-restraint.info@csrincorporated.com

Via the website: Click on the “Help” button on the Data Entry/Data Transfer screen

By telephone: Call 703-741-7124.

DATA SUBMISSION SCHEDULE

INSTRUMENT | SUBMISSION DATE | SUBMISSION METHOD |
Seclusion and Restraint Event Data Matrix | Data submission is at the end of the first week of the month after the reporting month (e.g. November 2008 will be submitted on January 1, 2009) (See Figure 1 for the submission schedule) | Data file transfer |

Figure 1. Draft Schedule for Submitting the Seclusion and Restraint Event Data (SRED) Matrix

ATTACHMENT I

Inventory of Seclusion and Restraint Reduction Interventions (ISRRI)

Reviewer’s Guide

Table of Contents

What is the ISRRI Reviewer’s Guide?

Who should complete the ISRRI Review?

How should the results of the ISRRI review be submitted?

How should this guide be used?

What are the ISRRI Worksheets?

What is the relationship of the ISRRI to the NTAC Six Core Strategies©?

What is the structure of the ISRRI?

What kinds of measures are used?

What are the plans for future development of the ISRRI?

III. CONDUCTING THE ISRRI REVIEW

Who should conduct the review?

What are the sources of information for completing the ISRRI?

What is the “baseline” measurement?

What is the measurement period?

What is the meaning of “date” for each item?

What is the procedure for selecting debriefing reports for review?

IV. ISRRI WORKSHEET DESCRIPTION

Worksheet Item Response Categories

V. OBTAINING SUPPORT FOR COMPLETING THE ISRRI WORKSHEETS

I. INTRODUCTION

What is the ISRRI Reviewer’s Guide?

This guide is designed to assist agencies, facilities and individuals in completing the Inventory of Seclusion and Restraint Reduction Interventions (ISRRI), a part of the common protocol for evaluation of the Substance Abuse and Mental Health Services Administration Alternatives to Seclusion and Restraint State Infrastructure Grant (SAMHSA SIG) program, referred to here as the S/R Reduction Program. The Reviewer’s Guide consists of guidelines, recommendations, and worksheets that produce summary scores entered into the final ISRRI form. When the information needed to complete the ISRRI has been collected using the worksheets, a scoring algorithm will be used by the Independent Evaluator, CSR Incorporated (CSR), to convert the items on the worksheets to scores on the ISRRI.

Who should complete the ISRRI Review?

The ISRRI worksheets are designed to be completed by a representative or a team from each facility, or by an outside organization or individual. Reviewers may be NTAC consultants, staff participating in the S/R Reduction Program, agency staff not directly involved such as Quality Improvement/Quality Assurance staff, local evaluators identified in grantees’ SIG proposals, or other agency staff. Whenever possible, two considerations should govern the choice of reviewer: that the reviewer is knowledgeable about S/R reduction interventions generally, and that the reviewer can be an objective observer of a particular facility’s initiative. Although the ISRRI is designed to minimize the need for subjective decisions, some subjective judgment is inevitably required in choosing among response options. This element of subjectivity requires some knowledge of S/R reduction and also creates the potential for unconscious bias when the reviewer has a stake in the program’s success. When feasible, therefore, the reviewer should be someone whose function allows for maximum objectivity. Conducting multiple reviews by a diverse set of reviewers is also a way of reducing bias and identifying it when it occurs. The guide is therefore addressed to the widest possible range of reviewers (for more discussion of reviewers, see Section III below).

The Guide will be supplemented by additional materials posted on the S/R reduction project Web site.

How should the results of the ISRRI review be submitted?

The preferred method for submitting information collected in the ISRRI review is online via the SIG grant Web site. There you will find the worksheets where data may be entered directly. Access to this section of the Web site requires a password; if you do not have one, please contact the CSR evaluation team.

How should this guide be used?

Following this introduction, Section II provides background information on the guide, its relationship to the ISRRI final form, the S/R Reduction model on which the ISRRI is based, and plans for the future. If your interest is in guidance on how to prepare for and conduct the ISRRI, you may wish to go directly to Section III, “Conducting the ISRRI Review.” Section IV describes the format of the worksheets, which allow you to record information about the implementation of the S/R reduction initiative at the facility. Following the guide carefully will ensure consistency and reliability in ISRRI scores across facilities and among raters.

A note on terminology: Program, Intervention, Component, Initiative, and Review

Throughout the guide, the SAMHSA S/R Reduction SIG is referred to as “the program.” The best-practice model for reducing S/R implemented by the grantee sites with grant funding is described as “the intervention.” Elements of the intervention measured independently (e.g., workforce development) are referred to as “components.” Activities designed to reduce the use of S/R that are undertaken by the sites, whether funded by the grant program or initiated independently or prior to the grant, and regardless of type, are referred to generically as “S/R reduction initiatives.” “Review” refers to the process of collecting information and completing the worksheets.

II. OVERVIEW

What is the ISRRI?

The ISRRI is a tool for measuring, in standardized form, the nature and extent of interventions implemented for the purpose of reducing seclusion and restraint at a particular facility. It is one of three components of the Common Protocol for evaluation of the S/R Reduction Program, the others being the Facility/Program Characteristic Inventory and the Seclusion and Restraint Event Data Matrix (pending OMB clearance).

The ISRRI is a type of instrument known as a fidelity scale. Fidelity scales are developed to measure the extent to which a program in practice adheres to a prescribed treatment model. Fidelity scales are useful for explaining program impacts, identifying critical components (“active ingredients”), and guiding replication of interventions, as well as for self-evaluation and accountability. Because implementation is measured as a scale (fidelity score), it allows assessment of the effect of the degree of implementation as it varies across sites. The ISRRI is a new scale developed specifically for the SIG project. It differs from some other fidelity scales in that it is designed to capture and assess the relative impact of a wide range of activities rather than an established evidence-based practice with a known set of critical components. Thus, it will serve in the development of the SIG interventions as evidence-based practices.

The ISRRI is also somewhat analogous to an organizational readiness checklist, such as the General Organizational Index included in the SAMHSA Evidence-Based Practice (EBP) Implementation Resource Kits¹ or Dr. David Colton’s Checklist for Assessing Your Organization’s Readiness for Reducing Seclusion and Restraint.² These differ from the ISRRI, however, in that they are broader in scope, aiming to collect a wide range of information related to readiness for organizational change, whereas the ISRRI seeks to enumerate the S/R Reduction activities that have been conducted by the facility at the time of the assessment.

What are the ISRRI Worksheets?

The worksheets included in the Guide are to be used by reviewers to obtain the information that will later be used by CSR for scoring the ISRRI. A scoring algorithm will be used to calculate component and overall intervention model scores for the final ISRRI. Since S/R reduction initiatives are still at the evaluation stage (prior to being demonstrated evidence-based practices), the primary purpose of the ISRRI, at this point, is to identify the relative effectiveness and ease of implementation of the various components.

It is not expected that any single facility or program will obtain a perfect score on the ISRRI, which conceptually represents the ideal intervention. For example, few if any facilities collect information on “near-misses,” i.e., successful avoidance of an S/R event. This item is included, however, because some have noted the value of this information and indicated that such measures are under development.

What is the relationship of the ISRRI to the NTAC Six Core Strategies©?

The ISRRI is intended to be generic and developmental; that is, to be used to identify and measure the hypothesized critical elements or components of any particular S/R reduction initiative implemented at the grantee sites, and to support their development as evidence-based practices. Thus the scale is intended to provide information about the individual importance of each of the components of S/R reduction initiatives. The components of the ISRRI are based on the NTAC Six Core Strategies for Reducing Seclusion and Restraint©, which are in turn derived from an extensive review of the literature and best practices in the field. However, the ISRRI is also intended for use with S/R reduction programs in general. For this reason, it includes some additional items to capture potential S/R reduction initiatives that may not be included in the Core Strategies, and it varies slightly from the NTAC model in how individual items are classified according to components. Notably, some elements from the Core Strategies are grouped together in a separate, additional component, “Elevating Witnessing/Oversight.”

What is the structure of the ISRRI?

The ISRRI consists of seven domains, representing individual components of S/R Reduction programs such as NTAC’s. Each domain has one or more subdomains, for a total of 24 subdomains. Each subdomain includes one to ten specific activities, referred to as items. The Worksheets are designed to facilitate the collection of information about the status of these activities. Domains and subdomains are listed below.

ISRRI Domain and Subdomain Categories:

I. LEADERSHIP

L.1 State Policy

L.2 Facility Policy

L.3 Facility Action Plan

L.4 Leadership for Recovery Oriented and Trauma-Informed Care

L.5 CEO

L.6 Medical Director

L.7 Non-Coercive Environment

L.8 Kickoff Celebration

L.9 Staff Recognition

II. DEBRIEFING

D.1 Immediate Post-Event

D.2 Formal Debriefing

III. USE OF DATA

U.1 Data Collected

U.2 Goal-Setting

IV. WORKFORCE DEVELOPMENT

W.1 Structure

W.2 Training

W.3 Supervision and Performance Review

W.4 Staff Empowerment

V. TOOLS FOR REDUCTION

T.1 Implementation

T.2 Emergency Intervention

T.3 Environment

VI. INCLUSION

I.1 Consumer Roles

I.2 Family Roles

I.3 Advocate Roles

VII. OVERSIGHT/WITNESSING

O.1 Elevating Oversight
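As an illustration only, the domain and subdomain list above can be written as a simple data structure and checked against the stated totals of seven domains and 24 subdomains. The variable and function names below are hypothetical and are not part of the ISRRI or of any CSR system.

```python
# Domain and subdomain labels transcribed from the list above.
# The dictionary layout and all names here are illustrative assumptions.
ISRRI_DOMAINS = {
    "I. LEADERSHIP": [
        "L.1 State Policy",
        "L.2 Facility Policy",
        "L.3 Facility Action Plan",
        "L.4 Leadership for Recovery Oriented and Trauma-Informed Care",
        "L.5 CEO",
        "L.6 Medical Director",
        "L.7 Non-Coercive Environment",
        "L.8 Kickoff Celebration",
        "L.9 Staff Recognition",
    ],
    "II. DEBRIEFING": ["D.1 Immediate Post-Event", "D.2 Formal Debriefing"],
    "III. USE OF DATA": ["U.1 Data Collected", "U.2 Goal-Setting"],
    "IV. WORKFORCE DEVELOPMENT": [
        "W.1 Structure",
        "W.2 Training",
        "W.3 Supervision and Performance Review",
        "W.4 Staff Empowerment",
    ],
    "V. TOOLS FOR REDUCTION": [
        "T.1 Implementation",
        "T.2 Emergency Intervention",
        "T.3 Environment",
    ],
    "VI. INCLUSION": ["I.1 Consumer Roles", "I.2 Family Roles", "I.3 Advocate Roles"],
    "VII. OVERSIGHT/WITNESSING": ["O.1 Elevating Oversight"],
}


def count_subdomains(domains):
    """Return the total number of subdomains across all domains."""
    return sum(len(subdomains) for subdomains in domains.values())


domain_count = len(ISRRI_DOMAINS)
subdomain_count = count_subdomains(ISRRI_DOMAINS)
print(domain_count, subdomain_count)
```

A structure of this kind could help a reviewer confirm that no subdomain has been skipped before submitting the worksheets.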

What kinds of measures are used?

The activities or individual items within the subdomains consist of a mixture of structural and process measures, as described in the classic work on quality in health care by Avedis Donabedian. “Structural” refers to characteristics of the organization or program. Examples of structural measures are the existence of a policy on S/R reduction, a training program for S/R reduction, or the availability of sensory rooms. “Process” refers to actions that are taken in the course of providing treatment services. Examples of process measures are the number of S/R events for which a debriefing was conducted as prescribed, or the number of consumers for whom risk assessments were made. The process measures are often expressed as a proportion or ratio, e.g., the percent of S/R episodes for which a debriefing was conducted.

The third class of quality measures in Donabedian’s terms is “outcome measures.” Structure and process measures are generally considered to be predictors of outcomes; that is, the degree to which necessary structural elements are in place and appropriate processes of care occur is expected to influence outcomes—in this context, the monthly rate for use of S/R. Because the SAMHSA S/R Reduction Program Evaluation Protocol will also measure outcomes, it will be possible to test the relationship of structure and process measures to outcomes.
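The process measure mentioned above, the percent of S/R episodes for which a debriefing was conducted, can be sketched as a short calculation. This is a minimal illustration with made-up counts; the function name and the handling of a period with no events are assumptions, not part of the ISRRI scoring algorithm.

```python
def percent_debriefed(total_events, debriefed_events):
    """Percent of S/R events followed by a debriefing.

    Returns None when there were no events, since the proportion
    is undefined in that case (an assumption of this sketch).
    """
    if total_events < 0 or debriefed_events < 0:
        raise ValueError("counts must be non-negative")
    if debriefed_events > total_events:
        raise ValueError("debriefed events cannot exceed total events")
    if total_events == 0:
        return None
    return 100.0 * debriefed_events / total_events


# Hypothetical example: 40 S/R events, 30 of them followed by a debriefing.
example_percent = percent_debriefed(40, 30)
print(example_percent)
```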

What are the plans for future development of the ISRRI?

The use of the ISRRI for purposes of the SIG grant evaluation represents a field test of the instrument. The reliability and predictive validity of the ISRRI will be tested during the data analysis phase. Using the information about reliability, validity, and feasibility obtained through these activities, the instrument will be revised and issued, upon completion of the SIG program, as a tested Seclusion and Restraint Reduction Fidelity Scale.

III. CONDUCTING THE ISRRI REVIEW

Who should conduct the review?

It is expected that the ISRRI will be conducted for every facility or program identified by grantees as participating in the SIG program. Optimally, a fidelity assessment is conducted by someone external to the program or organization, but knowledgeable about relevant issues. In the case of the ISRRI, however, this may not always be feasible, in which case it may be necessary for the review to be conducted by someone within the organization. In this situation, it is preferable that the reviewer at least be someone who is not directly involved in, or affected by, the S/R process or the reduction initiative. This is not a matter of ensuring honesty in reporting, but simply to avoid factors that inevitably exert an influence on responses. As noted previously, the ISRRI is designed to be as unambiguous and quantifiable as possible, but some degree of judgment in assigning scores is unavoidable, and the idea of external reviewers is to ensure the objectivity of that judgment.

To the same end, we recommend the use of multiple reviewers (at least two) for each facility, but again this is not likely to be feasible in all cases. However, the Coordinating Center will do all we can to support and enhance the review process. For example, some parts of the review can be done off-site, such as assessing policy statements and training curricula, and either NTAC or CSR may be able to provide some resources for that purpose.

We anticipate that, in most cases, multiple reviewers will participate, with the configuration varying by facility. In some cases, a given reviewer may be qualified to complete only some sections of the ISRRI, while another reviewer may be qualified to complete the other sections. In other cases, each of the multiple reviewers may be able to independently complete an entire ISRRI. This is a highly desirable situation that will allow for data cross-checks to ensure accuracy and completeness. Please note, however, that only one ISRRI should be entered on-line and submitted to CSR. Therefore, any discrepancies arising from differences in reviewer reporting within a facility or program should be resolved prior to submitting the ISRRI to CSR.

What are the sources of information for completing the ISRRI?

The following table describes the various sources for the information needed to complete the worksheets. Each item on the worksheet provides a space for noting the source of information.

Source of Information for ISRRI Worksheets

Source | Description |
Interviews | Consumers, consumer peer-advisors, family members, advocates, direct care staff, nursing staff, CEO, medical director, and other appropriate administrative staff, on-site or by telephone |
Direct observation | Facility tour, observation of meetings, etc., on-site |
Documents | State and facility level mission statements, policies and procedures, schedules and records of S/R reduction activities, action plans/program descriptions such as S/R reduction, trauma-informed care, recovery-oriented or strengths-based treatment planning |
Debriefing reports | Random selection of persons experiencing an S/R event |
Other relevant reports | Staff and consumer injuries, etc. |
Meeting records | Minutes, agendas, schedules, with participant lists |
Training materials | Curricula, course descriptions, course evaluations, schedules, numbers of people trained, numbers eligible |
Communication materials | Newsletters, handbooks, posters, etc. |
MIS reports relevant to S/R reduction | Information that facilities may gather and report (e.g., other demographic or clinical characteristics). |

What is the “baseline” measurement?

The SIG grant evaluation employs a pre-post design, i.e., it compares rates of seclusion and restraint before and after implementation of the grant-supported intervention. (More precisely, it compares before and after implementation of various components of the intervention). The analysis therefore will need to accommodate considerable variation among facilities as to the start date of implementation of these components, with multiple possibilities: some facilities are starting soon after receiving the grant while other grantee sites are choosing to implement the intervention sequentially in a number of facilities. Moreover, some facilities have implemented components of the intervention prior to receiving the grant, and by the end some facilities will have implemented some but not all of the components. A graphic representation of these possibilities is provided below.

| Facility/Component | Pre-Grant | Grant Year 1 | Grant Year 2 | Grant Year 3 |
|---|---|---|---|---|
| Facility A | | | | |
| Component 1 | | | | |
| Component 2 | | | | |
| Facility B | | | | |
| Component 1 | | | | |
| Component 2 | | | | |
| Facility C | | | | |
| Component 1 | | | | |
| Component 2 | | | | |

To identify the impact of each component upon the outcomes data (monthly S/R rates), it is necessary to know the date at which each component was implemented. The ISRRI worksheets are designed to capture that information. Thus there will not be a baseline in the sense of a single start-up date marking a pre-post line for all intervention components in all facilities. Instead each component at each facility will have an individual baseline, in the sense of a start-up point, and the analysis will thereby be able to measure the effect (changes in monthly S/R rates) of individual components across multiple facilities, regardless of when they were implemented.
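As a rough illustration of the per-component baseline, the Python sketch below splits one facility's monthly S/R rates at a component's start date and averages each side. The function name, the data layout, and the rates themselves are invented for illustration. The evaluation's actual statistical analysis is not specified here and would likely be more elaborate than a simple comparison of means.

```python
from datetime import date
from statistics import mean

def pre_post_means(monthly_rates, start_date):
    """Average the monthly S/R rates before, and from, a component's start date.

    monthly_rates: dict mapping the first day of each month to that month's rate.
    Months dated on or after start_date count as "post" (an assumption of this sketch).
    Returns (pre_mean, post_mean); a side with no months yields None.
    """
    pre = [rate for month, rate in monthly_rates.items() if month < start_date]
    post = [rate for month, rate in monthly_rates.items() if month >= start_date]
    return (mean(pre) if pre else None, mean(post) if post else None)

# Hypothetical rates for one facility (made-up numbers, not study data).
rates = {
    date(2007, 7, 1): 10.0,
    date(2007, 8, 1): 12.0,
    date(2007, 9, 1): 8.0,
    date(2007, 10, 1): 6.0,
}
# Assumed start date of one component at this facility.
pre_mean, post_mean = pre_post_means(rates, date(2007, 9, 1))
print(pre_mean, post_mean)
```

Because the split point is supplied per component and per facility, the same function can be applied to each facility/component pair regardless of when that component was implemented.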

What is the measurement period?

Initial Review

For a variety of reasons, both pragmatic and methodological, the ISRRI, like the other components of the Common Protocol, is designed to collect information retrospectively. That is, the type of information called for (e.g., a policy change or the introduction of S/R reduction tools) may be collected some months after the event actually occurred, assuming that some record exists or an interviewee can accurately recall it. This is particularly relevant to the first round of ISRRI reviews for each facility.

The initial review of the ISRRI is designed to capture information about the types of interventions in place within mental health facilities and programs prior to the grant award (October 2007). However, for external reasons, it was not possible to conduct the initial ISRRI review until some way into the first year, which is one reason the instrument is designed to capture information retrospectively. Most of the items on the ISRRI worksheets include an entry for the date on which that particular element was implemented: the exact date if known, or the timeframe if estimated.

For the initial ISRRI, reviewers are therefore asked to report only those interventions that were in place within their facility prior to the grant award (October 2007). For information derived from debriefing reports (Sections II and VII), the reports should be drawn from two measurement months, the first within 3 months of September 2006 and the second 2-3 months before October 2007 (see below for more discussion of selecting debriefing reports).

Subsequent Reviews

The subsequent reviews of the ISRRI will be completed on a near-annual basis throughout the grant cycle (September 2008, September 2009, and April 2010). These reviews will ask about any new interventions established during the past year.

What is the meaning of “date” for each item?

The implementation date is necessary because the amount of time any particular S/R reduction activity has been present, which may vary from one facility to another, is likely to influence the magnitude of the effect on rates of seclusion and restraint in the respective facilities. For example, the use of trauma assessments upon admission would hypothetically have a larger effect in a facility where it had been implemented one year prior to the assessment than in another where it had been in place for only one month. Likewise, the effect of an intervention such as a kick-off event hypothetically might diminish over time. This information, therefore, will help explain why S/R rates may vary from one facility to another.
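To make the one-year versus one-month comparison concrete, the length of time an item has been in place can be computed from its recorded start date. The sketch below is a minimal illustration. The function name and the choice to count whole months are assumptions, not part of the protocol.

```python
from datetime import date

def months_in_place(start_date, review_date):
    """Whole months between an item's start date and the review date (never negative)."""
    months = (review_date.year - start_date.year) * 12 + (review_date.month - start_date.month)
    if review_date.day < start_date.day:
        months -= 1  # the final month is not yet complete
    return max(months, 0)

# Hypothetical review date and two hypothetical start dates.
review = date(2008, 9, 15)
long_standing = months_in_place(date(2007, 9, 15), review)
recent = months_in_place(date(2008, 8, 15), review)
print(long_standing, recent)
```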

What is the procedure for selecting debriefing reports for review?

One of the ISRRI subdomains calls for reviews of debriefing reports. The recommended method for determining which reports should be reviewed is to randomly select a specified number from a particular timeframe. In practice, this process is complicated somewhat by the fact that the number of charts and S/R events varies widely among facilities. Accordingly, we offer the guidelines below, to be followed as closely as possible by reviewers. The guiding consideration is that the documents reviewed be representative, that is, that they accurately reflect current practice in the facility. Any departure from these guidelines should therefore take into consideration whether it might make the selection less representative.

The procedure for random selection, and the selection itself, should be done in advance of the review in coordination with the contact person at the facility.

The preferred method for selecting reports is for the facility to provide a de-identified list (i.e., a list of reports identified by some code, which is retained by the facility) from which the reviewer, using some method of randomization, selects a specified number to review. A number of random number generators are available on the Web, for example, http://www.randomizer.org. The reviewer needs only to recode the original list as sequential numbers (unless that is how the facility coded them in the first place), and then enter the range of numbers and the required quantity to be selected. The randomizer produces a randomized list of the specified quantity of numbers, which can then be matched to the original codes, and this list is returned to the facility so that those debriefing reports can be made available at the time of the review. This method avoids the possibility of bias, either in the debriefing reports presented by the facility or in some ordering of debriefing reports that might affect the results. For example, if the first five debriefing reports are drawn from a list that turns out to be ordered chronologically, those selected might not represent current practice at the facility.

We recommend reviewing at least five debriefing reports per measurement period. This number is determined primarily on the basis of feasibility, however, and reviewing more, if possible, would increase the likely accuracy of findings.
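For reviewers who prefer a local tool to a Web-based randomizer, the same draw can be sketched with Python's standard `random` module. This is an alternative illustration, not the method the protocol prescribes. The function name, the report codes, and the seed value are all hypothetical.

```python
import random

def select_reports(codes, k=5, seed=None):
    """Randomly pick k report codes without replacement (all of them if fewer than k)."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    codes = list(codes)
    return rng.sample(codes, min(k, len(codes)))

# Hypothetical de-identified codes supplied by a facility.
facility_codes = [f"R{n:03d}" for n in range(1, 21)]
chosen = select_reports(facility_codes, k=5, seed=2007)
print(chosen)
```

Sampling the facility's codes directly removes the need to recode the list as sequential numbers, and recording the seed lets the selection be reproduced later if questions arise.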

Two sections of the initial ISRRI (Sections II and VII) call for a review of debriefing reports drawn from two measurement months, the first within 3 months of September 2006 and the second 2-3 months before October 2007. There may be reasons for altering this, however. First, some facilities may have fewer than five events in those months, in which case they should start with the given month and then go back far enough in time to select the five most recent events, without randomization. Second, those particular months may be anomalous for some reason, for example an unrelated policy initiative that temporarily competes with S/R reduction or some event affecting staffing levels, in which case it is acceptable to select from another month. The alternative month should be within three months prior to the recommended measurement month, and the reason for deviating from the recommendation should be documented.
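The rule for months with too few events can be sketched as follows. This is a hedged illustration only: the function name, the event codes, and the dates are invented, and the sketch does not model the separate exception for anomalous months.

```python
import random
from datetime import date

def choose_events(events, month_start, month_end, k=5, seed=None):
    """Pick k events for review. events is a list of (code, event_date) pairs."""
    in_month = [e for e in events if month_start <= e[1] <= month_end]
    if len(in_month) >= k:
        # Enough events in the measurement month: draw k at random.
        return random.Random(seed).sample(in_month, k)
    # Too few: take the k most recent events up to the end of the month,
    # going back in time, without randomization.
    up_to_month = sorted((e for e in events if e[1] <= month_end),
                         key=lambda e: e[1], reverse=True)
    return up_to_month[:k]

# Hypothetical events: two in September 2006, four in earlier months.
events = [
    ("E1", date(2006, 5, 3)), ("E2", date(2006, 6, 11)), ("E3", date(2006, 7, 20)),
    ("E4", date(2006, 8, 2)), ("E5", date(2006, 9, 5)), ("E6", date(2006, 9, 19)),
]
picked = choose_events(events, date(2006, 9, 1), date(2006, 9, 30))
print([code for code, _ in picked])
```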

IV. ISRRI WORKSHEET DESCRIPTION

Worksheet Layout

Organization of worksheets:

The worksheets are organized according to the domains representing components of the S/R Reduction initiative: 1) Leadership; 2) Debriefing; 3) Use of Data; 4) Workforce Development; 5) Tools for Reduction; 6) Consumer/Family/Advocate Involvement; 7) Elevating Oversight/Witnessing.

Each of the Domain Worksheets consists of the following elements:

Name of domain.

Separate subdomains representing specific activities within the domains.

Description for domain.

Method to be used (e.g., random selection) for some items as needed

A checklist for specific items, indicating whether or not they are present or have occurred. In some cases this additionally calls for a frequency or percent of that item’s occurrence.

The source of information to address the item.

A space to indicate the date of implementation or, if precise date is unavailable, the general time frame of implementation (date range).

A space to indicate the end date of implementation if an action ended during the grant period.

A space to describe a reason for change if an ISRRI is modified in the future. This element need only be completed if a respondent is modifying a previously submitted ISRRI.

A space for comment on any aspect of the information or the collection process.
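For sites that track worksheet responses electronically, the elements listed above could be held in a simple record. The sketch below is one possible layout. Every field name is hypothetical and the actual ISRRI data format is not described here.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class WorksheetItem:
    """One checklist item on a domain worksheet (illustrative field names only)."""
    domain: str
    subdomain: str
    item: str
    present: bool = False                    # checklist box: present / has occurred
    source: str = ""                         # source of information
    start_date: Optional[date] = None        # precise implementation date, if known
    date_range: Optional[str] = None         # general time frame, if date is unavailable
    end_date: Optional[date] = None          # only if the action ended
    reason_for_change: Optional[str] = None  # only when modifying a prior submission
    comment: str = ""

    def has_timing(self) -> bool:
        """True if either a precise start date or a date range has been recorded."""
        return self.start_date is not None or self.date_range is not None

# Hypothetical entry for illustration.
example = WorksheetItem(
    domain="Tools for Reduction",
    subdomain="Section 3",
    item="The facility is characterized by a sensory/comfort room.",
    present=True,
    source="Facility tour",
    start_date=date(2007, 3, 1),
)
print(example.has_timing())
```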

|

Domain and Subdomain Name and Number |

||

□ |

Item |

||

|

Source of information: |

||

Start Date: / / or: |

Date Range: (given as 6-month interval choices from a drop-down menu) |

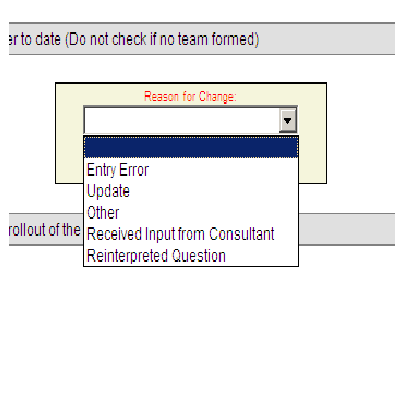

Reason for Change: |

|

|

|||

|

End Date: / / |

||

Template for layout of ISRRI worksheets

Worksheet Item Response Categories

Start Date

Each item asks for the start date of implementation (preferred) or the time period of implementation (if the precise date is unavailable). The purpose is to determine the length of time that a particular practice has been in place, and therefore the extent to which it may have contributed to current rates of seclusion and restraint.

For some types of items, for example a policy, the date would be that at which the policy was implemented. For other types of items, the date may be more difficult to determine precisely, but the response should be the date at which that practice became established. This decision may be to some extent at the reviewer’s discretion, in which case this information should be entered in the “comments” section of the worksheet.

Some states or facilities may have implemented some aspects of the NTAC Core Strategies prior to receiving the grant in October 2007. The initial ISRRI review will ask respondents to report only on those practices that were established prior to October 2007 (the initiation of the SIG grant project). Thus, the reported start date must precede October 2007. For the subsequent ISRRI reviews, the date will indicate at what point the particular practice was put into place during the past year, and therefore the extent of its expected effect on seclusion and restraint rates. Having this information allows for cross-site comparison of the effectiveness of S/R reduction initiatives, even though some sites may be further along than others in implementing the reduction strategies.

End Date

This field is not required. It is included in the ISRRI because, over the course of the project, an action that was previously implemented may be discontinued. If your facility has ceased an action, please note the date or estimated date in this box using mm/dd/yyyy format. The End Date box is also intended for actions that spanned several days or months, such as the “kickoff” celebration, which at some facilities lasted one day but at others lasted several days. If your kickoff lasted one day, ignore the end date. If your kickoff celebration spanned several days, please note the end of the celebration in the End Date box.

Reason for Change

For first round ISRRIs (i.e., the first time a reviewer completes the inventory and submits it) ignore the Reason for Change category. This feature is intended to help the evaluators understand the facility/user relationship to technical assistance as well as the ISRRI form and format.

If a reviewer is modifying a previously submitted ISRRI, the reviewer should provide a reason for changing a response based on but not limited to the following reason options.

Reason for Change Options:

Entry Error- Choose this option if an item had missing, omitted, or incorrect data that the reviewer has now corrected. For example, if a start date was missing and later provided, the reviewer would choose this option.

An unchecked item is read as though the action never occurred. An item that was marked as implemented, then later unchecked with a reason for change provided is understood to be an entry error.

Update- If an item was not previously marked as having been implemented, the reviewer may note that it has now been implemented or is being implemented by entering the relevant information and choosing this reason for changing the status of the item. Please note that if implementation has ended for an item, it is preferable that the respondent note an End Date rather than uncheck the item. As noted above, an unchecked item is interpreted as the action never having taken place.

Received input from Consultant- Some items may be interpreted in different ways. For example, consider item 1 in Section 3 of the Tools for Reduction worksheet: “The facility is characterized by a sensory/comfort room.” If a facility has a comfort room but that comfort room is locked, one reviewer may interpret that facility as not having a comfort room, while another reviewer may believe that the facility is characterized by having a comfort room. If the Common Protocol or the question itself does not fully explain an item, we encourage facilities to speak with their consultants and/or the evaluation team. NTAC and CSR will be able to help answer such questions in a systematic way. In a case such as this example, a respondent would use this Reason for Change option.

Reinterpreted Question- This option is similar to Received input from Consultant but refers to cases in which the respondent has reinterpreted a question without the aid of a consultant. All efforts have been made to provide facilities with the necessary information to complete the evaluation tasks. If a reviewer reads the Common Protocol and other instructions fully, we hope that questions will be interpreted in the manner in which they were intended.

Other- For other reasons and unforeseen circumstances, respondents may choose this Reason for Change option if the other options do not apply.

V. OBTAINING SUPPORT FOR COMPLETING THE ISRRI WORKSHEETS

Any questions or problems in completing the worksheets may be addressed to any member of the evaluation team at CSR. The SIG Web site includes a link to send a request for assistance or further information. Emails can also be sent directly to seclusion-restraint.info@csrincorporated.com. We encourage such contact in order to ensure high-quality data and consistency in the reviews, and we will respond rapidly.

We appreciate your contribution to this important effort to assess the effectiveness of interventions to reduce the use of seclusion and restraint in facilities providing mental health treatment.

1 http://mentalhealth.samhsa.gov/cmhs/communitysupport/toolkits

2 http://rccp.cornell.edu/pdfs/SR%20Checklist%201-Colton.pdf

| File Type | application/msword |

| File Title | ATTACHMENT D |

| Author | daniel falk |

| Last Modified By | daniel falk |

| File Modified | 2008-07-08 |

| File Created | 2008-07-08 |
