PACE Followup OMB Part B - Revised August 2015_clean


Pathways for Advancing Careers and Education (PACE)

OMB: 0970-0397

Supporting Statement for OMB Clearance Request

Part B: Statistical Methods

Pathways for Advancing Careers and Education (PACE) – Second Follow-up Data Collection

OMB No. 0970-0397

Revised August 2015

Submitted by:

Brendan Kelly

Office of Planning, Research and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

Supporting Statement for OMB Clearance Request – Part B: Statistical Methods

Table of Contents

B.1 Respondent Universe and Sampling Methods

B.1.1 PACE Programs and Study Participants

B.2 Procedures for Collection of Information

B.2.4 Degree of Accuracy Required

B.2.5 Who Will Collect the Information and How It Will Be Done

B.2.6 Procedures with Special Populations

B.3 Methods to Maximize Response Rates and Deal with Non-response

B.3.1 Participant Tracking and Locating

B.3.2 Tokens of Appreciation

B.3.3 Sample Control during the Data Collection Period

B.4 Tests of Procedures or Methods to be Undertaken

B.5 Individuals Consulted on Statistical Aspects of the Design

Introduction

This document presents Part B of the Supporting Statement for the 36-month follow-up data collection activities that are part of the Pathways for Advancing Careers and Education (PACE) evaluation, sponsored by the Office of Planning, Research and Evaluation (OPRE) in the Administration for Children and Families (ACF) in the U.S. Department of Health and Human Services (HHS).1 (For a description of prior data collection activities, see the previous information collection request (OMB No. 0970-0397), approved August 2013.)

B.1 Respondent Universe and Sampling Methods

For the 36-month follow-up data collection, the respondent universe for the PACE evaluation includes PACE study participants.

B.1.1 PACE Programs and Study Participants

The PACE study recruited sites that had innovative career pathways programs in place and could implement random assignment tests of these programs. Program selection began with conversations between key stakeholders and the PACE research team. Each program selected into PACE satisfied criteria in three categories:

Programmatic criteria, which reflect the career pathways framework and include assessments, basic skills and occupational instruction, support-related services, and employment connections;

Technical criteria, which reflect the statistical requirements of the evaluation design, such as the capacity to serve a minimum of 500 participants and to recruit a minimum of 1,000 eligible applicants over a two-year enrollment period; and

Research capacity criteria, which address the site’s ability to implement an experimental evaluation.

Additionally, ACF required that three of the programs be Health Profession Opportunity Grant (HPOG) recipients.2

The nine programs selected all promote completion of certificates and degrees in high-demand occupations and, to this end, incorporate multiple steps on the career ladder, with college credit or articulation agreements available for completers of the lower rungs (see Appendix A for a depiction of the career pathways theory of change). The programs vary in specific strategies and target populations, but all provide some level of the core career pathways services (assessment, instruction, supports, and employment connections), with the emphasis on each varying by program. Appendix B provides summaries of the nine PACE programs.

The PACE program selection process spanned a period of over two years and included detailed assessments of more than 200 potential programs. After winnowing down the list of prospective programs based on a combination of factors, such as the intervention, its goals, the primary program components, program eligibility criteria, and the number of participants that enroll in the program annually, the PACE team recruited nine promising career pathways programs into the study.

The universe of potential respondents is low-income adults (age 18 or older) who are interested in occupational skills training and who reside in the geographic areas where PACE sites are located. The target sample size in eight of the nine sites ranges from 500 to roughly 1,200, with most near 1,000, divided equally between the two research groups (about 500 each). The ninth site has an estimated sample of 2,540 across eight sub-sites, with 1,695 in the treatment group and 845 in the control group.

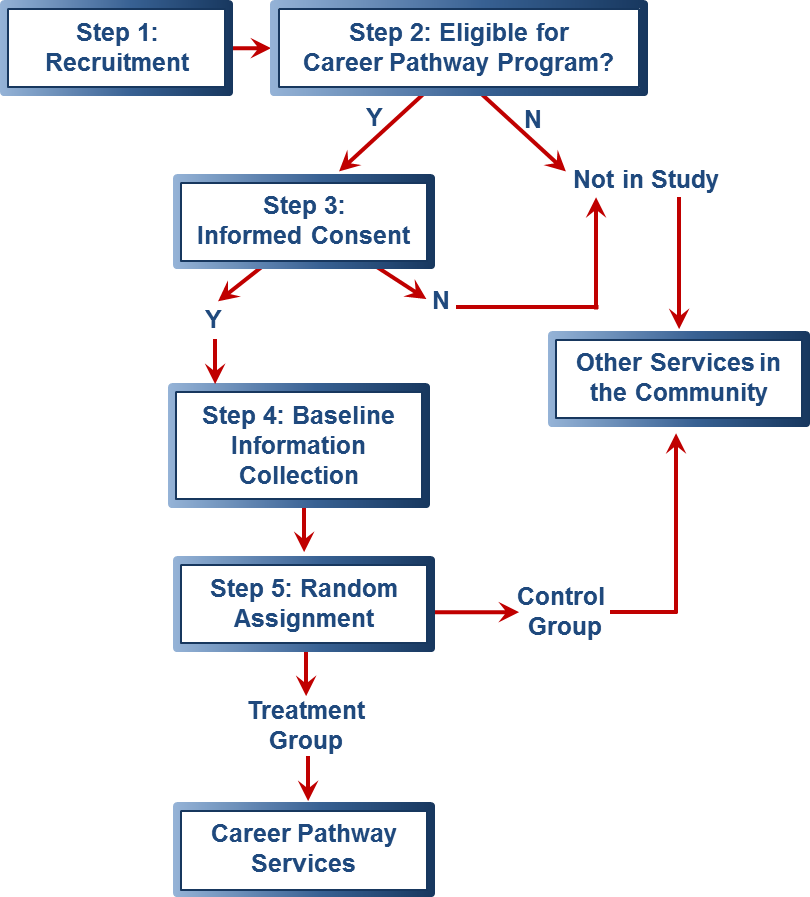

PACE study participants will be the subjects of the data collection instruments for which this OMB package requests clearance (the 36-month follow-up survey and the 36-month tracking letters). The respondent universe for both instruments is all study participants in the treatment and control groups. Program staff recruit individuals, determine eligibility, and obtain informed consent from eligible individuals who volunteer to be in the study. The specific steps are as follows. Program staff inform eligible individuals about the study. Staff then administer the participant agreement form, which describes the study; individuals who wish to participate in the evaluation must sign it. Those who refuse to sign the consent form are not included in the study and are not eligible for the career pathways program; they receive information about other services in the community. Appendix G contains two PACE Participation Agreements: one for PACE sites that are HPOG grantees and one for PACE sites with no HPOG funds. These agreements were approved in November 2011 under OMB No. 0970-0397.

For those who consent, program staff collect baseline data, which include the Basic Information Form (BIF) and the Self-Administered Questionnaire (SAQ), and the research team collects follow-up data with the 15-month follow-up survey. OMB approved these forms under the previous request for clearance (OMB No. 0970-0397). Program staff (and, in the case of the Year Up site, participants) enter information from the BIF into a web-based system developed specifically for the evaluation. Staff then use the system to conduct random assignment to the treatment or control group. Those assigned to the treatment group are offered the program's services, while those assigned to the control group cannot participate in the program but can access other services in the community. Exhibit B-1 summarizes this process.

Exhibit B-1. PACE Study Participant Recruitment and Random Assignment Process

B.1.3 Target Response Rates

Overall, the research team expects response rates in this study to be high enough to produce valid and reliable results that can be generalized to the study universe. The response rate for the baseline data collection is 100 percent. The expected response rate for both the 15-month follow-up survey (currently underway) and the 36-month follow-up survey is 80 percent, based on experience in other studies with similar populations and follow-up intervals.

Based on response rates to date, the PACE team expects to achieve an 80 percent response rate on the 15-month survey. The following table shows response rates for cohorts released to date.

| Random Assignment Month (Cohort) | Number of Cases | Percent of Total Sample | Expected Response Rate | Response Rate to Date |
|---|---|---|---|---|
| November 2011 | 48 | 1% | 75% | 75% |
| December 2011 | 67 | 1% | 78% | 78% |
| January 2012 | 69 | 1% | 64% | 64% |
| February 2012 | 73 | 1% | 77% | 77% |
| March 2012 | 79 | 1% | 72% | 72% |
| April 2012 | 130 | 1% | 77% | 77% |
| May 2012 | 132 | 1% | 80% | 80% |
| June 2012 | 170 | 2% | 81% | 81% |
| July 2012 | 209 | 2% | 74% | 74% |
| August 2012 | 305 | 3% | 77% | 74% |
| September 2012 | 145 | 2% | 77% | 68% |
| October 2012 | 202 | 2% | 80% | 70% |
| November 2012 | 238 | 3% | 80% | 67% |
| December 2012 | 220 | 2% | 80% | 57% |
| January 2013 | 356 | 4% | 80% | 53% |
| February 2013 | 363 | 4% | 81% | 36% |
| March 2013 | 319 | 3% | 81% | 21% |
| April 2013 | 330 | 4% | 81% | 4% |
| Total Sample Released to Date | 3,455 | 37% | 79% | 55% |
| Total Projected Sample | 9,232 | 100% | 80% | |
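The summary rows of the table are case-weighted averages of the monthly cohort rates. As a minimal check, the Python sketch below recomputes the closed-cohort and overall figures from the table's rows, treating the nine cohorts from November 2011 through July 2012 as closed. The `weighted_rate` helper is an illustrative name, not part of the survey team's systems.

```python
# Cohort rows from the table: (random assignment month, cases, response rate to date)
COHORTS = [
    ("2011-11", 48, 0.75), ("2011-12", 67, 0.78), ("2012-01", 69, 0.64),
    ("2012-02", 73, 0.77), ("2012-03", 79, 0.72), ("2012-04", 130, 0.77),
    ("2012-05", 132, 0.80), ("2012-06", 170, 0.81), ("2012-07", 209, 0.74),
    ("2012-08", 305, 0.74), ("2012-09", 145, 0.68), ("2012-10", 202, 0.70),
    ("2012-11", 238, 0.67), ("2012-12", 220, 0.57), ("2013-01", 356, 0.53),
    ("2013-02", 363, 0.36), ("2013-03", 319, 0.21), ("2013-04", 330, 0.04),
]

def weighted_rate(cohorts):
    """Case-weighted response rate: total completes divided by total released cases."""
    cases = sum(n for _, n, _ in cohorts)
    completes = sum(n * rate for _, n, rate in cohorts)
    return cases, completes / cases

# ISO-style month strings compare correctly as text
closed = [c for c in COHORTS if c[0] <= "2012-07"]
n_closed, rate_closed = weighted_rate(closed)
n_all, rate_all = weighted_rate(COHORTS)
print(n_closed, round(rate_closed * 100))  # 977 76
print(n_all, round(rate_all * 100))        # 3455 55
```

Because the cohort rates in the table are rounded to whole percentages, a recomputation like this can only approximate the survey team's unrounded figures.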

As the table shows, the PACE survey sample is released in monthly cohorts 15 months after random assignment. As of August 4, 2014, the overall response rate is 55 percent, but this figure mixes finalized cohorts with cohorts released within the past few weeks, for which survey work has just started. The nine earliest monthly cohorts (November 2011 through July 2012), comprising 977 sample members, are closed. The overall response rate for these completed cohorts is about 76 percent. These cohorts were released before a contact information update protocol was approved and adopted. That the survey team obtained a 76 percent response rate with these cohorts without updated contact information gives ACF and the survey team confidence that an 80 percent response rate will be achieved overall. Although none of the cohorts for which the contact information update protocol was used have been completed, the survey team has achieved higher completion rates in the first few months of the survey period than it did for the earlier cohorts. For the later cohorts, the survey team obtained a 35 to 40 percent response rate in the telephone center in the first eight weeks; this is generally the threshold for transferring cases to the field interviewing team. With the earlier cohorts (those without contact updates), the survey team took twice as long (16 weeks) to reach this benchmark. The survey team expects response rates above 80 percent for the later cohorts, offsetting the 76 percent rate in the early cohorts so that the study reaches its 80 percent goal.
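The size of the required offset can be worked out directly. Assuming the 977 closed cases finish at 76 percent and the full projected sample of 9,232 is fielded, the remaining 8,255 cases need a response rate r such that 0.76 × 977 + r × 8,255 = 0.80 × 9,232. A small Python sketch of that arithmetic (the function name is hypothetical):

```python
def required_rate(target, total, done_cases, done_rate):
    """Response rate the not-yet-closed sample must reach so that the
    overall case-weighted rate equals `target`."""
    remaining = total - done_cases
    return (target * total - done_rate * done_cases) / remaining

r = required_rate(target=0.80, total=9232, done_cases=977, done_rate=0.76)
print(f"{r:.1%}")  # 80.5%
```

Under these assumptions, the later cohorts need to average only about half a percentage point above the 80 percent goal.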

B.2 Procedures for Collection of Information

B.2.1 Sample Design

The target sample size for the PACE study is 9,232 individuals. Eight of the nine study sites will ultimately recruit between 500 and 1,200 individuals who are interested in career pathways services and agree to participate in the study. The ninth site, the Year Up program, will recruit about 2,540 individuals. In the eight sites, half of the sample members in each site will be assigned to the treatment group to receive the career pathways intervention, and the other half will be assigned to a control group. In Year Up, the split will be two-thirds treatment and one-third control. Sample members assigned to the control group will have access to all other services available in the community.
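The document does not specify the randomization algorithm used by the web-based system. As one illustration only, permuted-block randomization can enforce the 1:1 ratio used in eight sites and the 2:1 ratio used in Year Up. The Python sketch below assumes blocks of size t + c and a seeded generator; `block_assign` is a hypothetical name, not the evaluation's actual system.

```python
import random

def block_assign(n, ratio=(1, 1), seed=None):
    """Permuted-block random assignment for n enrollees (illustrative).
    ratio=(t, c): each block holds t treatment and c control slots in random
    order, so (1, 1) gives a 50/50 split and (2, 1) a two-thirds/one-third split."""
    rng = random.Random(seed)
    t, c = ratio
    assignments = []
    while len(assignments) < n:
        block = ["treatment"] * t + ["control"] * c
        rng.shuffle(block)  # randomize order within the block
        assignments.extend(block)
    return assignments[:n]  # the final block may be only partially used

year_up = block_assign(2540, ratio=(2, 1), seed=11)
print(year_up.count("treatment"), year_up.count("control"))
```

Blocking keeps the realized split close to the target ratio throughout enrollment; with simple unblocked assignment, the split would match the target only in expectation.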

All randomly assigned individuals will be included in participant tracking and follow-up data collection. For the 36-month follow-up survey, the research team will attempt to contact and interview all members of the study sample (9,232 individuals) and expects to complete interviews with 80 percent of them (7,386). Therefore, no sampling is required among PACE study participants for the tracking or the follow-up survey. However, the 36-month survey includes a parenting and child outcome module, for which the research team plans to sample one focal child per household.

B.2.2 Sampling Plan for Study of Impacts on Child Outcomes

To assess program impacts on children, the survey will include a set of questions for respondents with children. The child module will be administered to all respondents who report at least one child between the ages of 3 and 18 in the household who has lived with the respondent more than half the time during the 12 months before the survey. Each household will be asked about a single focal child, regardless of how many children are eligible. The sampling plan calls for selecting approximately equal numbers of children from each of three age categories: preschool-age children aged 3 through 5 and not yet in kindergarten; children in kindergarten through grade 5; and children in grades 6 through 12. The procedure for selecting a focal child from each household will depend on which age categories are present in the household. Specifically, there are seven possible configurations of age groups in households with at least one eligible child:

1. Preschool child(ren) only

2. Child(ren) in K–5th grade only

3. Child(ren) in 6th–12th grade only

4. Preschool and K–5th grade children

5. K–5th grade and 6th–12th grade children

6. Preschool and 6th–12th grade children

7. Preschool-age, K–5th grade, and 6th–12th grade children

The sampling plan is as follows:

For household configurations 1, 2, and 3, only one age group will be sampled; if there are multiple children in that age group, one child will be selected at random.

For household configurations 4 and 5, a K–5th grade child will be selected from 30 percent of households, and a child from the other age category in the household (preschool-age or 6th–12th grade) will be selected from 70 percent of households.

For household configuration 6, a preschool child will be selected from 50 percent of households, and a child in 6th–12th grade will be selected from 50 percent of households.

For household configuration 7, a K–5th grade child will be selected from 20 percent of households, a preschool-age child from 40 percent of households, and a 6th–12th grade child from 40 percent of households.

Sampling weights will be used to account for the differential sampling ratios for some child age categories in some household configurations. By applying the sampling weights, the sample for estimating program impacts on children will represent the distribution of the seven household configurations among study households.
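The selection rules and weights can be sketched together. In this Python sketch, the age-group labels, `GROUP_PROBS`, and `select_focal_child` are hypothetical names; the probabilities follow the sampling plan above. The weight returned is the inverse of the chosen child's overall selection probability (age-group probability times the chance of being picked among eligible children in that group). Including the within-group factor is an assumption: the plan itself only says weights will account for differential age-group sampling ratios.

```python
import random

# Age-group labels (illustrative names)
PRE, K5, G612 = "preschool", "K-5", "6-12"

# Probability of sampling each age group, keyed by the set of age groups present
# in the household. Percentages follow the sampling plan; the layout is hypothetical.
GROUP_PROBS = {
    frozenset({PRE}): {PRE: 1.0},                                # configuration 1
    frozenset({K5}): {K5: 1.0},                                  # configuration 2
    frozenset({G612}): {G612: 1.0},                              # configuration 3
    frozenset({PRE, K5}): {K5: 0.3, PRE: 0.7},                   # configuration 4
    frozenset({K5, G612}): {K5: 0.3, G612: 0.7},                 # configuration 5
    frozenset({PRE, G612}): {PRE: 0.5, G612: 0.5},               # configuration 6
    frozenset({PRE, K5, G612}): {K5: 0.2, PRE: 0.4, G612: 0.4},  # configuration 7
}

def select_focal_child(children, rng):
    """children: list of (child_id, age_group) for eligible children in one household.
    Returns (child_id, age_group, weight), where weight is the inverse of the
    chosen child's overall selection probability."""
    probs = GROUP_PROBS[frozenset(group for _, group in children)]
    groups = list(probs)
    group = rng.choices(groups, weights=[probs[g] for g in groups])[0]
    in_group = [cid for cid, g in children if g == group]
    child_id = rng.choice(in_group)  # equal-probability pick within the sampled age group
    p_child = probs[group] / len(in_group)
    return child_id, group, 1.0 / p_child

rng = random.Random(2015)
household = [("c1", PRE), ("c2", K5), ("c3", G612)]  # configuration 7
print(select_focal_child(household, rng))
```

Weighting each sampled child by the inverse of its selection probability is what lets the weighted child sample reflect the mix of household configurations among study households.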

B.2.3 Estimation Procedures

The research team will use a variety of estimation techniques. The primary analysis of treatment effects on 36-month outcomes will be intent-to-treat; that is, it will estimate effects on those who are offered access to the program, whether or not they participate. Given the rich set of baseline data collected, regression analysis will be used to improve the precision of the estimates while preserving their unbiasedness. The estimates of precision presented in the next section assume such regression adjustments, with precision gains based on those obtained in similar studies such as the National Job Training Partnership Act (JTPA) evaluation (Orr et al. 1996). The research team is also developing plans for an exploratory study of sources of variation in outcomes. Some of the estimation techniques used in that study will rely not on randomization for inference but on the completeness of baseline covariates to remove selection bias.
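A regression-adjusted intent-to-treat estimate is the coefficient on the random assignment indicator in a regression of the outcome on that indicator and baseline covariates. The self-contained Python sketch below illustrates the idea with ordinary least squares solved from the normal equations. The function names, the simulated data, and the use of plain OLS (rather than whatever specification the research team ultimately adopts) are assumptions for illustration.

```python
import random

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting.
    X is a list of rows; assumes X'X is nonsingular."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [ar - f * ac for ar, ac in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]

def itt_impact(outcomes, assigned, covariates):
    """Regression-adjusted ITT impact: the coefficient on the assignment
    indicator in a regression of the outcome on [1, assignment, baseline covariates]."""
    X = [[1.0, float(t)] + [float(c) for c in cov] for t, cov in zip(assigned, covariates)]
    return ols(X, outcomes)[1]

# Simulated example: one standardized baseline covariate, true impact of 300
rng = random.Random(42)
n = 1000
baseline = [rng.gauss(0, 1) for _ in range(n)]
assigned = [rng.random() < 0.5 for _ in range(n)]
outcome = [2500 + 300 * t + 800 * x + rng.gauss(0, 1500) for t, x in zip(assigned, baseline)]
est = itt_impact(outcome, assigned, [[x] for x in baseline])
print(round(est))
```

Because assignment is random, the covariates are not needed for unbiasedness; they absorb outcome variance that would otherwise inflate the standard error of the treatment coefficient.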

B.2.4 Degree of Accuracy Required

The baseline data will be used in conjunction with follow-up survey data and administrative data to estimate impacts of career pathways interventions. The research team has estimated the minimum detectable impacts (MDIs) for these analyses. The MDI is the smallest true impact that the study has an 80 percent probability of detecting when the test of the hypothesis of “no impact” has only a 10 percent chance of finding an impact if the true impact is zero.

Exhibit B-2 shows MDI estimates for three sample sizes: one for the typical PACE site (500 each in the treatment and control groups), one for smaller PACE sites (300 each in the treatment and control groups), and one for Year Up (1,695 treatment, 845 control). MDIs are displayed for the outcomes treated as confirmatory at the 15-month follow-up point (percent with substantial progress in career pathways training) and at the 36-month point and beyond (average quarterly earnings).

Exhibit B-2. Minimum Detectable Impacts for Confirmatory Hypotheses

| Statistic | % with Substantial Educational Progress (Confirmatory at 15 Months) | Average Quarterly Earnings (Confirmatory at 36 and 60 Months) |
|---|---|---|
| MDE for sample sizes with: | | |
| 500 T: 500 C (most programs) | 6.2 | $324 |
| 300 T: 300 C (recruitment shortfall) | 8.0 | $418 |
| 1,695 T: 845 C (Year Up) | 4.1 | $207 |
| Control group mean | 50.0 | $2,863 |

Note: MDEs are based on 80% power with a 10% significance level in a one-tailed test, assuming baseline variables explain 15% of the variance in the binary outcome and 30% of the variance in earnings. We have conservatively set the variance for the binary outcome at the highest possible level for a dichotomous outcome, 0.25 (based on the assumption that 50% of the control group experiences the outcome). The variance estimate for earnings in all three sample size categories comes from special tabulations of survey data from the second follow-up year of a small random assignment test of Year Up.3
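The MDIs in Exhibit B-2 can be reproduced with the standard formula for a one-tailed test comparing two groups: MDI = (z(0.90) + z(0.80)) × sqrt((1 − R²) × (σT²/nT + σC²/nC)). The Python sketch below applies it with the parameters in the note above. One input is an assumption not stated in the document: quarterly earnings standard deviations are taken to be one-quarter of the annual figures in footnote 3 ($12,748 treatment, $10,160 control). Under that assumption, the sketch matches all six values in the exhibit; the function name `mdi` is illustrative.

```python
from math import sqrt
from statistics import NormalDist

def mdi(n_t, n_c, var_t, var_c, r2, alpha=0.10, power=0.80):
    """Minimum detectable impact for a one-tailed test:
    (z_{1-alpha} + z_{power}) * SE, with regression-adjusted
    SE = sqrt((1 - R^2) * (var_t / n_t + var_c / n_c))."""
    z = NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(power)
    return z * sqrt((1 - r2) * (var_t / n_t + var_c / n_c))

designs = {"500 T: 500 C": (500, 500), "300 T: 300 C": (300, 300), "1695 T: 845 C": (1695, 845)}
for label, (nt, nc) in designs.items():
    # Binary outcome: variance 0.25 in both groups, R^2 = 0.15, reported in percentage points
    binary = 100 * mdi(nt, nc, 0.25, 0.25, r2=0.15)
    # Earnings: assumed quarterly SD = annual SD / 4 for each group, R^2 = 0.30
    earnings = mdi(nt, nc, (12748 / 4) ** 2, (10160 / 4) ** 2, r2=0.30)
    print(f"{label}: {binary:.1f} points, ${earnings:,.0f}")
# 500 T: 500 C: 6.2 points, $324
# 300 T: 300 C: 8.0 points, $418
# 1695 T: 845 C: 4.1 points, $207
```

Using separate treatment and control variances matters only for the unbalanced Year Up design; with equal group sizes, it gives the same result as a pooled variance.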

The research team expects these MDIs to be small enough to detect impacts likely to be policy relevant in each site. Recent evaluations with positive findings provide a range of estimates for impacts on pertinent postsecondary training outcomes and earnings. Estimates are available for two PACE programs. A non-experimental analysis of I-BEST found impacts of more than 20 percentage points on certificate or degree receipt but did not find statistically significant impacts on earnings (Zeidenberg et al. 2010). An experimental evaluation of Year Up (Roder and Elliott 2011) found positive second-year impacts on college attendance of six percentage points (not statistically significant) and on average annual earnings of $3,641 (statistically significant).

Other recent experiments have tested related innovations operated by community colleges and community-based organizations. The community college experiments generally test narrower and typically shorter interventions than PACE, such as enhanced guidance and student supports, performance-based scholarships, and short-term learning communities aimed at developmental education students. As a result, impact analyses to date have focused on more incremental outcomes such as semester-to-semester persistence and credits earned. Statistically significant findings have tended to be in the 5 to 10 percentage point range, and initial findings in this range have led to broader demonstrations.4 The Sectoral Employment Impact Study, an experiment testing short-term customized training by community-based organizations, found average impacts of $4,011 on second-year earnings but did not analyze postsecondary training impacts (Maguire et al. 2010).

Looking further back, a 2001 meta-analysis of 31 government-sponsored voluntary training programs from the 1960s to 1990s (Greenberg et al. 2003) collected impacts on annual earnings and converted them into 1999 dollars. Adjusting their figures to 2012 dollars using the 37 percent increase in the CPI, the average intervention effect was $1,417 for women and just $318 for men (though estimates for men are substantially larger, $1,365, when restricted to random assignment studies).5

B.2.5 Who Will Collect the Information and How It Will Be Done

The second follow-up survey will be administered 36 months after enrollment in the study and random assignment. The PACE data collection team will send tracking letters at four-month intervals, starting in month 19. The tracking letter asks study participants to update their own contact information as needed, as well as that of the three contacts listed on the Basic Information Form. Study participants will receive a $2 prepayment with each tracking letter to thank them for their time. In the 36th month, approximately one week before the sample for a particular cohort is released, the data collection team will send study participants an advance letter (see Appendix H) reminding them of their participation in the PACE study and informing them that they will soon receive a call from a PACE interviewer who will want to interview them over the telephone. The letter will remind sample members that their participation is voluntary and that they will receive $40 upon completing the interview. The advance letter will also include $5 to thank them in advance for participating, as an extensive literature documents the effectiveness of prepayments in increasing response rates (see discussion in Part A). Additionally, we are seeking approval to obtain explicit permission to communicate with study participants via text message, using the cell phone number the respondent has already provided. Centralized interviewers using computer-assisted telephone interviewing (CATI) software will conduct the follow-up survey. Interviewers will be trained on the study protocols, and their performance will be monitored regularly. The interviewers will first try to reach sample members at the specified contact numbers to administer the 60-minute follow-up survey.
For sample members who cannot be reached at the original phone number provided, interviewers will attempt to locate new telephone numbers by calling the secondary contacts provided for this purpose. Once the centralized interviewers have exhausted all leads, the case will be transferred to field staff, who will locate and interview the sample member in person. When field staff find a sample member and gain agreement to participate in the survey, they will use computer-assisted personal interviewing (CAPI) technology to interview the individual on site. The research team will attempt to interview all sample members within six months of their release date (36 months after random assignment).
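The telephone-to-field flow described above can be sketched as a per-case routing rule. This is a hypothetical Python sketch, not the survey team's CATI system: the `Case` record, the `record_phone_attempt` function, and the rule that a case moves to the field when its telephone leads run out or it reaches the 30-attempt maximum listed in Section B.3.3 are illustrative simplifications. The cohort-level timing benchmark described in Section B.1.3 is not modeled.

```python
from dataclasses import dataclass

MAX_PHONE_ATTEMPTS = 30  # telephone attempt maximum listed in Section B.3.3

@dataclass
class Case:
    """Hypothetical record for one released sample member."""
    case_id: str
    leads: list               # phone numbers: primary first, then numbers from secondary contacts
    attempts: int = 0
    mode: str = "CATI"        # "CATI" (telephone center) or "CAPI" (field)
    completed: bool = False

def record_phone_attempt(case, interviewed, number_invalid=False):
    """Update a case after one telephone attempt; move it to the field
    when all leads are exhausted or the attempt maximum is reached."""
    if case.completed or case.mode != "CATI":
        return case
    case.attempts += 1
    if interviewed:
        case.completed = True
    else:
        if number_invalid and case.leads:
            case.leads.pop(0)  # discard the bad number and move to the next lead
        if not case.leads or case.attempts >= MAX_PHONE_ATTEMPTS:
            case.mode = "CAPI"  # transfer to field staff for in-person locating and interviewing
    return case

case = Case("0001", ["primary", "secondary-contact-1"])
record_phone_attempt(case, interviewed=False, number_invalid=True)  # primary disconnected
record_phone_attempt(case, interviewed=False, number_invalid=True)  # secondary also invalid
print(case.mode, case.attempts)  # CAPI 2
```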

Copies of the proposed instruments can be found in the Appendices.

B.2.6 Procedures with Special Populations

The follow-up survey instrument will be available in English and Spanish. Interviewers will be available to conduct the interview in either language. Persons who speak neither English nor Spanish, deaf persons, and persons on extended overseas assignment or travel will be ineligible for follow-up, but information will be collected on reasons for ineligibility. Also, tracking will continue in case they become eligible for future follow-up activities. Persons who are incarcerated or institutionalized will be eligible for follow-up only if the institution authorizes the contact with the individual.

B.3 Methods to Maximize Response Rates and Deal with Non-response

The goal will be to administer the follow-up survey to all study participants in each site, reaching a target response rate of at least 80 percent. To achieve this response rate, the PACE team developed a comprehensive plan to minimize sample attrition and maximize response rates. This plan involves regular tracking and locating of all study participants, providing tokens of appreciation, and sample control during the data collection period.

B.3.1 Participant Tracking and Locating

The PACE team developed a comprehensive participant tracking system to maximize response to the PACE follow-up surveys. This multi-stage locating strategy blends active locating efforts (which involve direct participant contact) with passive locating efforts (which rely on searches of various consumer databases). At each point of contact with a participant (through tracking letters and at the end of the survey), the research team will collect updated name, address, telephone, and email information. The research team will also collect contact data for up to three people who do not live with the participant but are likely to know how to reach him or her. Interviewers use secondary contact data only if the primary contact information proves to be invalid, for example, if they encounter a disconnected telephone number or a letter returned as undeliverable. Appendix E shows a copy of the tracking letter.

In addition to the direct contact with participants, the research team will conduct several database searches to obtain additional contact information. Passive tracking resources are comparatively inexpensive and generally available, although some sources require special arrangements for access.

B.3.2 Tokens of Appreciation

Offering appropriate monetary gifts to study participants in appreciation of their time can help ensure a high response rate, which helps protect the impact estimates from nonresponse bias. Study participants will be provided $40 after completing the 36-month follow-up survey. As noted above, in addition to the survey, every four months participants will receive a tracking letter with a contact update form listing the contact information they previously provided. The letter will ask them to update this information by calling a toll-free number or by returning the contact update form in the enclosed postage-paid business reply envelope. Each tracking letter will include a $2 prepayment to thank the participant for his or her time. Study participants will also receive $5 in their advance letter.
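The tracking and incentive schedule can be tallied in a few lines. This Python sketch assumes every participant receives all five tracking letters (months 19, 23, 27, 31, and 35 after random assignment, as listed in Section B.3.3) plus the advance letter; the constant and function names are hypothetical.

```python
TRACKING_MONTHS = list(range(19, 36, 4))  # months after random assignment
TRACKING_PREPAY = 2    # dollars enclosed with each tracking letter
ADVANCE_PREPAY = 5     # dollars enclosed with the advance letter (month 36)
COMPLETION_GIFT = 40   # dollars after completing the 36-month interview

def max_tokens(letters_sent=len(TRACKING_MONTHS), completed=True):
    """Total tokens of appreciation a participant could receive between
    month 19 and completion of the 36-month survey."""
    return letters_sent * TRACKING_PREPAY + ADVANCE_PREPAY + (COMPLETION_GIFT if completed else 0)

print(TRACKING_MONTHS)              # [19, 23, 27, 31, 35]
print(max_tokens())                 # 55
print(max_tokens(completed=False))  # 15
```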

B.3.3 Sample Control during the Data Collection Period

During the data collection period, the research team will minimize non-response levels and the risk of non-response bias in the following ways:

Using trained interviewers (in the phone center and in the field) who are skilled at working with low-income adults and skilled in maintaining rapport with respondents, to minimize the number of break-offs and incidence of non-response bias.

Using a contact information update letter at 19, 23, 27, 31, and 35 months after random assignment (RA) to keep sample members engaged in the study and to enable the research team to locate them for the follow-up data collection activities. (See Appendix F for a copy of the contact information update letter.)

Using an advance letter that clearly conveys the purpose of the survey, the incentive structure, and reassurances about privacy, so that study participants perceive cooperation as worthwhile. (See Appendix H for a copy of the advance letter.)

Sending email reminders to non-respondents (for whom we have an email address) informing them of the study and allowing them the opportunity to schedule an interview (Appendix I).

Providing a toll-free study hotline number—which will be included in all communications to study participants—for them to use to ask questions about the survey, to update their contact information, and to indicate a preferred time to be called for the survey.

Taking additional tracking and locating steps, as needed, when the research team does not find sample members at the phone numbers or addresses previously collected.

Using customized materials in the field, such as “tried to reach you” flyers with study information and the toll-free number (Appendix J).

Employing a rigorous telephone process to ensure that all available contact information is utilized to make contact with participants. The approach includes a maximum of 30 telephone contact attempts and two months in the field. Spanish-speaking telephone interviewers will be available for participants with identified language barriers.

Requiring the survey supervisors to manage the sample so that the treatment and control groups in each PACE site achieve similar response rates.
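Supervisors' monitoring of treatment/control balance can be sketched as a simple per-site check. In this Python sketch, the 5-percentage-point tolerance, the record layout, and the `flag_imbalanced_sites` name are hypothetical; the document does not state what gap would prompt supervisory action.

```python
from collections import defaultdict

# Hypothetical tolerance: flag a site when T and C response rates differ by more than 5 points.
GAP_THRESHOLD = 0.05

def flag_imbalanced_sites(cases, threshold=GAP_THRESHOLD):
    """cases: iterable of (site, group, completed), with group "T" or "C".
    Returns {site: (rate_T, rate_C)} for sites whose T/C gap exceeds threshold."""
    released = defaultdict(int)
    completes = defaultdict(int)
    for site, group, completed in cases:
        released[(site, group)] += 1
        completes[(site, group)] += int(completed)
    flagged = {}
    for site in sorted({s for s, _ in released}):
        n_t, n_c = released.get((site, "T"), 0), released.get((site, "C"), 0)
        if n_t == 0 or n_c == 0:
            continue  # cannot compare until both groups have released cases
        rate_t = completes[(site, "T")] / n_t
        rate_c = completes[(site, "C")] / n_c
        if abs(rate_t - rate_c) > threshold:
            flagged[site] = (rate_t, rate_c)
    return flagged

cases = ([("A", "T", i < 8) for i in range(10)] + [("A", "C", i < 8) for i in range(10)]
         + [("B", "T", i < 9) for i in range(10)] + [("B", "C", i < 6) for i in range(10)])
print(flag_imbalanced_sites(cases))  # {'B': (0.9, 0.6)}
```

A flagged site would signal where additional effort (for example, more field locating for the lagging group) could narrow the gap before a cohort closes.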

Through these methods, the research team anticipates being able to achieve the targeted 80 percent response rate for the follow-up survey.6

B.4 Tests of Procedures or Methods to be Undertaken

To keep the instrument within the burden estimate, the research team pretested and edited the instruments to minimize burden. During internal pretesting, all instruments were closely examined to eliminate unnecessary respondent burden, and questions deemed unnecessary were removed. External pretesting was conducted with five respondents, and the length of the instrument was found to be consistent with the burden estimate. Some instrument revisions were made to improve the clarity of questions and response categories. Edits made as a result of the pretest have been incorporated into the instruments attached in Appendix C.

B.5 Individuals Consulted on Statistical Aspects of the Design

The individuals shown in Exhibit B-5 assisted ACF in the statistical design of the evaluation.

Exhibit B-5. Individuals Consulted on the Study Design

| Name | Role in Study |
|---|---|
| Karen Gardiner, Abt Associates Inc. | Project Director |
| Dr. Howard Rolston, Abt Associates Inc. | Principal Investigator |
| Dr. David Fein, Abt Associates Inc. | Principal Investigator |
| David Judkins, Abt Associates Inc. | Director of Analysis |

Inquiries regarding the statistical aspects of the study’s planned analysis should be directed to:

Karen Gardiner, PACE Project Director

David Fein, PACE Principal Investigator

David Judkins, PACE Director of Analysis

Brendan Kelly, Office of Planning, Research and Evaluation

Administration for Children and Families, U.S. DHHS

References

Church, Allan H. 1993. “The Effect of Incentives on Mail Survey Response Rates: A Meta-Analysis.” Public Opinion Quarterly 57:62-79.

Clark, Stephen M. and Stephane P. Mack. 2009. “SIPP 2008 Incentive Analysis.” Presented at the research conference of the Federal Committee on Statistical Methodology, November 3, Washington D.C.

Creighton, K., King, K., and Martin, E. (2007). The use of monetary incentives in Census Bureau longitudinal surveys. Washington, DC: U.S. Census Bureau.

Deng, Yiting, D. Sunshine Hillygus, Jerome P. Reiter, Yajuan Si, and Siyu Zheng. 2013. “Handling Attrition in Longitudinal Studies: The Case for Refreshment Samples.” Statistical Science 28(2):238-56.

Duffer, A., et al. (1994). Effects of incentive payments on response rates and field costs in a pretest of a national CAPI survey. Chapel Hill: Research Triangle Institute.

Educational Testing Service (1991). National Adult Literacy Survey addendum to clearance package, volume II: Analyses of the NALS field test, pp. 2-3.

Edwards, P., Cooper, R., Roberts, I., and Frost, C. 2005. Meta-Analysis of Randomised Trials of Monetary Incentives and Response to Mailed Questionnaires. Journal of Epidemiology and Community Health 59:987-99.

Edwards, P., Roberts, I., Clarke, M., DiGuiseppi, C., Pratap, S., Wentz, R., and Kwan, I. 2002. Increasing Response Rates to Postal Questionnaires: Systematic Review. British Medical Journal 324:1883-85.

Fitzgerald, John, Peter Gottschalk, and Robert Moffitt. 1998. “An Analysis of Sample Attrition in Panel Data: The Michigan Panel Study of Income Dynamics.” NBER Technical Working Paper No. 220.

Greenberg, D., Michalopoulos, C., and Robins, P. (2003). A Meta-Analysis of Government-Sponsored Training Programs. Industrial and Labor Relations Review.

Hernandez, Donald J. 1999. “Comparing Response Rates for SPD, PSID, and NLSY.” Memorandum, January 28. (http://www.census.gov/spd/workpaper/spd-comp.htm; retrieved August 6, 2014)

Hill, Martha. 1991. The Panel Study of Income Dynamics: A User’s Guide. Vol. 2. Newbury Park, CA: Sage.

Kennet, J., and Gfroerer, J. (Eds.). (2005). Evaluating and improving methods used in the National Survey on Drug Use and Health (DHHS Publication No. SMA 05-4044, Methodology Series M-5). Rockville, MD: Substance Abuse and Mental Health Services Administration, Office of Applied Studies.

Little, T.D., Jorgensen, M.S., Lang, K.M., and Moore, W. (2014). On the joys of missing data. Journal of Pediatric Psychology, 39(2), 151-162.

Maguire, S., Freely, J., Clymer, C., Conway, M., and Schwartz, D. (2010). Tuning In to Local Labor Markets: Findings From the Sectoral Employment Impact Study. Philadelphia, PA: Public/Private Ventures.

Nisar, H., Klerman, J. and Juras, R. (2013). Designing training evaluations: New estimates of design parameters and their implications for design (working paper). Cambridge, MA: Abt Associates.

Orr, L.L., Bloom, H.S., Bell, S.H., Lin, W., Cave, G., and Doolittle, F. (1996). Does Job Training for the Disadvantaged Work? Evidence from the National JTPA Study. Washington, DC: Urban Institute Press.

Roder, A., and Elliott, M. (2011). A Promising Start: Year Up’s Initial Impacts on Low-Income Young Adults’ Careers. New York, NY: Economic Mobility Corporation.

Scrivener, S. and Coghlan, E. (2011). Opening Doors to Student Success: A Synthesis of Findings from an Evaluation at Six Community Colleges. New York: MDRC.

Sommo, C., Mayer, A., Rudd, T., and Cullinan, D. (2012). Commencement Day: Six-Year Effects of a Freshman Learning Community Program at Kingsborough Community College. New York: MDRC.

Weiss, M., Brock, T., Sommo, C., Rudd, T., and Turner, M. (2011). Serving Community College Students on Probation: Four-Year Findings from Chaffey College’s Opening Doors Program. New York: MDRC.

Yammarino, F., Skinner, S., and Childers, T. (1991). Understanding Mail Survey Response Behavior: A Meta-Analysis. Public Opinion Quarterly 55:613-39.

Zeidenberg, M., Cho, S., and Jenkins, D. (2010). Washington State's Integrated Basic Education and Skills Training Program (I-BEST): New Evidence of Effectiveness (CCRC Working Paper No. 20). New York: Community College Research Center, Teachers College, Columbia University.

1 From the project’s inception in 2007 through October 2014, the project was called Innovative Strategies for Increasing Self-Sufficiency.

2 The Health Profession Opportunity Grants (HPOG) program provides education and training to Temporary Assistance for Needy Families recipients and other low-income individuals for occupations in the health care field that pay well and are expected to either experience labor shortages or be in high demand. The HPOG program is administered by the Office of Family Assistance within ACF. In FY 2010, $67 million in grant awards were made to 32 entities located across 23 states, including four tribal colleges and one tribal organization. These demonstration projects are intended to address two challenges: the increasing shortfall in the supply of health care professionals in the face of expanding demand, and the increasing requirement for a postsecondary education to secure a well-paying job. Grant funds may be used for training and education as well as supportive services such as case management, child care, and transportation. http://www.acf.hhs.gov/programs/opre/welfare_employ/evaluation_hpog/overview.html

3 These earnings variance estimates (derived from standard deviations of $12,748 and $10,160 in annual earnings for the treatment and control groups, respectively) were the only variance estimates available for populations actually served in PACE sites. Though based on a small survey sample (120 treatment, 44 control), they are very close to estimates P/PV provided to PACE for participants in its sectoral demonstration project, which involved a wider age range than the youth (18–24) targeted in Year Up. The projected variance reductions due to use of baseline variables are from Nisar, Klerman, and Juras (2013).

4 See, for example, Kingsborough learning community (Scrivener et al. 2008); Louisiana performance-based scholarship (Scrivener and Coghlan 2011); Chaffey College (Weiss et al. 2011).

5 Authors’ calculations based on estimated mean, fraction experimental, coefficient on experimental dummy in Greenberg et al. (2003, Tables 3 & 5).

6 As noted earlier, based on response rates to date, the PACE team expects to achieve an 80 percent response rate on the 15-month survey.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Abt Single-Sided Body Template |

| Author | Missy Robinson |

| File Modified | 0000-00-00 |

| File Created | 2021-01-24 |
