ECE-ICHQ Supporting Statement A


Pre-testing of Evaluation Surveys


OMB: 0970-0355

Assessing the Implementation and Cost of High Quality Early Care and Education: Comparative Multi-Case Study, Phase 1

OMB Information Collection Request

0970-0355

Supporting Statement

Part A

September 2015

Submitted by:

Office of Planning, Research and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

7th Floor, West Aerospace Building

370 L’Enfant Promenade SW

Washington, DC 20447

Project officers:

Ivelisse Martinez-Beck, Senior Social Science Research Analyst and

Child Care Research Team Leader

Meryl Barofsky, Social Science Research Analyst

Contents

A1. Necessity for the data collection

A2. Purpose of survey and data collection procedures

A3. Improved information technology to reduce burden

A4. Efforts to identify duplication

A5. Involvement of small organizations

A6. Consequences of less frequent data collection

A7. Special circumstances

A8. Federal Register notice and consultation

A9. Incentives for respondents

A10. Privacy of respondents

A11. Sensitive questions

A12. Estimation of information collection burden

A13. Cost burden to respondents or record keepers

A14. Estimate of cost to the federal government

A16. Plan and time schedule for information collection, tabulation and publication

A17. Reasons not to display OMB expiration date

A18. Exceptions to certification for Paperwork Reduction Act submissions

Tables

A.1 Research questions

A.2 Phases of data collection for the ECE-ICHQ multi-case study

A.3 Data collection activity for Phase 1 of the ECE-ICHQ Multi-Case Study, by timing, respondent, and format

A.4 ECE-ICHQ technical expert panel members

A.5 Total burden requested under this information collection

A.6 Multi-case study schedule

Attachments

ATTACHMENT A: CENTER DIRECTOR TELEPHONE SCRIPT

ATTACHMENT B: CENTER DIRECTOR SELF ADMINISTERED QUESTIONNAIRE (SAQ)

ATTACHMENT C: SAQ COGNITIVE INTERVIEW PROTOCOL

ATTACHMENT D: IMPLEMENTATION INTERVIEW PROTOCOL

ATTACHMENT E: COST WORKBOOK

ATTACHMENT F: COST INTERVIEW PROTOCOL

ATTACHMENT G: TIME-USE SURVEY

ATTACHMENT H: FEDERAL REGISTER NOTICE

ATTACHMENT I: INITIAL EMAIL TO CENTER DIRECTORS

ATTACHMENT J: ADDITIONAL RECRUITMENT MATERIALS

A1. Necessity for the Data Collection

The Administration for Children and Families (ACF) at the U.S. Department of Health and Human Services (HHS) seeks approval to collect information to inform the development of measures of the implementation and costs of high quality early care and education. This information collection is part of the project, Assessing the Implementation and Cost of High Quality Early Care and Education (ECE-ICHQ).

Study Background

Support at the federal and state levels for improving the quality of early care and education (ECE) services for young children has increased, based on evidence about the benefits of high quality ECE, particularly for low-income children. However, little information is available about how to target funds effectively to increase quality in ECE. ACF’s Office of Planning, Research and Evaluation (OPRE) contracted with Mathematica Policy Research and consultant Elizabeth Davis of the University of Minnesota to conduct the ECE-ICHQ project, with the goal of creating an instrument that will produce measures of the implementation and costs of the key functions that support quality in center-based ECE serving children from birth to age 5. The framework for measuring implementation of ECE center functions, which range from classroom instruction and monitoring individual child progress to strategic program planning and evaluation, will be created using principles of implementation science.

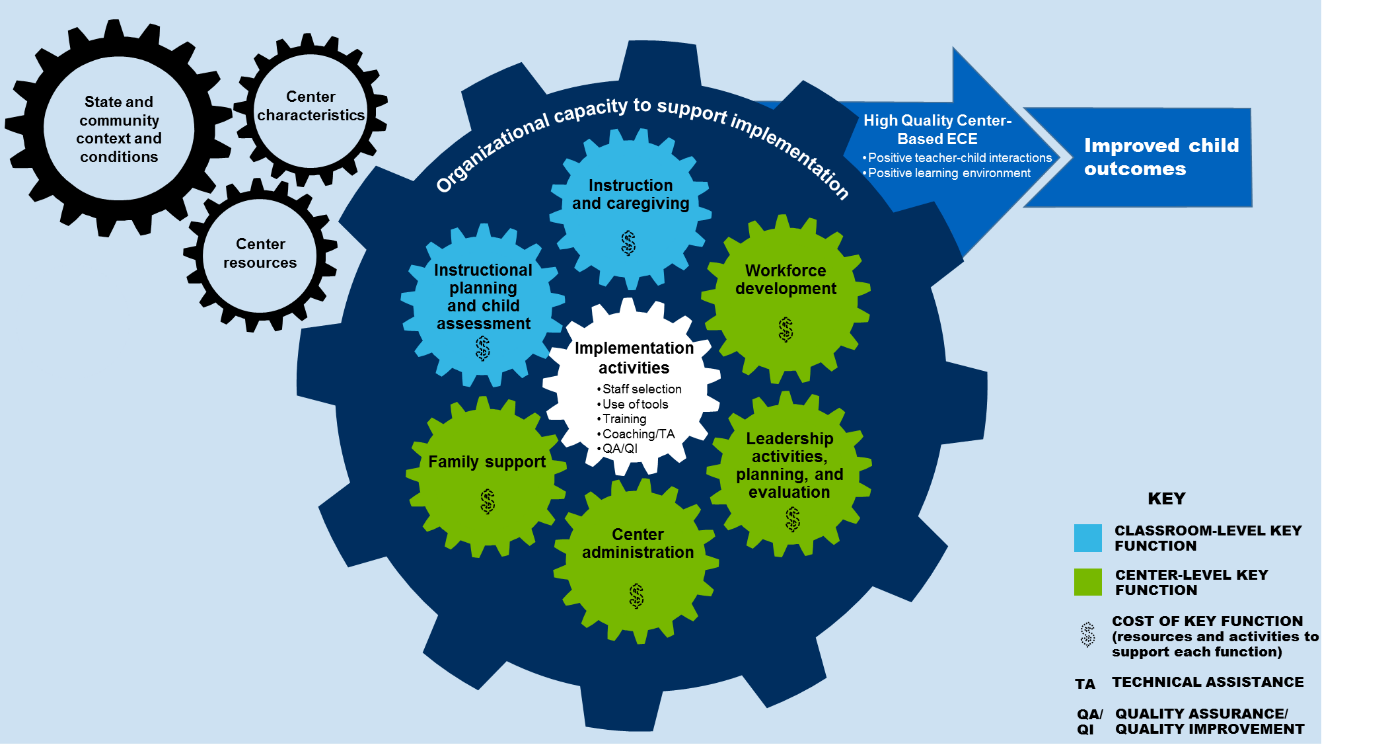

The premise of the ECE-ICHQ project is that centers vary in their investments in, and capacities to implement, key functions in ways that support quality. The draft conceptual framework in Figure A.1 will guide the study’s approach to data collection. The framework depicts the key functions of a center-based ECE provider (a term shortened to “ECE center” throughout), the costs underlying them, and how these functions are driven by elements that influence whether and how a center can achieve high quality and improve child outcomes. The gears represent the key functions that the study team expects to find in ECE centers and that may support quality. What the functions look like and how they are carried out within each ECE center are driven by (1) the implementation activities that support them, (2) the organizational capacity in which they operate, and (3) the resources and characteristics of the ECE center. All of these are further shaped by the context and conditions within the broader community and state.

What each center is doing to support quality, and how these efforts are implemented, will be captured by implementation measures. Costs will be assigned to the key functions of an ECE center to describe how resources are distributed within total costs. By producing cost measures for each function, we will identify how resources are distributed within ECE centers in ways that may influence quality.

Figure A.1. Draft conceptual framework for the ECE-ICHQ project

Since the fall of 2014, the ECE-ICHQ study team has developed a conceptual framework; conducted a review of the literature (Caronongan et al., forthcoming); and consulted with a technical expert panel (TEP). This information collection request is for Phase 1 of a three-phase comparative multi-case study that will be both a qualitative study of the implementation of key functions of center-based ECE providers and an analysis of costs. The goals of the study are (1) to test and refine a mixed methods approach to identifying the implementation activities and costs of key functions within ECE centers and (2) to produce data for creating measures of implementation and costs. Phase 1 will be used to thoroughly test data collection tools and methods, to conduct cognitive interviewing to obtain feedback from respondents about the tools, and to refine the tools for later phases. Subsequent phases will further refine the data collection tools and procedures (ACF will submit additional information collection requests for these future phases).

Legal or Administrative Requirements that Necessitate the Collection

There are no legal or administrative requirements that necessitate this collection. ACF is undertaking the collection at the discretion of the agency.

A2. Purpose of Survey and Data Collection Procedures

Overview of Purpose and Approach

The purpose of the information collected under the current request is to test the usability of the ECE-ICHQ measurement items and to refine the data collection tools and approach. Following this initial phase, ACF will submit additional information collection requests to further design and finalize the data collection tools. We expect to refine the instruments over three phases, during which the data collection tools will become progressively more structured. For example, while Phase 1 includes on-site, semi-structured interviews with center directors, in Phases 2 and 3 the same information will be collected through a self-administered questionnaire, with follow-up conducted by telephone. The specific goals of Phase 1 are to determine whether:

the key functions can be defined clearly and distinctly,

costs can readily be assigned to them,

the functions apply across a range of center-based ECE providers serving children from birth to age 5, and

the measurement items are clear, concise and valid in measuring key functions, implementation, and costs.

Research Questions

Table A.1 outlines the ECE-ICHQ research questions addressed by the multi-case study. While all questions are relevant to Phase 1, those of particular importance to this current request are bolded. The study team developed these research questions in consultation with the TEP and drawing on the literature review, in order to address gaps in knowledge and measurement.

Table A.1. Research questions

Questions focused on ECE centers:

What are the differences in center characteristics, contexts, and conditions that affect implementation and costs?

What are the attributes of the program-level and classroom-level functions that a center-based ECE provider pursues, and what implementation activities support each function?

What are the costs associated with the implementation of key functions?

How do staff members use their time in support of key functions within the center?

Questions focused on measures development:

How can time-use data from selected staff be efficiently collected and analyzed to allocate labor costs into distinct cost categories by key function?

What approaches to data collection and coding will produce an efficient and feasible instrument for broad use?

Questions focused on the purpose and relevance of the measures for policy and practice:

What are the best methods for aligning implementation and cost data to produce relevant and useful measures that will inform decisions about how to invest in implementation activities and key functions that are likely to lead to quality?

How will the measures help practitioners decide which activities are useful to pursue within a program or classroom and how to implement them?

How might policymakers use these measures to inform decisions about funding, regulation, and quality investments in center-based ECE?

How might the measures inform the use and allocation of resources at the practitioner, state, and possibly national level?

Study Design

The study will include three phases of data collection, outlined in Table A.2. Across the three phases, the study team will collect data from 72 selected ECE centers. Phase 1, the focus of this request and bolded in the table below, involves collecting and analyzing data during visits to 24 ECE centers. Phase 1 will allow the study team to test data collection tools and procedures, as well as to refine these tools for Phases 2 and 3 (to be submitted in future information collection requests). In Phase 2, the study team will select 21 new centers to respond to the updated data collection tools, via telephone and web. Finally, in Phase 3, the study team will collect data from the 24 ECE centers included in Phase 1 and 27 newly selected centers. This will allow the team to test refined tools and remote data collection procedures based on Phases 1 and 2, in addition to validating the tools by comparing key data elements from Phases 1 and 3.

Table A.2. Phases of data collection for the ECE-ICHQ multi-case study

| Phase | Purpose | Methods | Number of centers |
| --- | --- | --- | --- |
| 1 | Identify the range of implementation activities and key functions; test data collection tools and methods using cognitive interviewing techniques | Semi-structured, on-site interviews; electronic or paper self-administered questionnaires; electronic cost workbooks; paper time-use surveys | 24 |
| 2 | Test usability and efficiency of structured data collection tools and methods; specifically test web-based collection for time-use data for teachers and aides | Electronic self-administered questionnaires; electronic cost workbooks; telephone interviews; web-based time-use survey | 21 |
| 3 | Conduct structured data collection through refined tools and methods | Electronic self-administered questionnaires; electronic cost workbooks; telephone interviews; web-based time-use survey | 51 (27 new, plus 24 from Phase 1) |
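The center counts in Table A.2 can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the `phases` dictionary and helper names are hypothetical, and it treats the 24 returning Phase 3 centers as the Phase 1 centers (as the text states), counting each center once so that the phase totals reconcile with the 72 distinct centers across all three phases.

```python
# Center counts by phase from Table A.2. The 24 returning centers in Phase 3
# are the Phase 1 centers, so they are not new to the study.
phases = {
    1: {"new": 24, "returning": 0},
    2: {"new": 21, "returning": 0},
    3: {"new": 27, "returning": 24},
}


def centers_in_phase(phase):
    """Number of centers providing data in a given phase."""
    return phases[phase]["new"] + phases[phase]["returning"]


def distinct_centers():
    """Distinct centers across all phases; returning centers are counted once."""
    return sum(counts["new"] for counts in phases.values())


for phase in phases:
    print(f"Phase {phase}: {centers_in_phase(phase)} centers")
print(f"Distinct centers across phases: {distinct_centers()}")
```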

Universe of Data Collection Efforts

This information collection request includes the following activities to support Phase 1 of ECE-ICHQ data collection. Table A.3 lists each activity, when it will be conducted, the respondent type, and the format.

Table A.3. Data collection activity for Phase 1 of the ECE-ICHQ Multi-Case Study, by timing, respondent, and format

| Data collection activity | Timing | Respondents | Format |
| --- | --- | --- | --- |
| Self-administered questionnaire | Sent in advance of site visits | Site administrator or center director | Electronic or paper |
| Document review | Conducted in advance of and during site visits | Not applicable (conducted by study team) | Paper |
| Implementation interview protocol | Conducted during site visits | Site administrator or center director; education specialist; umbrella organization administrator (as applicable) | In-person interview |
| Cost workbook | Sent in advance of site visits | Financial manager at site; financial manager of umbrella organization (as applicable) | Excel workbook |
| Cost interview protocol | Conducted during site visits | Financial manager at site; financial manager of umbrella organization (as applicable) | In-person interview |
| Time-use survey | Distributed and completed during site visits | Site administrator or center director; education specialist; teachers; aides | Paper |

Center recruitment and engagement call script. Project staff will call the director of each selected center to discuss the study and recruit the director to participate. The center recruitment and engagement call script (Attachment A) will guide the recruiter through the process of (1) explaining the study; (2) requesting participation from the center director; and, if the director agrees, (3) collecting background information about the center, including staff structure, and requesting documents that will help the study team gather information in advance and prepare for the on-site visit. Finally, the recruiter will schedule the site visit, which will last a day and a half.

Document review. The study team will conduct a document review in advance of the visit to become familiar with information that can support data collection about implementation. Specifically, the team will review program planning and training documents to complete sections of the SAQ where possible. While on site, the team will collect additional available documents, such as staffing charts, schedules, and other written materials, to gather details of implementation. The document reviews will also familiarize the study team with the type and format of any existing financial information, helping the team understand how the key function categories may align with the categories ECE centers already use to track costs. The team will use the document reviews to refine the data collection approach and the instructions for specific tools in later phases. The document reviews will not require additional time from respondents; time for gathering documents and responding to questions is included in the time needed for other data collection tools.

Self-administered questionnaire (SAQ). The SAQ (Attachment B) will collect closed-ended information on center characteristics, community and state contexts, organizational capacities to support implementation, key functions, and implementation activities that support key functions. Where feasible, the research team will complete SAQ questions by reviewing documents received in advance of the visit. The partially completed SAQ will then be sent to the site administrator or center director for completion before the visit, and the study team will review it with respondents on site. One goal of data collection is to strike a balance between what administrators can reasonably be asked to complete on their own and what information should be collected through interviews. Responses to the SAQ are intended to guide the implementation interview, signaling where interviewers should clarify information or probe for more detail on each topic.

Self-administered questionnaire (SAQ) cognitive interview protocol. The SAQ cognitive interview protocol (Attachment C) is a semi-structured interview guide that will assess respondents’ experience completing the questionnaire, including the clarity of questions, appropriateness of response categories, and sources of information they drew from to answer the questions.

Implementation interview protocol. The implementation interview protocol (Attachment D) is a semi-structured interview guide that will be adapted based on responses to the SAQ. Using the protocol, interviewers will collect comprehensive information across a range of topics to build a picture of implementation, while also learning about the best methods for collecting the information. For example, the protocol includes questions to document the range of decisions and processes that support implementation of the key functions. The protocol also includes a set of cognitive interview questions to obtain respondents’ feedback on the clarity of questions and their overall experience with the interview.

Cost workbook. The cost workbook (Attachment E) will collect information on costs, including salaries and benefits by staff category, nonlabor costs, and indirect (overhead) costs. The study team will send electronic workbooks to centers to complete in advance of site visits. The workbook will be organized for ease of completion by center directors and financial managers and will be accompanied by clear, succinct definitions of items as well as instructions for completion. Although the project’s goal is to identify costs related to key functions, the workbook itself is not organized around key functions; instead, it requests information that will be used to allocate costs to key functions. For example, training costs will be reported separately by topics associated with the key functions. The workbook will also request some costs associated with implementation, such as the costs of purchasing, training on, and receiving technical assistance or coaching to support adoption of a new curriculum or child assessment tool.

Cost interview protocol. The cost protocol (Attachment F) for Phase 1 will also be a semi-structured interview guide. The interview will collect information on topics such as the alignment of implementation and cost items. The protocol also includes a set of questions to obtain respondents’ feedback on the clarity of questions and overall experience in completing the cost workbook. As in the implementation protocol, the goal is to use what is learned in Phase 1 to develop structured instruments for Phases 2 and 3.

Time-use survey. The purpose of the survey (Attachment G) is to collect information on staff time use that will help convert labor time into costs associated with the key functions. Several recent Mathematica cost studies have fielded web surveys to capture time allocation by program staff; this experience should make conducting and refining the survey more efficient.

In Phase 1, the time-use surveys will be administered on paper. The study team will debrief with each staff member after completion of the survey to obtain his or her feedback about the survey length, language, and general ease of completion using a set of cognitive interview questions (included in Attachment G). Using the information from this cognitive interviewing, the team will refine the survey and create a web-based version for use in Phases 2 and 3.
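To illustrate how time-use data can turn labor time into costs by key function, the sketch below splits each staff member’s annual labor cost across functions in proportion to the hours reported for a typical week. Proportional allocation is an assumption made for illustration, not the study’s final method, and every staff member, dollar amount, hour count, and function label below is hypothetical rather than study data.

```python
from collections import defaultdict

# Hypothetical inputs for illustration only; these are not study data.
# Annual labor cost (salary plus benefits) per staff member, as a cost workbook might report it.
labor_cost = {"director": 60000.0, "teacher": 32000.0}

# Hours spent on each key function in a "typical week", as a time-use survey might report them.
# Function labels are illustrative, not the study's final list of key functions.
weekly_hours = {
    "director": {"program planning": 20, "monitoring child progress": 5, "instruction": 15},
    "teacher": {"instruction": 30, "monitoring child progress": 10},
}


def allocate_labor_costs(labor_cost, weekly_hours):
    """Allocate each person's labor cost to key functions in proportion to reported hours."""
    totals = defaultdict(float)
    for person, cost in labor_cost.items():
        hours = weekly_hours.get(person, {})
        total_hours = sum(hours.values())
        if total_hours == 0:
            continue  # no time-use data for this person, so nothing to allocate
        for function, h in hours.items():
            totals[function] += cost * h / total_hours
    return dict(totals)


allocation = allocate_labor_costs(labor_cost, weekly_hours)
for function, cost in sorted(allocation.items()):
    print(f"{function}: ${cost:,.2f}")
```

Because every reported hour is assigned to some function, the allocated amounts sum to total labor cost ($92,000 in this hypothetical example).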

The study team will conduct Phase 1 in eight centers in each of three selected states, for a total of 24 centers. More detailed information about state and center selection can be found in Supporting Statement B. After an initial recruiting call, study staff will hold an additional call with the center director to talk about the study and learn more about the center’s staffing structure and availability for the site visit. In advance of the site visit, the study team will send the center director the SAQ and cost workbook. If the study team receives the completed materials before the site visit, the team can make better use of its time on site. If the SAQ and cost workbook are not completed before the visit, study staff will walk through the instruments with the center director while on site. Whether or not the SAQ and cost workbook are completed ahead of time, site visits will include two semi-structured interviews: one about implementation and one about costs. Finally, study staff will distribute the time-use survey to center directors and other staff.

A3. Improved Information Technology to Reduce Burden

Most data collection activities undertaken as part of Phase 1 do not make use of information technology. The study team will primarily use paper questionnaires and in-person interviews to thoroughly test data collection tools and methods, conduct cognitive interviewing to obtain feedback from the respondents about the tools, and refine the tools for later phases.

The center director SAQ is provided in portable document format (PDF) or Microsoft Word format to facilitate efficient transmission. Respondents may also complete the SAQ in hard copy, depending on their preference or comfort level.

The cost workbooks will be provided in an electronic spreadsheet format. Respondents may also complete the workbook in hard copy, depending on their preference or comfort level.

The study team will not use additional information technology, such as computerized interviewing, for two reasons: (1) the instruments will be refined for use in Phases 2 and 3 where electronic options will be used, and (2) the expected sample size for these instruments at Phase 1 does not justify the costs related to development and maintenance of web-based applications.

A4. Efforts to Identify Duplication

None of the study instruments will ask for information that can be reliably obtained from alternative data sources in a format that assigns costs to key functions. No comparable data have been collected on the costs of key functions associated with providing quality services at the center level in ECE programs serving children from birth to age 5.

Furthermore, the study instruments are designed to minimize duplication across the data collected through each instrument. Each center will complete just one cost workbook and one SAQ focused on implementation. The time-use surveys will be administered to multiple respondents (teachers, directors, managers, and aides) to provide a full picture of staff members’ time use.

A5. Involvement of Small Organizations

Some child care centers participating in Phase 1 are likely to be small organizations. To minimize the burden on these centers, the study team will carefully schedule interviews and small group discussions with directors, managers, and teachers at times that are most convenient for them and that will not interfere with the care of children. For example, the team will schedule interviews with directors in the early morning or late afternoon, when fewer children are at the center, and will schedule teacher interviews before or after school, during nap times, or during scheduled breaks.

A6. Consequences of Less Frequent Data Collection

Phase 1 is a one-time data collection. The study team will use findings from Phase 1 to inform refinement of data collection tools and methods but findings will not be reported. Centers involved in Phase 1 will also be asked to participate in Phase 3 and given the final data collection instruments so that the data collected can be analyzed and reported across Phases 2 and 3. Repeating data collection with the same centers in Phases 1 and 3 will also allow the team to analyze key constructs across Phases 1 and 3 to assist in validating the Phase 3 methods. For example, the team will analyze the time-use data from the centers that were included in both Phases 1 and 3 to examine the consistency in the data reported, particularly to see if variations may occur based on the time of year in which the “typical week” occurs.

A7. Special Circumstances

There are no special circumstances for the proposed data collection efforts.

A8. Federal Register Notice and Consultation

Federal Register Notice and Comments

In accordance with the Paperwork Reduction Act of 1995 (Pub. L. 104-13) and Office of Management and Budget (OMB) regulations at 5 CFR Part 1320 (60 FR 44978, August 29, 1995), ACF published a notice in the Federal Register announcing the agency’s intention to request an OMB review of this information collection activity. This notice was published on September 15, 2014 (vol. 79, no. 178, p. 54985), and provided a 60-day period for public comment. ACF did not receive any comments in response to this notice. A copy of the notice is included as Attachment H.

Consultation with Experts Outside of the Study

In designing the study, the study team drew on the expertise of a pool of experts to complement the knowledge and experience of the team (Table A.4). To ensure that multiple perspectives and areas of expertise were represented, the expert consultants included program administrators, policy experts, and researchers. Collectively, the study team and external experts have specialized knowledge in measuring child care quality, cost-benefit analysis, time-use analysis and implementation associated with high quality child care.

Through a combination of in-person meetings and conference calls, study experts have provided input to help the team (1) define what ECE-ICHQ will measure; (2) identify elements of the conceptual framework and the relationships between them; and (3) make key decisions about the approach, sampling, and methods of the study. The experts have provided input about important aspects of the study and the approach to Phase 1, including measurement approaches and tools, criteria for center selection, and how to promote center participation. The study team has also solicited input from experts via individual telephone consultation to discuss the conceptual framework and its implications for data collection and to obtain feedback on the data collection tools.

Table A.4. ECE-ICHQ technical expert panel members

| Name | Affiliation |
| --- | --- |
| Melanie Brizzi | Office of Early Childhood and Out of School Learning, Indiana Family and Social Services Administration |
| Rena Hallam | Delaware Institute for Excellence in Early Childhood, University of Delaware |
| Lynn Karoly | RAND Corporation |
| Mark Kehoe | Brightside Academy |
| Henry Levin | Teachers College, Columbia University |
| Katherine Magnuson | School of Social Work, University of Wisconsin–Madison |
| Tammy Mann | The Campagna Center |
| Nancy Marshall | Wellesley Centers for Women, Wellesley College |
| Allison Metz | National Implementation Research Network, FPG Child Development Institute, University of North Carolina at Chapel Hill |
| Louise Stoney | Alliance for Early Childhood Finance |

A9. Incentives for Respondents

With OMB approval, the study team will offer centers a gift card valued at $100 to be distributed to center staff at the center director’s discretion as a token of appreciation for their involvement in the study, including completing the SAQ, participating in interviews, and completing the cost workbook and time-use surveys. The team will also provide a small gift, valued at less than $2, to center staff who participate directly in the data collection. This amount was determined based on the estimated burden to participants and is consistent with those offered in prior studies using similar methodologies and data collection instruments (such as the Head Start Family and Child Experiences Survey and the Early Head Start Family and Child Experiences Survey).

A10. Privacy of Respondents

Information collected will be kept private to the extent permitted by law. The study team will inform respondents of all planned uses of data, that their participation is voluntary, and that their information will be kept private to the extent permitted by law. The study team will submit all materials planned for use with respondents as part of this information collection to the New England Institutional Review Board for approval.

As specified in the contract, Mathematica will protect respondents’ privacy to the extent permitted by law and will comply with all federal and departmental regulations for private information. Mathematica has developed a data safety and monitoring plan that assesses all protections of respondents’ personally identifiable information (PII). Mathematica will ensure that all of its employees, subcontractors (at all tiers), and employees of each subcontractor, who perform work under this contract, are trained on data privacy issues and comply with the above requirements. Upon hire, every Mathematica employee signs a Confidentiality Pledge stating that any identifying facts or information about individuals, businesses, organizations, and families participating in projects conducted by Mathematica are private and are not to be released unless authorized.

As specified in the evaluator’s contract, Mathematica will use Federal Information Processing Standard (currently, FIPS 140-2) compliant encryption (Security Requirements for Cryptographic Modules, as amended) to protect all instances of sensitive information during storage and transmission. Mathematica will securely generate and manage encryption keys to prevent unauthorized decryption of information, in accordance with the Federal Information Processing Standard. Mathematica will (1) ensure that this standard is incorporated into its property management and control system and (2) establish a procedure to account for all laptop computers, desktop computers, and other mobile devices and portable media that store or process sensitive information. Any data stored electronically will be secured in accordance with the most current National Institute of Standards and Technology requirements and other applicable federal and departmental regulations. In addition, Mathematica must submit a plan for minimizing, to the extent possible, the inclusion of sensitive information on paper records and for protecting any paper records, field notes, or other documents that contain sensitive or personally identifiable information, in order to ensure secure storage and limits on access.

A11. Sensitive Questions

There are no sensitive questions in this data collection.

A12. Estimation of Information Collection Burden

Newly Requested Information Collections

Table A.5 summarizes the estimated reporting burden and costs for each of the study tools included in this information collection request. The estimates include time for respondents to review instructions, search data sources, complete and review their responses, and transmit or disclose information. Figures are estimated as follows:

Center recruitment and engagement call script. The script has two parts. Part 1 focuses on recruitment and will take approximately 20 minutes. Part 2 focuses on obtaining information from centers that agree to participate in the study and will take approximately 25 minutes. Based on past studies, we expect to contact 72 centers to secure the participation of the 24 centers needed for this study. The study team therefore expects to conduct Part 1 with 72 centers and Part 2 with the 24 centers that agree to participate.

Self-administered questionnaire (SAQ). The study team will survey 24 center directors. Center directors might need to consult with other staff to answer some SAQ items. The estimated burden for the SAQ is 3.5 hours, inclusive of consultations with other staff.

Self-administered questionnaire (SAQ) cognitive interview protocol. The center director at each of the 24 centers will complete the SAQ cognitive interview. The interview is expected to take 30 minutes.

Implementation interview protocol. The 2.5-hour implementation interview will be conducted with the center director and education specialist, when applicable, at each of the 24 centers.

Cost workbooks. The financial manager at each center or umbrella organization (or a combination of the two) will complete the cost workbook. The cost workbook is expected to take 6.5 hours to complete, including time to gather the necessary documents and to address follow-up questions from the study team clarifying information reported in the workbook.

Cost interview. The cost interview will be conducted with the financial manager at each center, at its umbrella organization, or at both. The total interview time across all participating respondents for each center is expected to be 1.5 hours.

Time-use survey. At each of the 24 centers, the time-use survey will be administered to the site administrator or center director, one specialist, and an average of six teachers, three assistant teachers, and two aides (13 respondents per center, or 312 in total). The time-use survey is expected to take 30 minutes to complete, plus a 15-minute debriefing (0.75 hours in total).
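The respondent count and burden for the time-use survey follow directly from the per-center staffing mix described above. The Python sketch below is illustrative only. The dictionary labels are hypothetical, and the sketch assumes every center has exactly the stated average staffing.

```python
# Illustrative sketch: time-use survey respondents and burden hours.
# Assumption: every center has the average staffing mix stated in the text.
CENTERS = 24
staff_per_center = {
    "site administrator or center director": 1,
    "specialist": 1,
    "teachers": 6,
    "assistant teachers": 3,
    "aides": 2,
}
MINUTES_SURVEY = 30   # time to complete the survey
MINUTES_DEBRIEF = 15  # follow-up debriefing

per_center = sum(staff_per_center.values())                   # respondents per center
respondents = CENTERS * per_center                            # respondents across all centers
hours_per_response = (MINUTES_SURVEY + MINUTES_DEBRIEF) / 60  # survey plus debriefing
total_hours = respondents * hours_per_response                # annual burden hours

print(per_center, respondents, hours_per_response, total_hours)
```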

Table A.5. Total burden requested under this information collection

| Instrument | Total/annual number of respondents | Number of responses per respondent | Average burden hours per response | Annual burden hours | Average hourly wage | Total annual cost |
| --- | --- | --- | --- | --- | --- | --- |
| Center recruitment and engagement call script (Part 1) | 72 | 1 | 0.33 | 24 | $38.88 | $933.12 |
| Center recruitment and engagement call script (Part 2) | 24 | 1 | 0.42 | 10 | $38.88 | $388.80 |
| Self-administered questionnaire (SAQ) | 24 | 1 | 3.5 | 84 | $38.88 | $3,265.92 |
| SAQ cognitive interview protocol | 24 | 1 | 0.5 | 12 | $38.88 | $466.56 |
| Implementation interview protocol | 48 | 1 | 2.5 | 120 | $38.88 | $4,665.60 |
| Cost workbooks | 24 | 1 | 6.5 | 156 | $38.88 | $6,065.28 |
| Cost interview | 24 | 1 | 1.5 | 36 | $38.88 | $1,399.68 |
| Time-use surveys | 312 | 1 | 0.75 | 234 | $18.77 | $4,392.18 |
| Estimated annual burden total | | | | 676 | | $21,577.14 |
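The totals in Table A.5 can be checked with a short Python sketch. It assumes, as the table's figures suggest, that annual burden hours are respondents × responses per respondent × hours per response, rounded to whole hours before being multiplied by the hourly wage. The instrument labels and the helper name `annual_hours` are illustrative. `Decimal` is used so that dollar amounts carry no floating-point error.

```python
from decimal import Decimal, ROUND_HALF_UP

DIRECTOR_WAGE = Decimal("38.88")  # wage for directors, education and financial managers
TIME_USE_WAGE = Decimal("18.77")  # weighted average wage for the time-use survey

# (instrument, respondents, responses per respondent, hours per response, wage)
ROWS = [
    ("Call script, Part 1", 72, 1, Decimal("0.33"), DIRECTOR_WAGE),
    ("Call script, Part 2", 24, 1, Decimal("0.42"), DIRECTOR_WAGE),
    ("SAQ", 24, 1, Decimal("3.5"), DIRECTOR_WAGE),
    ("SAQ cognitive interview", 24, 1, Decimal("0.5"), DIRECTOR_WAGE),
    ("Implementation interview", 48, 1, Decimal("2.5"), DIRECTOR_WAGE),
    ("Cost workbooks", 24, 1, Decimal("6.5"), DIRECTOR_WAGE),
    ("Cost interview", 24, 1, Decimal("1.5"), DIRECTOR_WAGE),
    ("Time-use surveys", 312, 1, Decimal("0.75"), TIME_USE_WAGE),
]

def annual_hours(respondents: int, responses: int, hours: Decimal) -> Decimal:
    """Respondents x responses x hours per response, rounded to whole hours (assumed)."""
    return (respondents * responses * hours).quantize(Decimal("1"), rounding=ROUND_HALF_UP)

total_hours = Decimal(0)
total_cost = Decimal(0)
for name, n, r, h, wage in ROWS:
    hrs = annual_hours(n, r, h)
    cost = hrs * wage  # total annual cost = annual burden hours x hourly wage
    total_hours += hrs
    total_cost += cost
    print(f"{name:<28}{hrs:>6}  ${cost:>10,.2f}")

print(f"{'Total':<28}{total_hours:>6}  ${total_cost:>10,.2f}")
```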

Total Annual Cost

Average hourly wage estimates for deriving total annual costs are based on data from the Bureau of Labor Statistics, Current Employment Statistics Survey (2013). For each instrument included in Table A.5, the total annual cost is calculated by multiplying the annual burden hours by the average hourly wage.
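Stated as a formula, with notation introduced here only for illustration (for instrument i: n_i respondents, r_i responses per respondent, t_i average burden hours per response, and w_i average hourly wage):

```latex
\[
H_i = n_i \, r_i \, t_i, \qquad C_i = H_i \, w_i, \qquad C = \sum_i C_i
\]
```

For example, for the cost workbooks, H = 24 × 1 × 6.5 = 156 hours and C = 156 × $38.88 = $6,065.28. Table A.5 appears to report H_i rounded to whole hours (for instance, 72 × 0.33 = 23.76 is shown as 24).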

For center directors, education managers, and financial managers, we use the median weekly earnings of full-time employees over the age of 25 with a degree higher than a bachelor’s degree, expressed as an hourly wage ($38.88); this rate applies to all data collection tools except the time-use survey. For child care providers and assistants, we use the mean hourly wage for child care providers ($15.11). For the time-use survey, we calculated a weighted average hourly wage based on 2 staff (an administrator and an education specialist) at $38.88 and 11 child care staff at $15.11, for an average of $18.77.
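The $18.77 figure is a headcount-weighted average of the two wage rates. A minimal Python sketch of that calculation, assuming each of the 13 time-use respondents at a center carries equal weight:

```python
# Illustrative sketch: weighted average hourly wage for the time-use survey.
# Staff counts and wage rates are those given in the text.
groups = [
    (2, 38.88),   # administrator and education specialist
    (11, 15.11),  # teachers, assistant teachers, and aides
]

total_staff = sum(n for n, _ in groups)
weighted_wage = sum(n * wage for n, wage in groups) / total_staff

print(f"${weighted_wage:.2f} per hour across {total_staff} staff")
```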

A13. Cost Burden to Respondents or Record Keepers

There are no additional costs to respondents.

A14. Estimate of Cost to the Federal Government

The total annual cost for the data collection activities under this request will be $228,127. This includes direct and indirect costs of data collection.

A15. Change in Burden

This is additional data collection under generic clearance 0970-0355.

A16. Plan and Time Schedule for Information Collection, Tabulation and Publication

Analysis will start with preliminary coding of data from each instrument based on constructs from the conceptual framework (for example, key functions such as instruction and caregiving, or implementation activities such as coaching). Given the large amount of qualitative data being collected in Phase 1, the study team will use qualitative data analysis software such as ATLAS.ti to organize the data. Items from the SAQ, cost workbook, and time-use surveys will be coded, as will notes from the implementation and cost interviews.

The goal of this phase is to assess the usefulness of the data collection tools and to refine them so that they are as clear and easy as possible for respondents to answer. The study team will use the results of the Phase 1 analysis to refine the instruments for Phases 2 and 3. For example, the implementation interviews might uncover aspects of key functions not yet captured; these could be added as questions to the SAQ for Phases 2 and 3.

The schedule for all three phases is shown in Table A.6 below. As stated earlier, the study team will not publicly report data from Phase 1, although methodological findings of interest may be described in publications related to the development of the final instruments.

Table A.6. Multi-case study schedule

| Task | Date |
| --- | --- |
| State engagement and center selection | September 2015–October 2015 |
| Phase 1 data collection | November 2015–March 2016 |
| Submit OMB package for Phases 2 and 3 | April 2016 |
| Phase 2 data collection | September 2016–November 2016 |
| Phase 3 data collection | January 2017–April 2017 |
| Multi-case study report | November 2017 |

A17. Reasons Not to Display OMB Expiration Date

All instruments will display the expiration date for OMB approval.

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions are necessary for this information collection.

REFERENCES

Caronongan, P., G. Kirby, K. Boller, E. Modlin, and J. Lyskawa. “Assessing the Implementation and Cost of High Quality Early Care and Education: A Review of the Literature.” Cambridge, MA: Mathematica Policy Research, forthcoming.

1 The ECE-ICHQ conceptual framework includes six key functions: (1) instruction and caregiving; (2) workforce development; (3) leadership activities, planning, and evaluation; (4) center administration; (5) family support; and (6) instructional planning and child assessment.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | ECE OMB COLLECTION REQUEST |

| Subject | OMB |

| Author | MATHEMATICA STAFF |

| File Modified | 0000-00-00 |

| File Created | 2021-01-25 |
