Part B - Quantitative Supporting Statement (Fresh Empire Campaign.Wave 2

Generic Clearance for the Collection of Quantitative Data on Tobacco Products and Communications

OMB: 0910-0810

Fresh Empire Campaign: Wave 2 Quantitative Study of Reactions to Rough-Cut Advertising Designed to Prevent Youth Tobacco Use

Supporting Statement: Part B

B. STATISTICAL METHODS

Respondent Universe and Sampling Methods

The one-time actual burden figures are listed in Tables 4 & 5, Part A.

The primary outcome of this study will be based on a non-random sample of 855 youth ages 12-17, who are cigarette experimenters or at-risk non-triers and who are influenced by the Hip Hop peer crowd. The study is a cross-sectional design, and participants will be recruited in-person from middle and high schools across the US, and via targeted social media advertisements. The screening criteria are based on age, smoking status, intention to smoke in the future, residence within the geographic target, and Hip Hop peer crowd influence. As this study is considered part of formative research for campaign development and planning, these methods are not intended to generate nationally representative samples or precise estimates of population parameters. The sample drawn here is designed primarily to provide information on the perceived effectiveness of 4 video ads for FDA’s Fresh Empire Campaign and to identify any potential unintended consequences of viewing the ads.

Sampling Methods

This study will utilize two recruitment methods: (1) in-person recruitment at middle and high schools; and (2) social media recruitment using targeted advertisements.

In-Person Recruitment

In-person recruitment will occur in middle and high schools across the US that have been selected based on the density of the target audience and the goal of building geographic diversity into the sample. The list of recruited schools can be found in Attachment N. Permission to conduct research at each school will be obtained through their respective principals and/or school districts as necessary. Researchers will submit IRB approval documentation to principals in order to clarify the goals of the research and inform them of privacy assurances. School officials will be given assurance that school and student-level information will be protected and that data will only be reported in aggregate form (i.e., no data will be presented that can be traced back to a particular school or student). Researchers will also provide information to school officials regarding the Protection of Pupil Rights Amendment (PPRA) and their obligation to inform parents of research activities on campus. Once permission to conduct research at schools is established, researchers will schedule a site visit to recruit participants onsite during lunch periods. During each lunch period, researchers will approach youth, introduce themselves, and explain that they will be conducting a study regarding teen culture and health. If the student is interested and available, the researcher will explain that students are selected via a Screener that they can take immediately. In a non-random fashion, researchers will sample as many students as possible from every area of the lunchroom. Researchers will never turn away youth who ask to complete a Screener.

The Screener will be completed electronically on a password-protected tablet (Attachment A). A paper version of the survey will also be available in case of technical difficulties with a tablet (Attachment B).

The Screener will collect the following information in two phases:

Phase 1

Demographic information: age (for verification of 12-17 age range), race/ethnicity, and sex;

Self-reported cigarette use or susceptibility to cigarette use: number of cigarettes smoked per lifetime, a series of validated questions assessing susceptibility to cigarette use;

Battery of non-tobacco related behavioral questions utilized as “distractors” to help minimize non-response due to perceived tobacco-related nature of research;

Questions related to previous research participation and tobacco industry affiliation;

Assessment of Hip Hop peer crowd influence.

Phase 2

Identifying information for recruitment coordination: youth name, last classroom on day of recruitment (to notify selected participants), last classroom on the study day (for final study reminder), cell or home phone number (to text message or call the evening of recruitment to confirm study participation and to remind students of the location the night before participation), and youth email address (to complete the Questionnaire on their own device if they do not attend the study session).

Youth will be notified at the end of Phase 1 if they did not qualify in order to prevent collection of unnecessary data from ineligible youth. If youth qualify after Phase 1, they will automatically continue on to Phase 2 questions. This approach ensures that identifying information is only collected if youth qualify to complete the Questionnaire.
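The two-phase flow described above can be sketched in code. This is a minimal illustration only, not the fielded instrument: the boolean fields on `Phase1Answers` are hypothetical summaries of the Screener items, treating the research-participation and industry-affiliation items as exclusions is an assumption, and `collect_contact_info` is a placeholder for whatever routine presents the Phase 2 questions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Phase1Answers:
    age: int
    experimenter_or_at_risk: bool  # hypothetical summary of the smoking-status items
    hip_hop_influenced: bool       # hypothetical summary of the peer crowd assessment
    passes_exclusions: bool        # assumption: prior-research / industry items act as exclusions


def qualifies(p1: Phase1Answers) -> bool:
    """Return True when the Phase 1 answers meet every screening criterion."""
    return (
        12 <= p1.age <= 17
        and p1.experimenter_or_at_risk
        and p1.hip_hop_influenced
        and p1.passes_exclusions
    )


def run_screener(
    p1: Phase1Answers, collect_contact_info: Callable[[], dict]
) -> Optional[dict]:
    """Ask for Phase 2 identifying information only when the youth qualifies."""
    if not qualifies(p1):
        return None  # youth is notified of ineligibility; Phase 2 is never shown
    return collect_contact_info()
```

Because `collect_contact_info` is called only after `qualifies` returns True, identifying information is never requested from ineligible youth, which is the property the text emphasizes.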

Youth will be notified of their qualification on the same day either during lunch or their last class period with a written notification, and reminded the day of the study session with a written note. Researchers will invite qualified youth to attend an after school study session. Youth will be reminded of the date and location of their study session via text message or phone call on the evening following recruitment and on the evening before the study session. Eligible youth ages 13-17 who do not attend an in-person study session will be emailed the study link and up to 2 reminders as needed to complete the Questionnaire online on their own device.

Social Media Recruitment

In-school data collection can be time-consuming and is dependent on the individual school’s schedule. For that reason, recruitment via social media is being conducted to supplement the in-school sample. Advertising through social media platforms, such as Facebook, can help increase the diversity of the study sample and increase representation of traditionally underrepresented groups, including racial/ethnic minorities (Lane, Armin, & Gordon, 2015; Graham et al., 2008). Data also suggest that social media engagement among multicultural youth ages 12-17 is high. For example, a recent online survey found that 71% of US teens ages 13-17 use Facebook, including 75% of African American teens and 70% of Hispanic teens (Pew Research Center, 2015). For many social media platforms, ad targeting can be adjusted in real time, allowing researchers to react to shifting recruitment needs if a particular demographic is lacking in the overall sample. Social media advertisements may be deployed based on factors such as age, geographic location within or around a target campaign city, and interest in Hip Hop cultural pages or hashtags. Respondents who click on any social media sponsored ad (Attachment K) will be redirected to the Screener splash page. Youth who complete the Screener (Attachment C) and are identified as eligible will be asked to provide a parent’s email address, for parental opt-out notification, and will receive a link via email and text message to the Questionnaire no less than 24 hours later to allow sufficient time for parental opt-out.

Screening and qualification criteria for participants recruited online are the same as those listed above for in-person recruitment, with the following differences:

Phase 1:

Demographics:

Participant zip code will be collected. This will be used to ensure that participants are within determined geographic targets for the study.

Participants aged 12 will not qualify to complete the Questionnaire if they are recruited via social media, to comply with Children’s Online Privacy Protection Act (COPPA) regulations.

Email verification:

Youth email address will be collected to check against all current respondent data to avoid duplicates and reduce fraudulent activity.

Phase 2:

Identifying Information:

Youth name and last period class information will not be collected from youth who complete the Screener online.

Youth will be required to provide an email address for their parent. This will be used to email the Parental Opt-Out Form to parents of eligible youth. Parental email address will not be used for any other purpose.

IP addresses will be collected automatically as part of the Screener completion process to avoid duplication (i.e. ensure that no entry with same IP address already exists) and as a verification of participant country of origin (should be within US).

Incentive:

Youth who complete the Screener and qualify will receive a $5 electronic gift card pre-paid incentive to demonstrate the legitimacy of the study and reduce drop off between recruitment and Questionnaire completion. This represents a split incentive strategy and will be implemented along with an additional $20 electronic gift card post-paid incentive that participants will receive after completing and submitting the Questionnaire.
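The duplicate checks on youth email address and IP address described under Phase 1 and Phase 2 above could be implemented along the following lines. This is a sketch under stated assumptions: the class name `DuplicateChecker`, its methods, and the email normalization (trimming whitespace and lowercasing) are all hypothetical, and the verification that an IP address originates in the US is not covered here.

```python
class DuplicateChecker:
    """Tracks emails and IP addresses already seen among Screener entries."""

    def __init__(self) -> None:
        self._emails: set[str] = set()
        self._ips: set[str] = set()

    @staticmethod
    def _normalize_email(email: str) -> str:
        # Assumption: addresses differing only in case or padding are the same person.
        return email.strip().lower()

    def is_duplicate(self, email: str, ip: str) -> bool:
        """Return True if either the email or the IP address was seen before."""
        return self._normalize_email(email) in self._emails or ip in self._ips

    def register(self, email: str, ip: str) -> None:
        """Record a new Screener entry so later entries can be checked against it."""
        self._emails.add(self._normalize_email(email))
        self._ips.add(ip)
```

One design consideration: a strict IP match will also flag distinct youth who share a household or school network, so a fielded system might route such cases to manual review rather than rejecting them outright.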

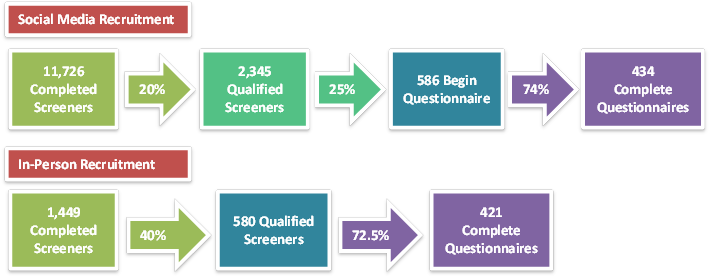

Sample Size

To obtain a final sample of 855 youth ages 12-17 who have experimented with smoking or are susceptible to smoking in the future and who are influenced by the Hip Hop peer crowd, we estimate that we will need to screen approximately 13,175 potential respondents. This estimate was developed using Rescue SCG’s extensive experience conducting research with this target audience and using similar methodologies. We estimate that approximately 20.0% of youth screened via social media and 40.0% of those screened in-person will qualify to complete the Questionnaire. We anticipate that approximately 25.0% of qualified youth recruited via social media will click the link to return to the Questionnaire after the 24-hour parental opt-out period, and approximately 74.0% of those who click the link will complete and submit the Questionnaire. For in-person recruitment, we estimate that approximately 72.5% of qualified youth will attend a study session or click on the emailed link and complete and submit the Questionnaire. Attrition and sample size are explained in Table 8.
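The attrition rates quoted above multiply through to an expected number of completed Questionnaires per screened youth. The sketch below works through that arithmetic; the function and constant names are illustrative, and the screening counts passed in are hypothetical inputs rather than the planned allocation.

```python
# Rates quoted in the Sample Size paragraph.
SOCIAL_QUALIFY = 0.200      # screened via social media who qualify
SOCIAL_CLICK = 0.250        # qualified youth who return after the opt-out period
SOCIAL_COMPLETE = 0.740     # returning youth who complete and submit
IN_PERSON_QUALIFY = 0.400   # screened in person who qualify
IN_PERSON_COMPLETE = 0.725  # qualified youth who complete and submit


def social_yield() -> float:
    """Expected completes per youth screened via social media (about 3.7%)."""
    return SOCIAL_QUALIFY * SOCIAL_CLICK * SOCIAL_COMPLETE


def in_person_yield() -> float:
    """Expected completes per youth screened in person (29%)."""
    return IN_PERSON_QUALIFY * IN_PERSON_COMPLETE


def expected_completes(screened_social: int, screened_in_person: int) -> float:
    """Expected completed Questionnaires for a given (hypothetical) screening split."""
    return screened_social * social_yield() + screened_in_person * in_person_yield()
```

As an illustration only, `expected_completes(11722, 1453)` totals 13,175 screens and returns roughly 855 completes under these rates. That split is an arithmetic example, not a figure taken from the sample size table.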

Table 8. Sample Size for Wave 2 Copy Testing Questionnaire

Procedures for the Collection of Information

This section describes the procedures for the data collection. The study will utilize two Questionnaire completion methods: (1) in-person during an after school study session held on school campus; and (2) online completed on the participant’s own device such as a mobile phone or computer. All surveys will be conducted using a self-administered, electronic Questionnaire.

Summary of Protocol

The list of study procedures is as follows:

In-person data collection will be conducted during an after school study session held in a classroom setting at the school campus where participants were recruited. Participants will complete all activities individually with minimal assistance from a researcher as necessary. Each participant will be assigned a set of headphones and a tablet or desktop computer station on which they will view ads and provide individual feedback. Participants will be asked not to interact with each other or discuss the study stimuli. A supervisor and up to 3 researchers will facilitate each study session.

Youth ages 13-17 who are invited to but do not attend a study session will receive an email with details on how to access the Questionnaire from their own device, including a unique link to the Questionnaire. Youth 12 years of age who are invited to but do not attend a study session will not be invited to complete the Questionnaire on their own device, to comply with COPPA regulations. Youth who do not attend their assigned study session and have not completed the Questionnaire within 24 hours after receiving the link via email will receive up to 2 reminder emails with the study link.

Online data collection will be completed by youth independently, on their own electronic devices, such as a mobile phone or home computer. Eligible youth will be emailed and text messaged a link to complete the Questionnaire on their own devices after a 24-hour waiting period to allow for parental opt-out. Qualified participants whose parents do not opt them out, and who have not completed the Questionnaire within 24 hours after initially receiving the study link will receive up to 2 reminders. The reminders will be in the form of an email and text message containing the study link.

All youth who begin the Questionnaire will be randomly assigned to the ad-viewing group or the control group. Youth in the ad-viewing group will be randomly assigned to view 2 of the 4 video ads being tested (Attachment L). Table 9 indicates the variables to be assessed during the Questionnaire and the participant groups that will be exposed to these survey items.

Table 9. Structure of the Copy Testing Process and Questionnaire

| Action or Variable | Description | Presented to Ad-Viewing Participants | Presented to Control Participants |
| --- | --- | --- | --- |
| Ad exposure | Each of the ad-viewing participants will view 2 unique video ads. | X | |
| Tobacco use and peer tobacco use | Items on household tobacco use, peer cigarette use, and participant past 30-day tobacco use. | X | X |
| Perceived ad effectiveness | Items to assess ad effectiveness, presented immediately following each video ad. | X | |
| Tobacco-related attitudes, beliefs and risk perceptions | Items tailored to align with the tobacco facts chosen for inclusion in the video ads. Items assessing participants’ attitudes, beliefs, and risk perceptions related to tobacco use. | X | X |
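The random assignment described in this section (ad-viewing versus control, then 2 of the 4 ads for ad-viewing participants) can be sketched as follows. The allocation ratio is not stated in this document, so the default `p_ad_viewing=0.5` is purely an assumption, and the ad labels are placeholders for the 4 video ads in Attachment L.

```python
import random

ADS = ("ad_1", "ad_2", "ad_3", "ad_4")  # placeholder labels for the 4 video ads


def assign_participant(rng: random.Random, p_ad_viewing: float = 0.5) -> dict:
    """Randomly assign one participant to a group and, if ad-viewing, to 2 distinct ads.

    p_ad_viewing is an assumed allocation probability; the source does not specify one.
    """
    if rng.random() < p_ad_viewing:
        # sample() draws without replacement, so the 2 ads are always different.
        return {"group": "ad_viewing", "ads": rng.sample(ADS, k=2)}
    return {"group": "control", "ads": []}
```

Passing an explicit `random.Random` instance (for example, `random.Random(2016)`) keeps assignments reproducible for testing, while a fielded survey platform would typically supply its own randomization.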

Unusual Problems Requiring Specialized Sampling Procedures

No specialized sampling procedures are involved.

Use of Periodic Data Collection Cycles to Reduce Burden

This is a one-time survey data collection effort.

Methods to Maximize Response Rates

General Methods to Reduce Non-Response & Drop-Off

Several features of this study have been designed to maximize participant response rate and Questionnaire completion across recruitment methods.

Incentives: As participants often have competing demands for their time, incentives are used to encourage participation in research. Numerous empirical studies have shown that incentives can significantly increase response rates in cross-sectional surveys and reduce attrition in longitudinal surveys (e.g., Abreu & Winters, 1999; Castiglioni, Pforr, & Krieger, 2008; Jäckle & Lynn, 2008; Shettle & Mooney, 1999; Singer, 2002). In this study, we will use incentives totaling $25 per participant, so that the incentive is equal across modes of recruitment. The purpose of the incentive is to provide enough motivation for youth to participate in the study rather than in another activity.

Reminders: A series of reminders will be utilized. Youth recruited in-person will receive a reminder text message or call on the night of recruitment and the night before their study session, and will receive a paper reminder note during their last period class on the day of their study session. These reminders will include the time and location of their study session, to ensure youth have all necessary information to participate. Youth recruited via social media, and youth ages 13-17 who are recruited in-person but do not attend a study session, will receive 2 reminders via email and text message after receiving an initial invitation to complete the Questionnaire. These reminder messages will include a unique link to the survey, to enable youth to easily complete the Questionnaire. These reminders are intended to decrease non-response by ensuring youth have the necessary information to complete the Questionnaire, and by encouraging youth who do not initially complete the Questionnaire to complete it before the conclusion of data collection.

Parental Opt-Out: A parental opt-out approach will be utilized for youth ages 13-17. Due to the target population of this study, traditional written parental consent procedures would discourage participation among the very participants most appropriate for the aims of this study. Many youth who smoke or are at-risk for smoking are unlikely to seek out parental consent or have parents who provide written consent for their children’s participation in prevention programs (Levine, 1995; Pokorny et al., 2001; Unger et al., 2004; Severson and Ary, 1983). Demonstrating this point, there is consistent evidence of quantifiable differences in the characteristics of youth who participate in smoking cessation research when traditional written consent is required compared to waived parental consent, including participant demographics and smoking history (Kearney et al., 1983; Anderman et al., 1995; Severson and Ary, 1983). Utilizing a parental opt-out approach will remove a barrier that might discourage the target audience from returning to complete the Questionnaire, thereby reducing non-response.

Mobile Phone Responsiveness: Both the Screener and Questionnaire will be optimized for performance on a mobile phone, in addition to other electronic devices such as tablets and laptops. This is especially important as 73% of US teens ages 13-17 report having or having access to a smartphone, and 91% of teens report going online using a mobile device at least occasionally (Pew Research Center, 2015). Based on this information and Rescue SCG’s previous experience with online data collection, we expect that many youth will attempt to complete the Screener and Questionnaire on a mobile phone. Ensuring that the surveys are optimized for mobile phone performance will reduce non-response and drop-off due to technical issues related to compatibility of the instruments with the mobile phone format.

Methods to Reduce In-Person Recruitment Non-Response & Drop-Off

Two methods specific to in-person recruitment and data collection will be implemented to encourage completion.

Location of Study Sessions: Study sessions for youth recruited in-person to complete the Questionnaire will be conducted after school, at the school from which participants were recruited. This setting and timing will make participation convenient, as youth will already be at the location of the study session on the day and time of the session. Increasing convenience of Questionnaire completion by bringing data collection to youth in a location where they will already be should reduce non-response and drop-off.

Online Completion Option: Youth ages 13-17 recruited in-person who do not attend their assigned study session, and whose parents have not opted them out of participation, will be emailed a link to complete the Questionnaire online on their own device. This will reduce drop-off among qualified youth recruited in-person, by providing non-respondents an opportunity to complete the Questionnaire if they are unable to stay after school for the study session.

Methods to Reduce Social Media Recruitment Non-Response & Drop-Off

Several methods specific to social media recruitment and data collection will be implemented to encourage completion.

Targeted Social Media Advertising: The social media advertisement campaign (Attachment K) will utilize specially developed ads and take advantage of social media platform targeting capabilities to deliver relatable advertisements to youth who are most likely to qualify for the study. Social media ads will feature images and copy designed to appeal to Hip Hop youth, in order to increase interest and the likelihood of completion. Additionally, these social media ads will be delivered to youth most likely to be in the target audience via targeted advertisement delivery methods available in social media platforms. Using features of the social media platform such as demographic information from profiles and “likes” or hashtags, advertisements can be delivered to youth who are most likely to be within the age range, living within the determined geographic targets, and interested in Hip Hop culture. This methodology will ensure that social media advertisements are delivered to youth who are most likely to qualify, and that those youth feel a personal connection with the study, as a way to increase response rates.

Social Media Advertising Responsiveness: Adjustments in social media ads, including ad image and copy, will be utilized to maximize response. At any given time, low-performing ads will be removed from circulation. Ad performance will be assessed and compared overall, and segmented by device (i.e. mobile or desktop). Copy and images included in Attachment K will be introduced to the campaign and recombined in unique ways in response to ad performance. Additionally, advertising targeting may be revised to expand interest-based targeting to increase the sampling pool. These methods are benefits of the social media advertising recruitment approach, and allow researchers to react in real time to low response and qualification rates by adjusting the social media advertising and targeting to better reach the target audience and improve response rates.

Pre-Paid Incentive: For participants recruited via social media, a split incentive approach will be utilized. Youth recruited via social media who complete the Screener and qualify to participate will receive a $5 electronic gift card upon qualification. Those who then return after the 24-hour parental opt-out window and complete and submit the Questionnaire will receive an additional $20 electronic gift card, for a total of $25 in electronic gift card incentives. A pre-paid incentive has been shown to increase response rates for surveys (Messer et al., 2011; Coughlin et al., 2013; Gajic, Cameron, & Hurley, 2012; Dirmaier et al., 2007; Ulrich et al., 2005; Stevenson et al., 2011), and to be effective when used in conjunction with a post-paid incentive (OMB Control No. 0920-0805, Report on Incentives). In some cases, pre-paid incentives have also been demonstrated to increase response rates among racial and ethnic minorities (Beebe et al., 2005; Dykema et al., 2012). Additionally, a $5 pre-paid incentive seems to be effective at maximizing participation, compared to other incentive amounts (Warriner et al., 1996; Asch et al., 1998; Han et al., 2012; Montaquila et al., 2013; Dykema et al., 2012). Based on this information, it is believed that a $5 prepaid incentive for youth recruited via social media will help to reduce attrition after the 24-hour parental opt-out period.

Tests of Procedures or Methods

The campaign contractor Rescue SCG will conduct rigorous internal testing of the electronic survey instruments prior to their fielding. Trained researchers will review the Screeners and Questionnaire to verify that instrument skip patterns are functioning properly, delivery of campaign media materials is working properly, and that all survey questions are worded correctly and are in accordance with the instrument approved by OMB.

Individuals Involved in Statistical Consultation and Information Collection

The following individuals inside the agency have been consulted on the design of the copy testing plan, survey development, or intra-agency coordination of information collection efforts:

Tesfa Alexander

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993-0002

Phone: 301-796-9335

E-mail: Tesfa.Alexander@fda.hhs.gov

Gem Benoza

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-402-0088

E-mail: Maria.Benoza@fda.hhs.gov

Matthew Walker

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-402-3824

E-mail: Matthew.Walker@fda.hhs.gov

Leah Hoffman

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-743-1777

E-mail: Leah.Hoffman@fda.hhs.gov

Atanaska (Nasi) Dineva

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993-0002

Phone: 301-796-4498

E-mail: Atanaska.Dineva@fda.hhs.gov

Chaunetta Jones

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993-0002

Phone: 240-402-0427

E-mail: Chauentta.Jones@fda.hhs.gov

The following individuals outside of the agency have been consulted on questionnaire development.

Dana Wagner

Rescue Social Change Group

660 Pennsylvania Ave SE, Suite 400

Washington, DC 20003

Phone: 619-231-7555 x 331

Email: dana@rescuescg.com

Carolyn Stalgaitis

Rescue Social Change Group

660 Pennsylvania Ave SE, Suite 400

Washington, DC 20003

Phone: 619-231-7555 x 313

Email: carolyn@rescuescg.com

Mayo Djakaria

Rescue Social Change Group

660 Pennsylvania Avenue SE, Suite 400

Washington, DC 20003

Phone: 619-231-7555 x 120

Email: mayo@rescuescg.com

Xiaoquan Zhao

Department of Communication

George Mason University

Robinson Hall A, Room 307B

4400 University Drive, 3D6

Fairfax, VA 22030

Phone: 703-993-4008

E-mail: xzhao3@gmu.edu

References

Abreu, D. A., & Winters, F. (1999). Using monetary incentives to reduce attrition in the survey of income and program participation. In Proceedings of the Survey Research Methods Section (pp. 533-538).

Anderman, C., Cheadle, A., Curry, S., Diehr, P., Shultz, L., & Wagner, E. (1995). Selection bias related to parental consent in school-based survey research. Evaluation Review, 19, 663–674.

Asch, D. A., Christakis, N. A., & Ubel, P. A. (1998). Conducting physician mail surveys on a limited budget: a randomized trial comparing $2 bill versus $5 bill incentives. Medical Care, 36(1), 95-99.

Beebe, T. J., Davern, M. E., McAlpine, D. D., Call, K. T., & Rockwood, T. H. (2005). Increasing response rates in a survey of Medicaid enrollees: the effect of a pre-paid monetary incentive and mixed modes (mail and telephone). Medical Care, 43(4), 411-414.

Castiglioni, L., Pforr, K., & Krieger, U. (2008, December). The effect of incentives on response rates and panel attrition: Results of a controlled experiment. In Survey Research Methods (Vol. 2, No. 3, pp. 151-158).

Coughlin, S. S., Aliaga, P., Barth, S., Eber, S., Maillard, J., Mahan, C., & Williams, M. (2013). The effectiveness of a monetary incentive on response rates in a survey of recent US veterans. Survey Practice, 4(1).

Dirmaier, J., Harfst, T., Koch, U., & Schulz, H. (2007). Incentives increased return rates but did not influence partial nonresponse or treatment outcome in a randomized trial. Journal of Clinical Epidemiology, 60(12), 1263-1270.

Dykema, J., Stevenson, J., Kniss, C., Kvale, K., Gonzalez, K., & Cautley, E. (2012). Use of monetary and nonmonetary incentives to increase response rates among African Americans in the Wisconsin Pregnancy Risk Assessment Monitoring System. Maternal and Child Health Journal, 16(4), 785-791.

Gajic, A., Cameron, D., & Hurley, J. (2012). The cost-effectiveness of cash versus lottery incentives for a web-based, stated-preference community survey. The European Journal of Health Economics, 13(6), 789-799.

Graham, A. L., Milner, P., Saul, J. E., & Pfaff, L. (2008). Online advertising as a public health and recruitment tool: comparison of different media campaigns to increase demand for smoking cessation interventions. Journal of Medical Internet Research, 10(5), e50.

Han, D., Montaquila, J. M., & Brick, J. M. (2012). An Evaluation of Incentive Experiments in a Two-Phase Address-Based Sample Mail Survey. In Proceedings of the Survey Research Methods Section of the American Statistical Association.

Jäckle, A., & Lynn, P. (2008). Offre de primes d’encouragement aux répondants dans une enquête par panel multimodes: effets cumulatifs sur la non-réponse et le biais. Techniques d’enquête, 34(1), 115-130.

Kearney, K.A., Hopkins, R.H., Mauss, A.L., & Weisheit, R.A. (1983). Sample bias resulting from a requirement for written parental consent. Public Opinion Quarterly, 47, 96-102.

Lane, T.S., Armin, J., & Gordon, J. S. (2015). Online recruitment methods for web-based and mobile health studies: A review of the literature. Journal of Medical Internet Research, 17(7), e183.

Levine, R.J. (1995). Adolescents as research subjects without permission of their parents or guardians: Ethical considerations. Journal of Adolescent Health, 17(5), 289-297.

Messer, B. L., & Dillman, D. A. (2011). Surveying the general public over the internet using address-based sampling and mail contact procedures. Public Opinion Quarterly, 75(3), 429-457.

Montaquila, J. M., Brick, J. M., Williams, D., Kim, K., & Han, D. (2013). A study of two-phase mail survey data collection methods. Journal of Survey Statistics and Methodology, 1(1), 66-87.

Pew Research Center. (2015). Teens, Social Media & Technology Overview 2015. Washington, DC: Pew Research Center.

Pokorny, S. B., Jason, L. A., Schoeny, M. E., Townsend, S. M., & Curie, C. J. (2001). Do participation rates change when active consent procedures replace passive consent. Evaluation Review, 25(5), 567-580.

Racial and Ethnic Approaches to Community Health Across the U.S. (REACH U.S.) Evaluation. OMB CONTROL NUMBER: 0920-0805 Report on Incentive Experiments www.reginfo.gov/public/do/DownloadDocument?objectID=30165501. Accessed on February 1, 2016.

Severson, H.H., & Ary, D.V. (1983). Sampling bias due to consent procedures with adolescents. Addictive Behaviors, 8(4), 433–437.

Shettle, C., & Mooney, G. (1999). Monetary incentives in US government surveys. Journal of Official Statistics, 15(2), 231.

Singer, E. (2002). The use of incentives to reduce nonresponse in household surveys. Survey nonresponse, 51, 163-177.

Stevenson, J., Dykema, J., Kniss, C., Black, P., & Moberg, D. P. (2011). Effects of mode and incentives on response rates, costs and response quality in a mixed mode survey of alcohol use among young adults. In annual meeting of the American Association for Public Opinion Research, May, Phoenix, AZ.

Ulrich, C.M., Danis, M., Koziol, D., Garrett-Mayer, E., Hubbard, R., & Grady, C. (2005). Does it pay to pay? A randomized trial of prepaid financial incentives and lottery incentives in surveys of nonphysician healthcare professionals. Nursing Research, 54(3), 178-183.

Unger, J.B., Gallaher, P., Palmer, P.H., Baezconde-Garbanati, L., Trinidad, T.R., Cen, S., & Johnson, C.A. (2004). No news is bad news: Characteristics of adolescents who provide neither parental consent nor refusal for participation in school-based survey research. Evaluation Review, 28(1), 52-63.

Warriner, K., Goyder, J., Gjertsen, H., Hohner, P., & McSpurren, K. (1996). Charities, no, lotteries, no, cash, yes: Main effects and interactions in a Canadian incentives experiment. Paper presented at the Survey Non-Response Session of the Fourth International Social Science Methodology Conference, University of Essex, Institute for the Social Sciences, Colchester, UK.
