Section B-Supporting Statement for Cross-Site Evaluation of CB Child Welfare T-TA Centers


Cross-site Evaluation of the Children's Bureau's Child Welfare Technical Assistance Implementation Centers and National Child Welfare Resource Centers

OMB: 0970-0377


Section B: Collections of Information Employing Statistical Methods

Respondent universe and sampling methods 1

Agency Results Survey: The potential respondent universe for the Agency Results Survey includes Child Welfare Directors (or their designees) from all 50 States, the District of Columbia, and Puerto Rico, and the Directors from the 148 Tribes and 3 Territories (American Samoa, Guam, and the U.S. Virgin Islands) that currently receive Title IV-B funding and are eligible to receive T/TA from the ICs or NRCs. Nonprobability sampling strategies will be used, and two samples will be drawn. The first is a census of Child Welfare Directors (or their designees) from the 50 States, the District of Columbia, and the Commonwealth of Puerto Rico. This group comprises all agencies that are federally monitored through the Child and Family Services Review and entitled to receive T/TA services from the CB. A census is necessary to obtain the perceptions and perspectives of all 52 child welfare leaders about T/TA utilization and the services received for specific change initiatives in their respective child welfare systems.

A purposive sample of 22 Child Welfare/Social Service Directors from the 148 Tribes and 3 Territories receiving Title IV-B funding will be selected. These Tribes, Tribal Consortia, and Territories will represent diversity in several areas: (1) selection or approval for Children’s Bureau Implementation Projects in FY 2009; (2) geographical representation across ACF Regions I-X; and (3) amount of Title IV-B award (range is from $10,000 to $1 million).

Expert sampling will be used to identify the Indian and Territorial Child Welfare/Social Service Directors. 2 Selection of the Title IV-B sample will occur in conjunction with Children’s Bureau officials and ACF Regional Office staff. Any Tribal or Territorial community that declines participation in the study will be replaced with another Tribe or Territory that preserves the intended representative diversity.

A total of 74 State, Territorial, and Tribal Directors will be selected for survey administration. The baseline survey was administered in FY 2010 and the first follow-up was administered in FY 2012. The second follow-up will be administered in FY 2013 (estimated timeframe is June 2013).

Training and Technical Assistance (T/TA) Activity Survey: The target population for the T/TA Activity Survey consists of all State and Tribal recipients of T/TA delivered by the ICs or NRCs during the two designated reporting windows in each fiscal year (October through December, and April through June). A nonprobability strategy will be used to construct a sampling frame from the T/TA tracking system, OneNet. The frame will consist of electronic forms that record relevant program information. Each form will record either one or more T/TA activities delivered on a single day or one multi-day on-site activity, and one of the recorded activities will be designated the primary activity. Because we plan to collect information only about the primary activity, there is a one-to-one correspondence between forms and primary activities; the frame of forms is therefore equivalent to a frame of primary activities. The following information will be available for each form on the frame: type of provider (NRC or IC), mode of T/TA (on-site or other), recipient of T/TA (State or Tribe), and other information that may be used to contact the recipient. A census will be conducted of all eligible T/TA recipients.

We propose a census of onsite and offsite T/TA events at six-month intervals. Data will be collected every six months, and the activities occurring in the final three months of each six-month period will serve as the population from which the census is taken. To assist with respondent recall, offsite activities are further restricted: only those that occurred in the last six weeks of the three-month window will be included. Every six months, eligible forms within the prior three months (i.e., the reporting window) will be used to identify eligible recipients of onsite T/TA; eligible forms within the last six weeks of the reporting window will then be used to identify eligible recipients of offsite T/TA. The survey is administered to a census of T/TA recipients, rather than to a sample as previously proposed, for three reasons: CB and the evaluator have learned that a relatively small number of agency staff members are recorded as the persons knowledgeable about the T/TA received by the agency;3 the evaluation design restricts the number of surveys sent to an individual in any survey round (to minimize individual burden); and response rates have been lower than initially projected.
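The window rules above can be sketched as a filter over form records. This is a minimal illustration under stated assumptions, not the evaluation team's procedure: the `Form` record, its field names (including a single `activity_date` per form), and the exact boundary handling (offsite activities count if they fall in the 42 days ending on the last day of the reporting window) are hypothetical and do not describe the actual OneNet data model.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Form:
    """Hypothetical stand-in for a OneNet form; one form = one primary activity."""
    form_id: str
    provider: str        # "IC" or "NRC"
    mode: str            # "onsite" or "offsite"
    recipient: str       # "State" or "Tribe"
    contact_email: str
    activity_date: date  # date of the primary activity (assumed field)


def eligible_forms(forms, window_start, window_end):
    """Return (onsite, offsite) forms eligible for one survey round.

    Onsite: primary activity anywhere in the three-month reporting window.
    Offsite: primary activity only in the last six weeks of that window
    (assumed here to be the 42 days ending on window_end).
    """
    offsite_start = window_end - timedelta(weeks=6)
    onsite = [f for f in forms
              if f.mode == "onsite" and window_start <= f.activity_date <= window_end]
    offsite = [f for f in forms
               if f.mode == "offsite" and offsite_start < f.activity_date <= window_end]
    return onsite, offsite


# Hypothetical forms for an October-December reporting window.
forms = [
    Form("A1", "IC", "onsite", "State", "a@example.org", date(2012, 10, 15)),
    Form("A2", "NRC", "offsite", "Tribe", "b@example.org", date(2012, 10, 20)),
    Form("A3", "NRC", "offsite", "State", "c@example.org", date(2012, 12, 5)),
    Form("A4", "IC", "onsite", "Tribe", "d@example.org", date(2013, 1, 3)),
]
onsite, offsite = eligible_forms(forms, date(2012, 10, 1), date(2012, 12, 31))
print([f.form_id for f in onsite])   # ['A1']
print([f.form_id for f in offsite])  # ['A3']: A2 falls outside the last six weeks
```

Because each form maps to exactly one primary activity, filtering forms is the same as filtering primary activities; the design's per-person limit on surveys in a round would be applied as a separate step after this selection.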

To date, four waves of data have been collected using the T/TA Activity Survey—two during FY 2011 and two during FY 2012.

Web-Based Network Survey: The potential respondent universe for the Web-Based Network Survey is 15 individuals who serve as the Directors of the NRCs and the ICs, which are key provider organizations within the Children’s Bureau T/TA Network. A nonprobability sampling strategy will be used. A census will be conducted with regard to this population. The expected response rate is 100 percent. The baseline survey was administered in FY 2010 and a follow-up survey was administered in FY 2012.

No statistical methodology for stratification and sample selection will be used for the Agency Results Survey, T/TA Activity Survey, and the Web-Based Network Survey. For all three instruments, a census will be taken rather than drawing a sample, and thus power analyses are not relevant.

Agency Results Survey. To initiate the survey process for the administration in FY 2010, the CB sent an initial introductory letter to the CW directors of the 74 jurisdictions inviting them to participate in the ARS. Within a few weeks, the evaluation team followed with a letter explaining the context of the evaluation and the survey process. The cross-site team then undertook email and phone communication with the respondents in order to negotiate a mutually agreeable date and time for the one-hour interview. For the administration in FY 2012, introductory letters were no longer necessary because the evaluation team already had established relationships with CW directors and therefore sent email communication about scheduling a follow-up interview. The exception to this was when there had been turnover in jurisdictions and new CW directors were in place. In these situations, the CB sent an introductory letter and this was followed by a letter from the evaluation team. The same procedure will be used for the follow-up administration of the Agency Results Survey in FY 2013.

Two senior research staff members will participate in each telephone interview; one will serve as the lead in facilitating the interview and the other will take notes. Upon completion of the interview data collection, the cross-site team will assign a unique ID to each completed survey. The quantitative items will be coded and entered into SPSS. The data will be cleaned and inspected for missing data. The team will then examine frequency distributions and variability and prepare appropriate tabulations.
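The processing steps above (assigning unique IDs, checking for missing data, and examining frequency distributions) can be sketched in a few lines. The team uses SPSS; this Python version is only an illustrative stand-in under assumed conventions: responses are dictionaries of coded values, `None` marks a missing answer, and the `ARS-001` ID format is hypothetical.

```python
from collections import Counter


def process_responses(responses, items):
    """Assign IDs, count missing values per item, and tabulate frequencies.

    `responses` is a list of dicts mapping item name -> coded value;
    an absent key or a None value is treated as missing (assumed convention).
    """
    coded = []
    for i, resp in enumerate(responses, start=1):
        record = {"survey_id": f"ARS-{i:03d}"}  # hypothetical ID format
        record.update({item: resp.get(item) for item in items})
        coded.append(record)

    # Number of missing answers for each item.
    missing = {item: sum(1 for r in coded if r[item] is None) for item in items}
    # Frequency distribution of non-missing codes for each item.
    frequencies = {
        item: Counter(r[item] for r in coded if r[item] is not None)
        for item in items
    }
    return coded, missing, frequencies


# Hypothetical coded responses to two quantitative items.
responses = [{"q1": 4, "q2": 5}, {"q1": 4}, {"q1": 3, "q2": None}]
coded, missing, freqs = process_responses(responses, ["q1", "q2"])
print(missing)      # {'q1': 0, 'q2': 2}
print(freqs["q1"])  # Counter({4: 2, 3: 1})
```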

Similarly, the team will clean the qualitative data from the open-ended survey items and prepare them for qualitative analysis. For content analysis, ATLAS.ti software will be used to help elicit key information, themes, and patterns in the data. The team will review and code the responses to the open-ended questions, compile them, and identify and examine the relevant patterns and themes that emerge.

T/TA Activity Survey. Every six months, recipients of onsite T/TA will be sent e-mail messages (based on the contact information associated with the T/TA event in the OneNet system) inviting them to click on a link to the T/TA Activity Survey instrument, which is set up in the OneNet system. Respondents will complete the survey on the computer; when they save it, it is submitted electronically to the evaluation team. At least two follow-up reminder messages will be sent to T/TA recipients. The same procedures will be followed for offsite T/TA recipients: e-mail messages with a link to the survey instrument will be sent to them approximately two weeks after the message sent to recipients of onsite T/TA, followed by two reminder messages. Follow-up reminders are sent to all contacts every 10 calendar days.

Web-based Network Survey. The Web-based Network Survey was designed in Qualtrics, an e-survey software program. The directors of T/TA providers will be invited to participate in the baseline survey through an e-mail message introducing the survey and its purpose. They will be provided with their own unique link to the Web-based Network Survey, which will allow them to return to the survey instrument and revise their responses if necessary. Within a few weeks of sending the original invitation, the evaluation team will send directors who have not yet completed the survey a reminder that stresses the importance of gaining the perspective of all eligible respondents in the analysis. A second reminder will be distributed approximately one month after the initial e-mail is sent.

Maximizing response rates is critical to the administration of the aforementioned surveys.4 Calculation of the response rates is as follows:

Exhibit B-3. Calculation of response rates

Survey | Respondent | # Respondents / # Sampled | Response Rate (%)

Agency Results Survey | State Child Welfare Directors | 52/52 | 100

Agency Results Survey | Child Welfare/Social Service Directors from Tribes, Tribal Consortia, and Territories (Title IV-B grantees) | 22/22 | 100

T/TA Activity Survey | State and Tribal T/TA recipients | 98/246 | 40 (see footnote 5)

Web-Based Network Survey | T/TA Network Directors | 15/15 | 100
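Each response rate in Exhibit B-3 is the number of respondents divided by the number sampled, expressed as a percentage. The sketch below simply reproduces that arithmetic for the four rows; the function name and row labels are illustrative only. For the T/TA Activity Survey, 98 of 246 works out to about 39.8 percent, which rounds to 40.

```python
def response_rate(respondents: int, sampled: int) -> float:
    """Response rate as a percentage: respondents / sampled x 100."""
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    return 100.0 * respondents / sampled


# (respondents, sampled) pairs taken from Exhibit B-3.
exhibit_b3 = {
    "Agency Results Survey: State directors": (52, 52),
    "Agency Results Survey: Tribal/Territorial directors": (22, 22),
    "T/TA Activity Survey": (98, 246),
    "Web-Based Network Survey": (15, 15),
}

for label, (n_resp, n_sampled) in exhibit_b3.items():
    # Rounded to whole percentages, as in the exhibit.
    print(f"{label}: {response_rate(n_resp, n_sampled):.0f}%")
```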

The content and format of the three survey instruments were developed in close consultation with key stakeholders, including the ICs and NRCs. In addition, development of the Agency Results Survey and T/TA Activity Survey was informed by previously developed measures involving T/TA provision.

Strategies that emphasize flexibility, confidentiality, and a respect for the respondent’s time facilitate timely participation. The following strategies will be implemented to maximize participation in the data collection and achieve the desired response rates: 6

Introduction and notification: An introductory letter will be sent on CB letterhead to inform all new State and Tribal respondents not previously surveyed by the evaluation team about the administration of the Agency Results Survey. A description of the cross-site evaluation will be included in this mailing. For prospective Tribal respondents, this letter of introduction will also be sent to the Tribal Leader or Chairperson. Follow-up introductory calls will be made with prospective Tribal respondents to introduce the evaluation team and to address any questions about the data collection. In subsequent years, reminder emails will be sent or telephone calls made to all State and Tribal respondents. We recognize that there may be some turnover in leadership over time and that the survey may have to be re-introduced. The CB will notify potential respondents about administration of the T/TA Activity Survey through listserv announcements. Similarly, the CB will notify the IC and NRC directors about the administration of the Web-Based Network Survey through its email communications and conference calls with these groups.

Timing of data collection: Discussions were held with stakeholders to determine optimal periods for data collection in order to minimize respondent burden and to facilitate recall. The Agency Results Survey will be conducted during June 2013. Administration will continue to be coordinated with data collection efforts conducted by the ICs. The T/TA Activity Survey will be administered twice per year, in February and July. Data collection will occur within a three-month window (and, for offsite T/TA activities, within a six-week window) following T/TA provision to facilitate respondent recall. To maximize participation and ensure a timely response, the Web-Based Network Survey is discussed in advance during provider teleconferences and through email communications so that directors are prepared for and expecting the survey.

Pre-interview preparation: Child welfare agency directors who participated in the baseline and first follow-up administrations of the Agency Results Survey are already familiar with the instrument. Nonetheless, a copy of the Agency Results Survey will be sent to all respondents in advance of the telephone interview. Background information for certain survey items will be “pre-filled” using information obtained from OneNet, semi-annual reports, or agency websites. Interviewers’ prior knowledge of or familiarity with each State or Tribe’s child welfare system will expedite administration of the interview. Pre-interview preparation is not applicable to the T/TA Activity Survey and Web-Based Network Survey.

Administration: For the Agency Results Survey, the telephone interviews will be scheduled at the respondents’ convenience. The evaluation team will confirm each interview 2-3 days beforehand and reschedule interviews as necessary to accommodate changes in a Director’s schedule, given the dynamic work environment of public child welfare agencies.7 The evaluation team will do the same with Tribal respondents, given the potential for schedule changes due to community obligations or seasonal fluctuations in cultural activities (Running Wolf, Soler, Manteuffel, Sondheimer, Santiago & Erickson, 2004).8 For the T/TA Activity Survey and Web-Based Network Survey, an email notification will be sent to all sampled T/TA recipients with a request to complete the survey (i.e., by accessing a web link to an online version of the survey or by completing an attached survey and returning it via email, mail, or secure fax). Electronic participation will allow respondents to complete the survey at the most convenient time with minimal burden. Approximately one week after this initial email is sent, the first follow-up reminder email will be sent. A second reminder will be sent 10 days later to respondents who have still not completed the survey.

Alternate response methods: All respondents will be given the option to use an alternate method. For the Agency Results Survey, if a respondent prefers to submit written responses in lieu of participating in a telephone interview, we will provide him/her with a paper version to complete and return via fax, email, or mail. Similarly, paper versions of the T/TA Activity Survey and Web-Based Network Survey will be sent to respondents upon request. Alternatively, the latter surveys will be administered through a telephone interview if requested to accommodate any special needs.

Assurances of data confidentiality: Respondents to all surveys will be assured that reported data are aggregated and not attributable to individuals or organizational entities.

There are no incentives provided for participation in any of the surveys.

The four instruments contained herein were subject to review and feedback by key stakeholders, including the CB, ACF Regional Office staff, the ICs, the NRCs, and the T/TA Network.

The Agency Results Survey, T/TA Activity Survey, and Web-Based Network Survey were pilot tested to confirm survey item validity and to identify possible procedural or methodological challenges in need of attention or improvement. Pilot tests were conducted for each instrument using a sample of no more than nine respondents (i.e., former State and Tribal child welfare Directors, former child welfare agency personnel, and previous National Resource Center Directors). Following the pilot tests, the instruments were refined to minimize burden and improve utility. Similarly, a paper version of the web-based forms used in the OneNet tracking system was tested by prospective users and refined. The pilot tests were instrumental in determining the amount of time required to complete the surveys and forms and in developing the burden estimates. Since 2010, these instruments have been used in the national cross-site evaluation and have yielded valuable information regarding experiences with the T/TA services of providers and the functioning of the CB’s T/TA Network.

User access to and responsiveness to the web-based method for completing the T/TA Activity Survey, the Web-Based Network Survey, and the OneNet forms have been positive. Respondent feedback and timely responses indicate that the method is appropriate for gathering these data.

National Cross-Site Evaluation Contractor:

James Bell Associates, 1001 19th Street North, Suite 1500, Arlington, VA, (703) 528-3230

ICF International, 9300 Lee Highway, Fairfax, VA 22031, (703) 934-3000

Statistical Consultant:

Jyostha Prabhakaran, Ph.D., Statistician, ICF International, 9300 Lee Highway, Fairfax, VA 22031, (703) 934-3320

Appendix A: Cross-site evaluation research questions

Cross-Site Evaluation Questions

The evaluation questions explored through the cross-site evaluation address the work of ICs and NRCs, the quality of the T/TA delivered, the costs of services, relationships between T/TA providers and the jurisdictions receiving the T/TA, and the outcomes of IC and NRC T/TA provision to States and Tribes. Additional questions address changes in the T/TA Network over time and how providers have responded to these changes. Further, the evaluation addresses outcomes of IC and NRC T/TA provision as they relate to changes in organizational capacity, culture, climate, and child welfare systems. Outcomes related to children and families are also examined. A separate set of evaluation questions examines the identity, cohesion, and functioning of the T/TA Network. The evaluation questions are listed below.

Questions that address T/TA Services of ICs and NRCs

Is utilization of T/TA increasing among those State and Tribal child welfare systems that have demonstrated the greatest needs?

Is utilization of T/TA increasing among those State and Tribal child welfare systems that have been specifically targeted for outreach by the ICs?

What are the key factors that facilitate and impede utilization of the NRCs and ICs by State and Tribal child welfare systems?

Questions that address T/TA Quality

What is the overall quality of the T/TA that is provided by the ICs and NRCs? And is the quality of T/TA improving over the period of the study?

Questions that address Outcomes of T/TA

Have States and Tribes that have entered into formal partnerships with ICs achieved their desired outcomes?

To what degree are the T/TA activities and approaches of the ICs and NRCs resulting in changes in organizational culture/climate and capacity in State and Tribal child welfare systems?

To what degree are the T/TA activities and approaches of the ICs and NRCs resulting in desired systems change in State and Tribal child welfare systems?

What key variables affect whether desired systems change is achieved as a result of T/TA provided by the ICs and NRCs?

Have intended systems changes also resulted in improved outcomes for children and families in State and Tribal child welfare systems?

Question regarding Relationships between T/TA Providers and Jurisdictions

What is the nature and quality of the relationships and interactions established between States and Tribes (and other key constituents) and the ICs and NRCs with whom they work?

Question regarding Cost of T/TA

What are the costs of providing the type, frequency, and intensity of T/TA that is most likely to result in the desired systems change and improved outcomes?

Questions that address the T/TA Network, including Identity, Cohesion, and Functioning of Network

What is the nature and quality of the relationships and interactions established between the members of the T/TA Network?

Are collaboration, coordination, and application of the Child and Family Services Review (CFSR) and Systems of Care (SOC) principles increasing among T/TA Network members over the life of the ICs and NRCs grant awards?

To what degree does the transfer of knowledge and information take place between T/TA Network members?

To what degree are members of the T/TA Network subscribing to and promoting a shared identity through common messaging in outreach and service delivery?

What changes occur in the work of the ICs and NRCs over time with respect to roles, responsibilities, and expectations regarding how providers are expected to work with States and Tribes and one another?

Appendix B: Stakeholder Feedback

Individuals and Organizations Providing Feedback on Cross-Site Evaluation Data Collection Strategies


Review and Comments on Data Collection Approach, Instruments, and Data Collection System (OneNet)

Implementation Center Representatives

Michelle I. Graef, Ph.D.

Lauren Alper, Evaluator, NRC Diligent Recruitment @AUK, 6108 Colina Lane, Austin, TX 78759, Phone: (501) 250-2311

Cathy Sowell, LCSW, Partner, Western and Pacific Child Welfare Implementation Center, Department of Child & Family Studies, Louis de la Parte Florida Mental Health Institute, University of South Florida

John C. Levesque, Associate Center Director, National Resource Center for Adoption, 86 Loveitts Field Road, South Portland, ME 04106, Jlevesq7@maine.rr.com, Phone: (207) 809-0041

Kris Sahonchik, National Resource Center for Organizational Improvement, Director of Strategy and Coordination, Phone: (207) 780-5856

Tammy Richards, NCIC, University of Southern Maine, Muskie School of Public Service, Phone: (207) 780-5859

National Resource Center Representatives

Peter Watson, Director, Muskie School of Public Service, University of Southern Maine

Sharonlyn Harrison, Ph.D.

Scott Trowbridge, Esq., Staff Attorney, Center on Children and the Law

Gerald P. Mallon, DSW

Debbie Milner, NRC-CWDT Project Manager, Child Welfare League of America, Phone: home/office (850) 622-1567

Theresa Costello, Director, National Resource Center for Child Protective Services; Deputy Director, ACTION for Child Protection, Phone: (505) 345-2444 (office), (505) 301-3105 (mobile)

Other Network Members and Stakeholders

Linda Baker, Director, FRIENDS National Resource Center for CBCAP, Phone: (919) 768-0162

Anita P. Barbee, MSSW, Ph.D., Professor, Kent School of Social Work, University of Louisville, Phone: (502) 852-0416, (502) 852-0422

Central and Regional Office Staff

Brian Deakins, Capacity Building Division, HHS/ACF/ACYF/Children's Bureau, Phone: (202) 205-8769

John L. (Jack) Denniston, Child Welfare Program Specialist (contractor), Children's Bureau Division of Research and Innovation, ICF International, Phone: (919) 968-0784

Melissa Lim Brodowski, MSW, MPH, Office on Child Abuse and Neglect, Children's Bureau, ACYF, ACF, HHS, Washington, DC 20024, Phone: (202) 205-2629

Jesse Wolovoy

Randi Walters, Ph.D., Capacity Building Division, Washington, DC, Phone: (202) 205-5588, randi.walters@acf.hhs.gov

T/TA Activity Survey

Agency Results Survey

Web-based Network Survey

OneNet

1. T/TA Activity Survey – Sample of Reporting of Findings

Respondents reported that federal factors had somewhat more influence on their decisions to request or apply for T/TA than the other two groups of factors, T/TA Network factors and State/Tribal factors (Figure 1). Among the four individual federal factors, respondents rated CFSR findings/PIP development as the most influential and federal law or policy change as the least influential (Table 3).

Table 3: Degree of Influence on Decisions to Request or Apply for T/TA*

Factor | All Recipients | IC T/TA Recipients | NRC T/TA Recipients

Federal Factors | (n=111) | (n=49) | (n=62)
CFSR findings/PIP development | 2.4 | 2.4 | 2.3
Other Federal factors | 2.1 | 2.2 | 2.0
ACF Regional Office suggestion/referral | 2.0 | 2.0 | 1.9
Federal law or policy change | 1.5 | 1.5 | 1.5

T/TA Network Factors | (n=120) | (n=50) | (n=70)
Prior use of National Resource Center services | 2.2 | 1.9 | 2.5
Outreach to your State/Tribe by the Implementation Center in your ACF Region | 1.9 | 2.4 | 1.3
Outreach to your State/Tribe by the National Resource Center | 1.7 | 1.7 | 1.7
Prior use of Implementation Center services | 1.7 | 2.0 | 1.4
Other T/TA Network factors | 1.6 | 2.3 | 1.3
Peer networking activities facilitated by the National Resource Centers | 1.6 | 1.5 | 1.6
Peer networking activities facilitated by the ICs in your ACF Region | 1.5 | 2.0 | 1.1
Geographic proximity of the Implementation Center in your ACF Region | 1.4 | 1.6 | 1.1
Recommendation/Referral from other National Resource Centers | 1.4 | 1.4 | 1.3
Geographic proximity of the National Resource Centers | 1.2 | 1.2 | 1.2
Recommendation/Referral from another IC outside ACF Region | 1.0 | 1.1 | 1.0

State/Tribal Factors | (n=109) | (n=46) | (n=63)
Agency/organization leadership | 2.3 | 2.6 | 2.1
State/local law or policy change | 1.8 | 1.9 | 1.8
State/Tribal quality assurance review | 1.7 | 2.0 | 1.4
Other State/Tribal factors | 1.7 | 1.8 | 1.6
Lawsuit/legal settlement | 1.3 | 1.5 | 1.2
Recommendation from other State/Tribe | 1.3 | 1.3 | 1.3
Specific State/Tribal incident (e.g., child fatality) | 1.2 | 1.4 | 1.1

* 1 = No influence; 2 = Some influence; 3 = A great deal of influence

2. Agency Results Survey – Sample of Reporting of Findings

Satisfaction with T/TA Provider Follow-through

The respondents were generally satisfied with T/TA provider follow-through, as evidenced by the overall satisfaction ratings (see Table VI-2). States/Tribes that were working with an IC rated their satisfaction with the IC’s follow-through at 4.2, whereas those who had worked with NRCs rated their satisfaction with the NRCs’ follow-through at 4.5. Ratings of NRC follow-through differed only slightly between States/Tribes that worked only with NRCs (n=24, average rating 4.6) and those that worked with both NRCs and an IC (n=18, average rating 4.4).
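Subgroup averages such as these combine into an overall average only when each is weighted by its number of respondents. The sketch below illustrates that size-weighted pooling; `pooled_mean` is a hypothetical helper, and it assumes the subgroups are mutually exclusive and together make up the full group (here 24 + 18 = 42, the NRC N in Table VI-2). Because the published subgroup means are rounded to one decimal, a recomputed pooled value is only approximate.

```python
def pooled_mean(groups):
    """Combine subgroup means into an overall mean, weighting each by its n.

    `groups` is a list of (n, mean) pairs for mutually exclusive subgroups.
    """
    total_n = sum(n for n, _ in groups)
    if total_n == 0:
        raise ValueError("groups must contain at least one respondent")
    return sum(n * mean for n, mean in groups) / total_n


# NRC follow-through: NRC-only (n=24, mean 4.6) and NRC-plus-IC (n=18, mean 4.4).
overall = pooled_mean([(24, 4.6), (18, 4.4)])
print(round(overall, 2))  # 4.51, consistent with the reported 4.5

# IC accessibility: worked with an IC (n=19, mean 4.4) vs. NRCs only (n=20, mean 3.1).
print(round(pooled_mean([(19, 4.4), (20, 3.1)]), 2))  # 3.73, consistent with 3.7
```

A simple unweighted average of the two subgroup means would give 4.5 and 3.75 in these cases; the weighted form is the one that matches how an overall mean over all respondents is computed.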

Table VI-2: T/TA Provider Follow-through

Survey item / Provider | All: N | All: Mean score* | States: N | States: Mean score* | Tribes: N | Tribes: Mean score*

How satisfied have you been with the level of follow-through from your T/TA providers
IC | 17 | 4.2 | 14 | 4.1 | 3 | 4.7
NRCs | 42 | 4.5 | 37 | 4.6 | 5 | 4.2

*Based on a 5-point scale. The response categories are: 1=not at all, 2=a little, 3=somewhat, 4=a lot, and 5=very much.

Satisfaction with Communication with the T/TA Providers

Table VI-3: Satisfaction with Communication with T/TA Providers

Survey item / Provider | All: N | All: Mean score* | States: N | States: Mean score* | Tribes: N | Tribes: Mean score*

How satisfied are you with the level of accessibility of the T/TA providers
IC | 39 | 3.7 | 32 | 3.7 | 7 | 3.9
NRCs | 48 | 4.4 | 40 | 4.5 | 8 | 3.5

When working with the T/TA providers, how satisfied have you been with the frequency of communication
IC | 17 | 4.2 | 14 | 4.1 | 3 | 4.7
NRCs | 43 | 4.4 | 38 | 4.5 | 5 | 4.0

When working with the T/TA providers, do you feel [State/Tribe] plays active part in decision-making
IC | 17 | 4.5 | 14 | 4.6 | 3 | 4.0
NRCs | 42 | 4.6 | 38 | 4.6 | 4 | 4.5

*Based on a 5-point scale. The response categories are: 1=not at all, 2=a little, 3=somewhat, 4=a lot, and 5=very much.

Information on respondents’ experiences and satisfaction with communication with the T/TA providers came from survey items addressing three dimensions of communication: States/Tribes rated the accessibility of the ICs and NRCs, the frequency of communication when working with the providers, and decision-making within the T/TA process (see Table VI-3). Some respondents also provided clarifying comments or suggestions for how communication could be improved.

Satisfaction with the level of accessibility of the ICs. Overall, respondents were “somewhat” satisfied with the accessibility of the ICs, as indicated by an average rating of 3.7 based on responses from 39 interviewees. Not surprisingly, however, respondents who had worked with an IC assigned to their State/Tribe rated IC accessibility considerably higher (average rating of 4.4, based on 19 respondents) than did those who had worked only with NRCs and had no real experience with the ICs (average rating of 3.1, based on 20 respondents). Some respondents noted that they had not communicated with the IC because they had not needed to. One State respondent explained that when the agency submits TA requests to an NRC, the TA includes implementation support, so the agency has not needed to connect with the IC. He further noted that if the CB were to define the role of the NRCs as pre-implementation only, the agency would change its TA requests accordingly.

Satisfaction with the level of accessibility of the NRCs. Respondents rated their satisfaction at 4.4 (“a lot”) on average. There were no meaningful differences in ratings between those who had used only NRCs’ T/TA and those who had used both NRCs and an IC. However, States rated NRCs’ accessibility considerably higher (4.5) than did Tribes (3.5).

3. Web-based Network Survey – Sample of Reporting of Findings

III. Collaboration and Coordination among T/TA Network Members

In an effort to begin to address the cross-site evaluation question, “Are collaboration and coordination increasing among T/TA Network members over the life of the projects?”, respondents were asked questions regarding their satisfaction with information sharing, coordination of services, collaboration, and Network member contributions to the Network. The four variables used to capture collaboration and coordination among T/TA Network members were: 1) Collaboration and information sharing; 2) Barriers to collaboration; 3) Perceived sense of contribution and value; and 4) Service coordination.


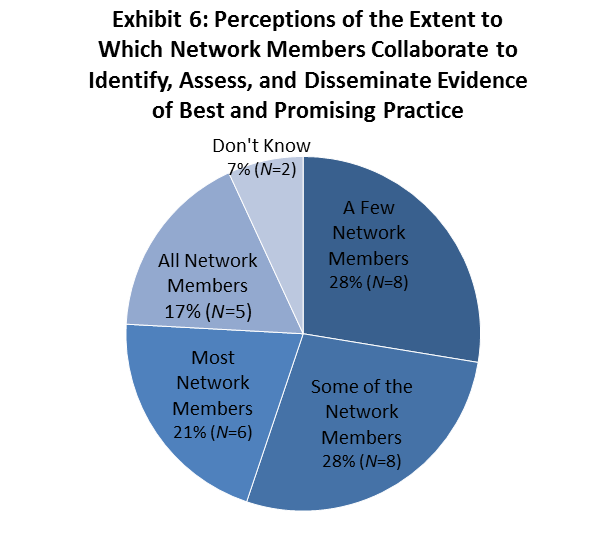

Network Member Collaboration to Identify, Assess, and Disseminate Evidence of Best and Promising Practice. Respondents were asked to indicate the extent to which Network members collaborate to identify, assess, and disseminate evidence of best and promising practice in Child Welfare. Responses were based on a 5-point Likert scale in which 0= none of the Network members, 1=a few Network members, 2=some Network members, 3=most Network members, and 4=all Network members. Network member responses can be seen in Exhibit 6.

Responses indicate that, on average, members of the T/TA Network perceived only some Network members as collaborating to identify, assess, and disseminate evidence of best and promising practice in Child Welfare (M=2.30, N=29).

Two respondents, both Extended Network members, indicated that they were unable to say whether Network members collaborate to identify, assess, and disseminate evidence of best and promising practice in Child Welfare (a “don’t know” response on the survey).

Five respondents (2 NRCs; 2 Extended Network members; and 1 IC) reported that all Network members collaborate to identify, assess, and disseminate evidence of best and promising practice in Child Welfare.
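On the 0-4 collaboration scale, a mean is only meaningful over scored answers, so “don’t know” responses have to be set aside rather than coded as zero. The Python sketch below assumes that this is how the reported N=29 was obtained (the section reports the two “don’t know” respondents separately but does not state the coding rule). The response list and the helpers `summarize` and `nearest_point` are hypothetical.

```python
from statistics import mean

# Codes for the 0-4 collaboration scale described above
SCALE = {
    "none of the Network members": 0,
    "a few Network members": 1,
    "some Network members": 2,
    "most Network members": 3,
    "all Network members": 4,
}

def summarize(responses):
    """Return (mean, n) over scored answers only.

    Anything not on the scale (e.g., "don't know") is treated as missing,
    so it neither pulls the mean toward zero nor inflates n.
    """
    scored = [SCALE[r] for r in responses if r in SCALE]
    if not scored:
        return None, 0
    return mean(scored), len(scored)

def nearest_point(m):
    """Label of the scale point closest to a mean score."""
    return min(SCALE, key=lambda label: abs(SCALE[label] - m))

# Hypothetical responses, not survey data
responses = [
    "some Network members",
    "most Network members",
    "all Network members",
    "don't know",
    "a few Network members",
]
m, n = summarize(responses)
print(f"M={m:.2f}, N={n}")
print(nearest_point(2.30))
```

Under this rule, a reported mean of 2.30 sits closest to the “some Network members” point, which matches the interpretation given above.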

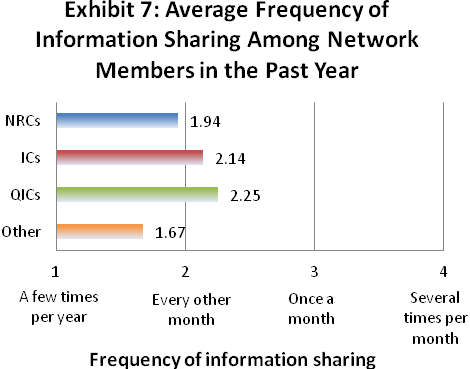

Information Sharing Among Network Members. Respondents who indicated that in the past year they interacted with a given T/TA Network member sometimes/occasionally, frequently/often, or very often were also asked to estimate how frequently they shared TA materials, products, and knowledge with that member in the past year (1 = a few times per year, 2 = every other month, 3 = once a month, and 4 = several times per month). Exhibit 7 depicts group-level information sharing within the past year, while Exhibit 8 illustrates the average frequency of information sharing with individual Network members in the past year.
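Because the information-sharing scale runs from 1 to 4 rather than 0 to 4, a reported mean such as M=1.94 is read against the closest scale point. A small Python sketch of that reading is below. Treating “closest point” as the interpretation rule is an assumption for illustration (the text uses softer phrasing such as “nearly every other month” for means just under a point), and `nearest_label` is a hypothetical helper.

```python
# Codes for the 1-4 information-sharing frequency scale described above
FREQUENCY_LABELS = {
    1: "a few times per year",
    2: "every other month",
    3: "once a month",
    4: "several times per month",
}

def nearest_label(mean_score):
    """Map a mean on the 1-4 scale to the label of the closest scale point.

    Exact ties (e.g., 2.5) resolve to the lower point, because min()
    returns the first key with the smallest distance.
    """
    if not 1 <= mean_score <= 4:
        raise ValueError("mean must lie on the 1-4 scale")
    point = min(FREQUENCY_LABELS, key=lambda k: abs(k - mean_score))
    return FREQUENCY_LABELS[point]

# Means reported in this section
for m in (1.94, 2.25, 2.83, 3.00):
    print(f"{m:.2f} -> {nearest_label(m)}")
```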


The average frequency of information sharing among all respondents in the past year was about every other month (M=1.94, N=29).

Information sharing occurred most frequently with QIC-ChildRep (M=3.00, N=2); QIC-DR (M=2.83, N=6); and the Child Welfare Information Gateway (M=2.71, N=24).

The most frequent information sharing occurred with the QICs as a group: Network members shared information with QICs about every other month (M=2.25).

Network members indicated that on average, they shared information with ICs as a group every other month (M=2.14) and with NRCs nearly every other month (M=1.94).

Respondents communicated with Extended Network members as a group nearly every other month (M=1.86).
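Exhibit 7 reports group-level means and Exhibit 8 reports member-level means, but the section does not say how the group figures were computed. Two conventions are plausible: pooling every individual rating of a group’s members, or averaging the member-level means. The Python sketch below contrasts them on hypothetical ratings (the member names `QIC-A`, `QIC-B`, and `NRC-A` are invented). The two conventions diverge whenever members have unequal numbers of raters, as with QIC-ChildRep (N=2) and QIC-DR (N=6) above.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings: (Network member rated, member's group, score on 1-4 scale)
ratings = [
    ("QIC-A", "QICs", 3), ("QIC-A", "QICs", 3),
    ("QIC-B", "QICs", 2), ("QIC-B", "QICs", 1),
    ("QIC-B", "QICs", 2), ("QIC-B", "QICs", 1),
    ("NRC-A", "NRCs", 2), ("NRC-A", "NRCs", 1),
]

def member_means(rows):
    """Mean score for each individual Network member."""
    by_member = defaultdict(list)
    for member, _group, score in rows:
        by_member[member].append(score)
    return {m: mean(s) for m, s in by_member.items()}

def group_means_pooled(rows):
    """Group mean over every individual rating of the group's members."""
    by_group = defaultdict(list)
    for _member, group, score in rows:
        by_group[group].append(score)
    return {g: mean(s) for g, s in by_group.items()}

def group_means_of_members(rows):
    """Unweighted mean of the member-level means within each group."""
    group_of = {member: group for member, group, _score in rows}
    per_group = defaultdict(list)
    for member, m in member_means(rows).items():
        per_group[group_of[member]].append(m)
    return {g: mean(ms) for g, ms in per_group.items()}

pooled = group_means_pooled(ratings)
of_members = group_means_of_members(ratings)
for g in ("QICs", "NRCs"):
    print(f"{g}: pooled={pooled[g]:.2f}, mean of members={of_members[g]:.2f}")
```

Pooling gives heavily rated members more influence, while averaging member means treats every member equally; either is defensible, but a report should state which one it used.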

4. OneNet – Sample of Reporting of Findings

What types of T/TA are being provided to States and Tribes?

Figure 11 displays the percentage of T/TA hours by T/TA type and provider. Over the one-year timeframe, the most common T/TA type was consultation/problem solving/discussion.⁹ Slightly less than 45% of all IC and NRC T/TA involved general consultation and problem solving; the two other categories that each accounted for more than 10% of T/TA were dissemination of information and facilitation. In brief, both types of providers delivered similar percentages of T/TA in their three top categories: (1) consultation/problem solving/discussion, (2) dissemination of information, and (3) facilitation. Together, these three categories constituted 75% of IC activities and 73% of NRC activities. In the future, the National Evaluation team will assess whether and how T/TA types change over time. Changes in T/TA type and focus may also be more readily apparent if data are displayed by specific implementation project or T/TA request rather than in aggregate form.
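Figure 11’s percentages are shares of T/TA hours within each provider type, and the 75% and 73% figures are the combined share of each provider’s three largest categories. A hedged Python sketch of both calculations follows. The hour totals and the “training” and “other” categories are invented for illustration; the actual category list is defined in the OneNet User Guide and is not reproduced here. The sketch also assumes percentages are computed over hours, as the Figure 11 description states, rather than over counts of activities.

```python
from collections import Counter

# Hypothetical T/TA hours by provider and T/TA type (illustrative, not OneNet data).
# Only the first three type names come from the text; the rest are placeholders.
hours = {
    "IC": Counter({
        "consultation/problem solving/discussion": 40,
        "dissemination of information": 15,
        "facilitation": 12,
        "training": 8,
        "other": 5,
    }),
    "NRC": Counter({
        "consultation/problem solving/discussion": 60,
        "dissemination of information": 20,
        "facilitation": 10,
        "training": 6,
        "other": 4,
    }),
}

def percent_by_type(type_hours):
    """Share of a provider's total T/TA hours spent on each T/TA type."""
    total = sum(type_hours.values())
    return {t: 100 * h / total for t, h in type_hours.items()}

def top_k_share(type_hours, k=3):
    """Combined percentage of hours in the k largest T/TA types."""
    total = sum(type_hours.values())
    return 100 * sum(h for _, h in type_hours.most_common(k)) / total

for provider, type_hours in hours.items():
    print(f"{provider}: top-3 share = {top_k_share(type_hours):.1f}%")

# Pooled across both provider types, as in "all IC and NRC T/TA"
combined = hours["IC"] + hours["NRC"]
combined_pct = percent_by_type(combined)
print(f"All: consultation = {combined_pct['consultation/problem solving/discussion']:.1f}%")
```

Pooling the two Counters before computing shares weights each provider by its total hours, which is one reading of “all IC and NRC T/TA”; averaging the two providers’ percentages instead would give a different figure whenever their totals differ.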

What ‘stage’ of implementation (e.g., steps in the change process) are T/TA activities targeting?

One of the overarching frameworks used by Network providers concerns how an organization’s readiness for change may affect (1) identification of need, (2) the ability to select the appropriate organizational change strategy, and (3) the likelihood that the organization will be able to successfully plan, implement, and sustain positive organizational change.

The Children’s Bureau has guided such critical thinking by engaging the National Implementation Research Network¹⁰ and other implementation science researchers to present at multiple T/TA conferences on readiness, implementation, and the various ‘stages’ that organizations and communities go through to plan for and implement complex systems and organizational change strategies intended to benefit their consumers (in this case, children and families).

1 Timely data entry in the OneNet system is part of the ICs’ and NRCs’ work responsibility and federal reporting requirements. OneNet will be used to take a census of identified TA recipients for the T/TA Activity Survey and is addressed below.

2 Trochim, W. M. K. (2001). The Research Methods Knowledge Base. Cincinnati, OH: Atomic Dog Publishing.

3 In general, because the approach used asks people to respond as key informants rather than as individuals who participate in a training event and then respond once to a survey (as typically happens with TA evaluation surveys), the number of potential respondents is consequently smaller.

4 This section does not address data collection for OneNet because data entry is part of the grantees’ work responsibilities and reporting requirements. The OneNet system incorporates several features that help facilitate timely reporting, including drop-down options and tabs on the OneNet forms that were added in response to requests to better organize information and reduce the number of narrative fields users must complete. These features make it easier for respondents to fill in the forms.

5 A response rate of 40 percent is expected for the T/TA Activity Survey. This is based on response rates for the four previous administrations of the survey, which averaged 40 percent (overall, 206 of 508 surveys were returned). It is important to note that agencies often justify continued data collection with surveys yielding lower response rates when they are seeking to gather information that is not intended to be generalized to a target population. Examples of such collections include some customer satisfaction surveys, website user surveys, and other qualitative or anecdotal collections.

6 Strategies that pertain to two or more data collections are discussed together.

7 Brooks, D., & Wind, L. H. (2002). Challenges implementing and evaluating child welfare demonstration projects. Children and Youth Services Review, 24(6/7), 379-383. Solomon, B. (2002). Accountability in public child welfare: Linking program theory, program specification and program evaluation. Children and Youth Services Review, 24(6/7), 385-407.

8 Running Wolf, P., Soler, R., Manteuffel, B., Sondheimer, D., Santiago, R.L., Erickson, J. (2004). Cultural Competence Approaches to Evaluation in Tribal Communities. Rockville, MD: Substance Abuse and Mental Health Services Administration.

9 For specific categories, including how the different types of T/TA modes are operationalized, please see Appendix A in the OneNet User Guide.

10 National Implementation Research Network (NIRN) – For more information, please see http://www.fpg.unc.edu/~nirn/default.cfm.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Modified | 0000-00-00 |

| File Created | 2021-01-29 |
